Notes: it is customary in high energy particle/nuclear physics for authors to be listed in alphabetical order. Experimental collaborations are often large (hundreds to thousands!) and it is typical to list everyone as authors. The papers and notes listed below are only the ones with substantial group contribution. The publications below are ordered by date of arXiv posting (or equivalent).

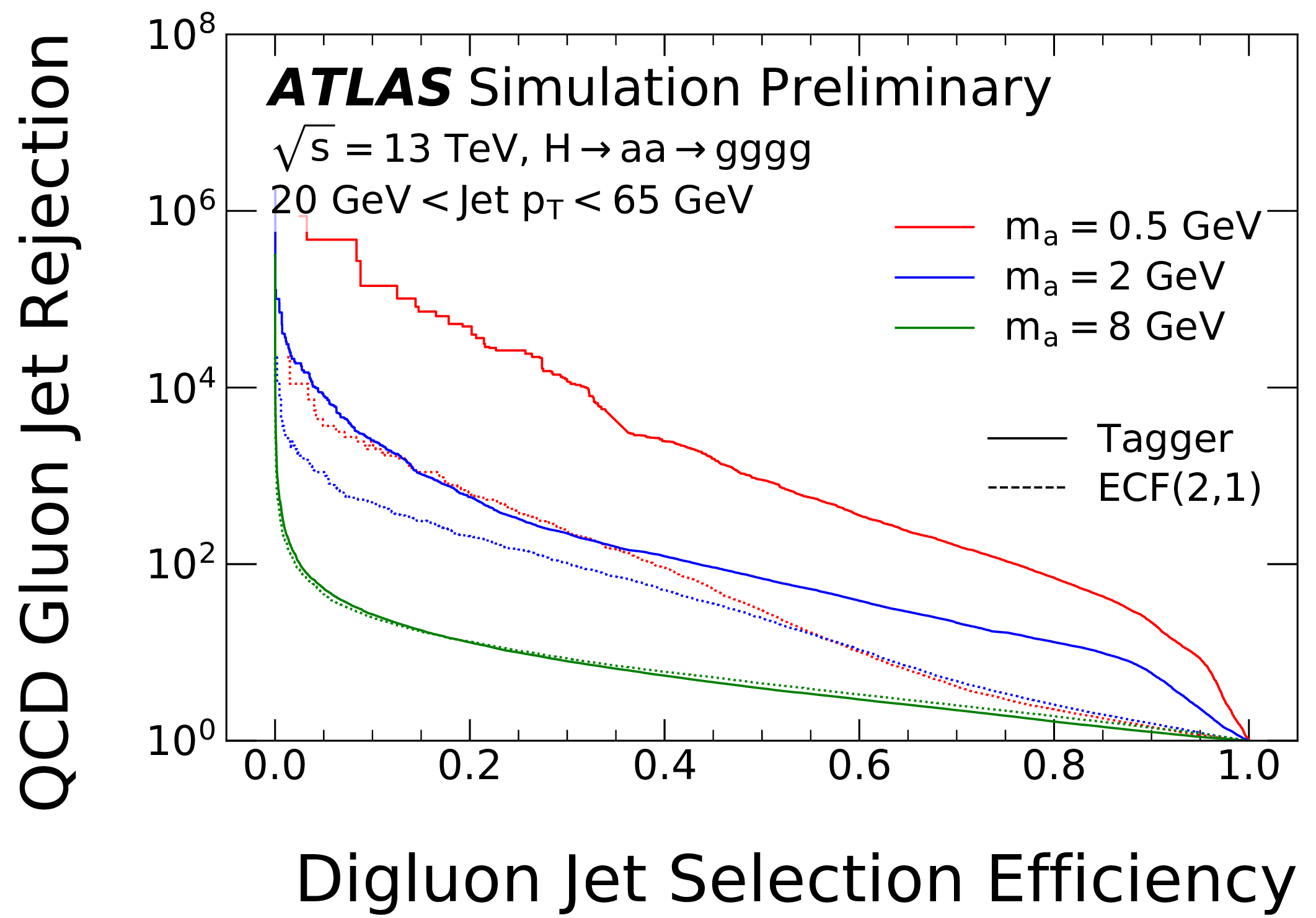

author="{J. Geuskens, N. Gite, M. Krämer, V. Mikuni, A. Mück, B. Nachman, H. Reyes-González}",

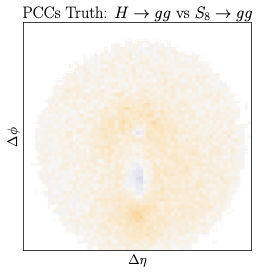

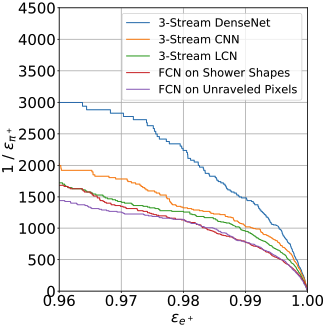

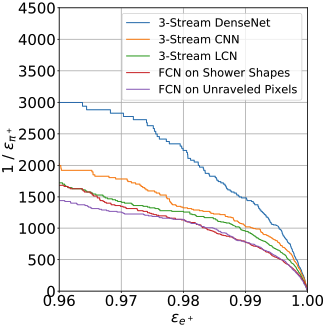

title="{The Fundamental Limit of Jet Tagging}",

eprint="2411.02628",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2024"}

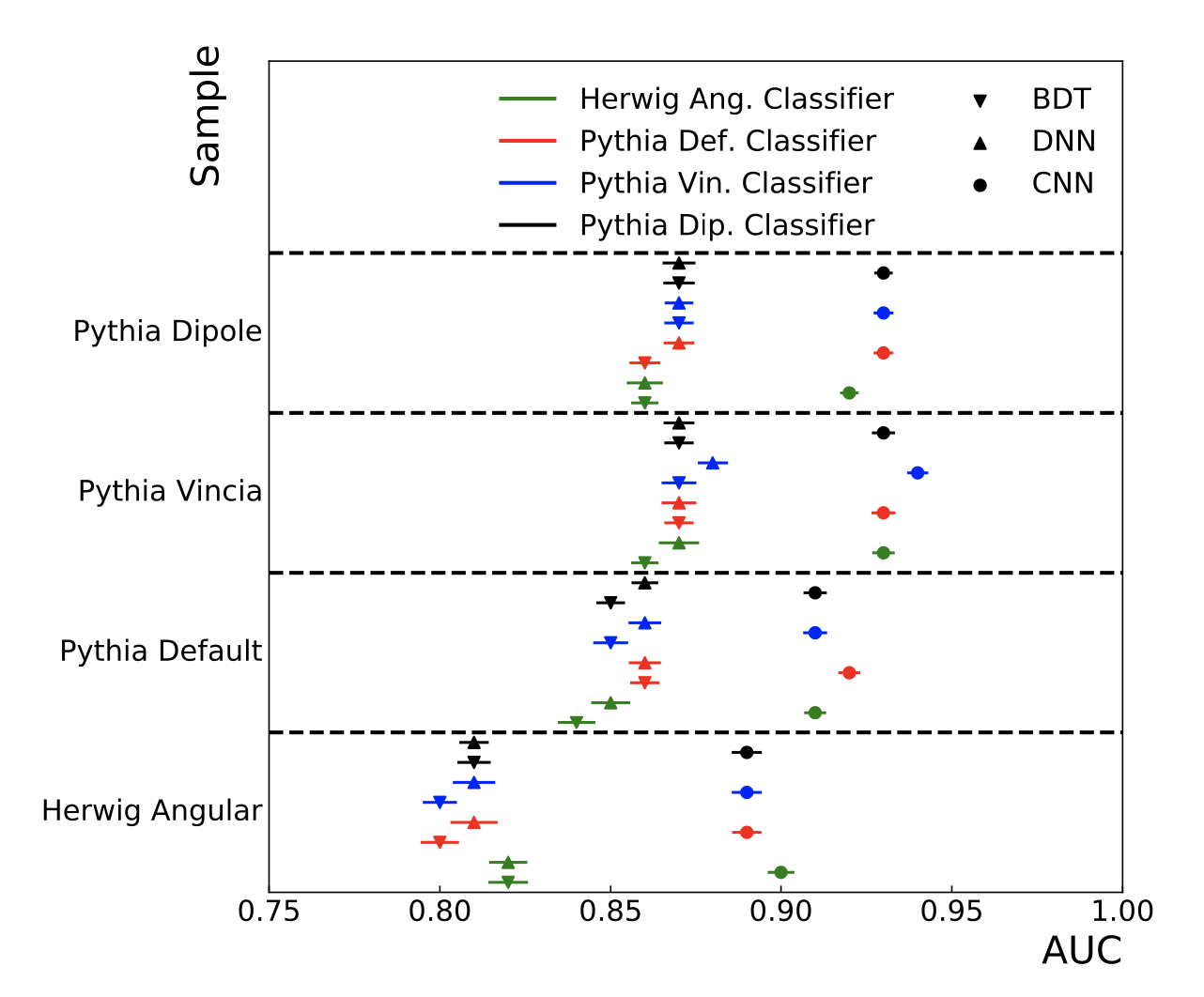

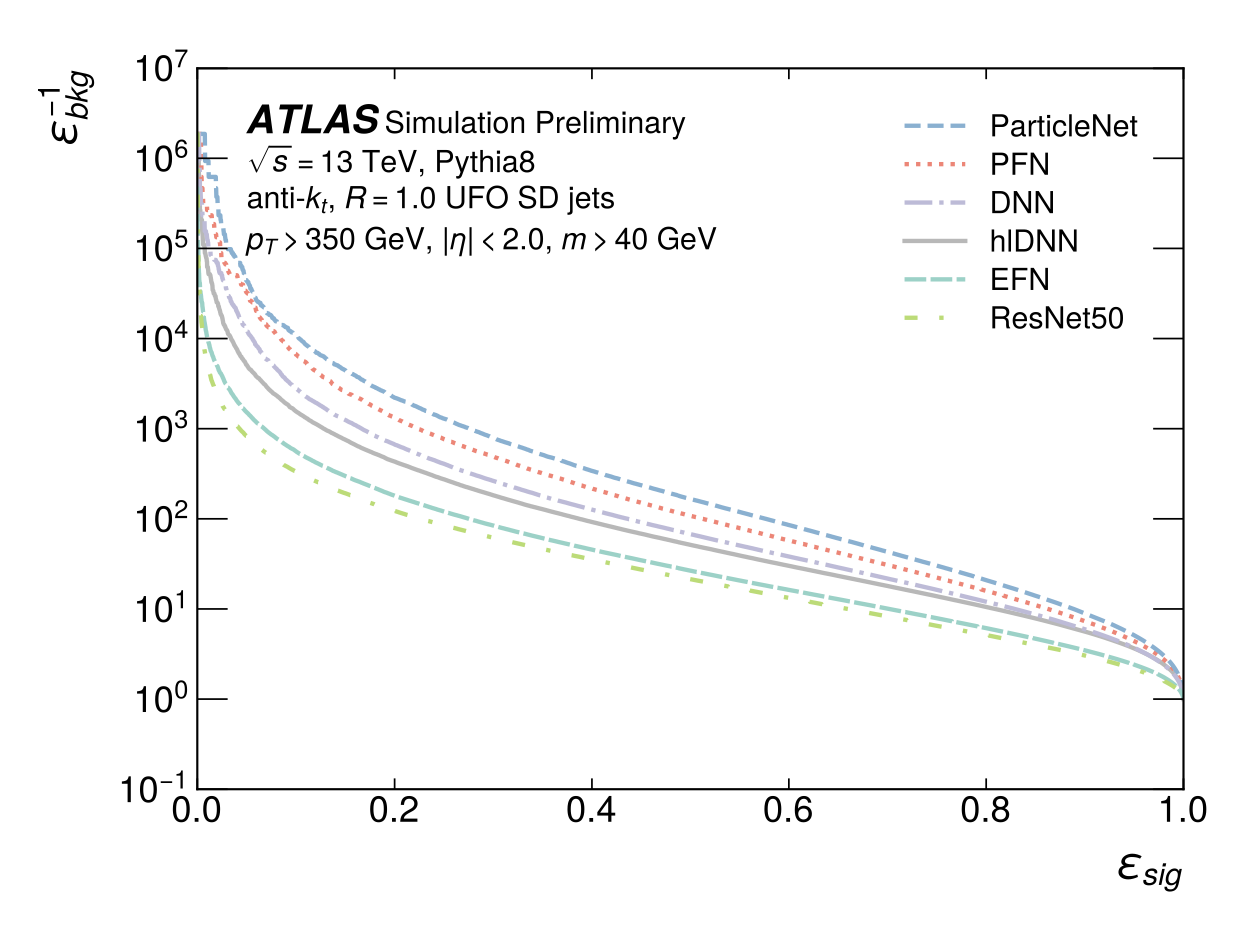

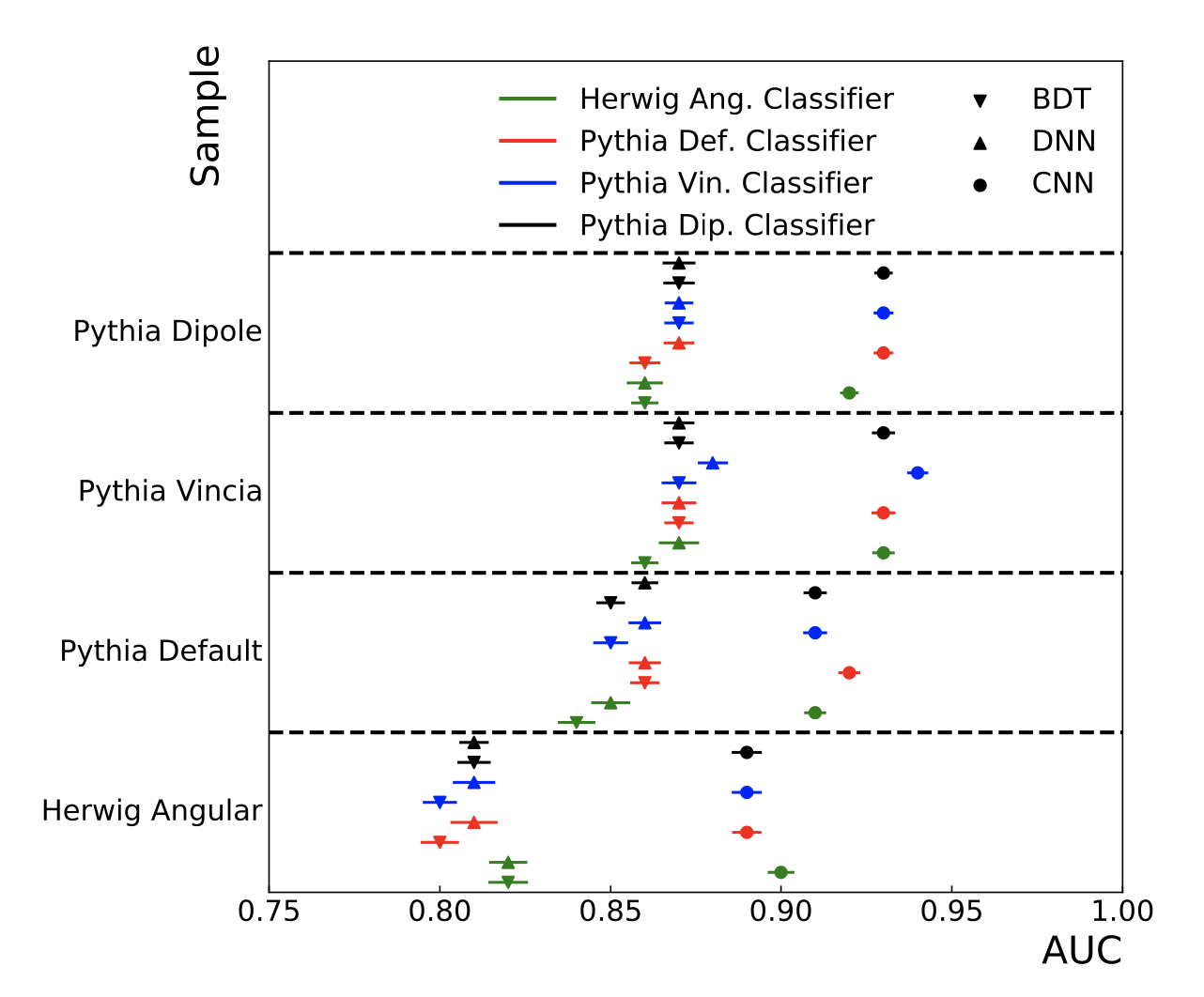

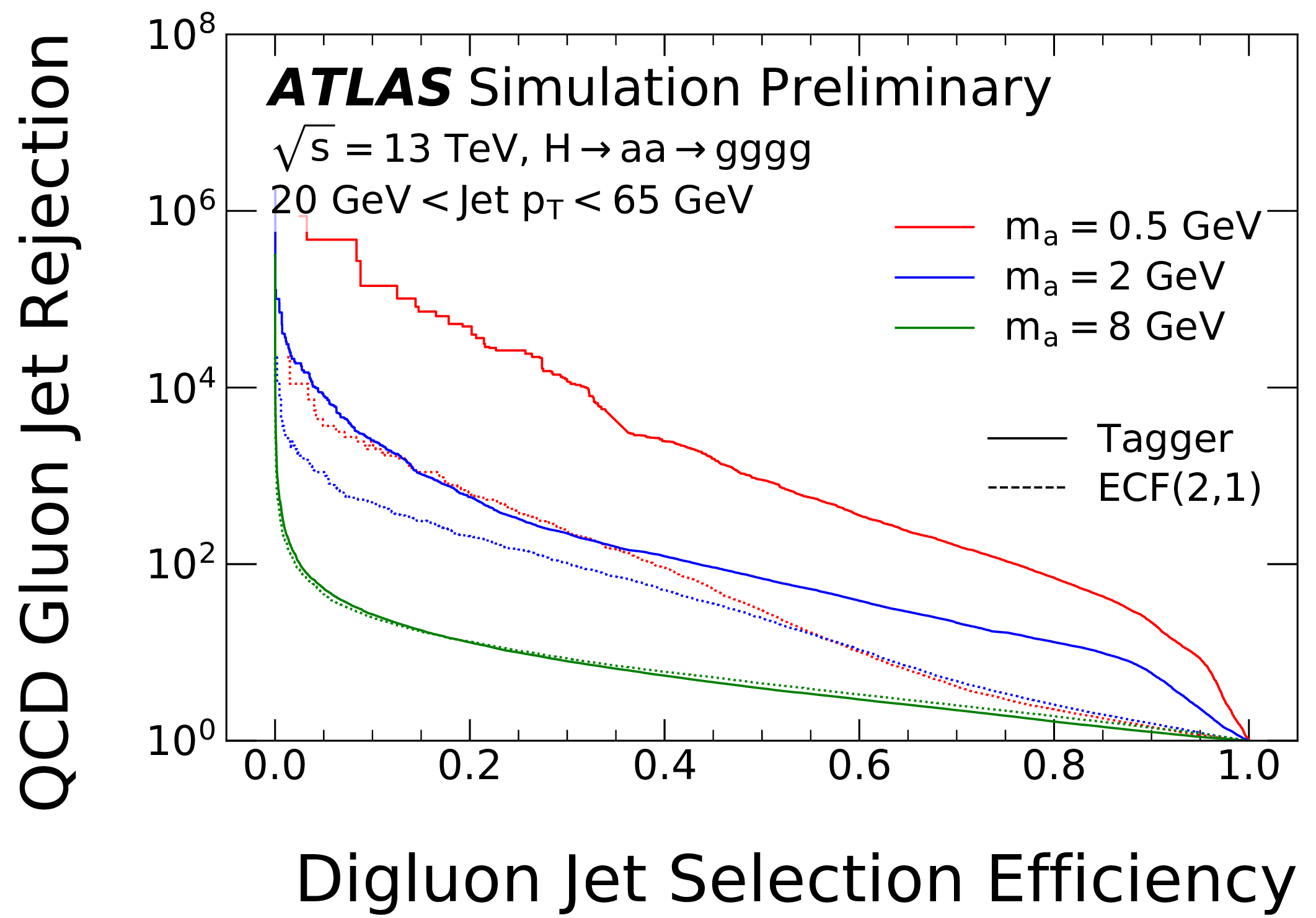

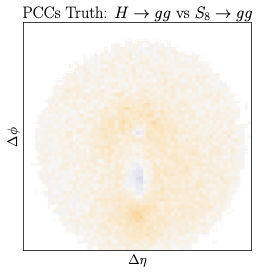

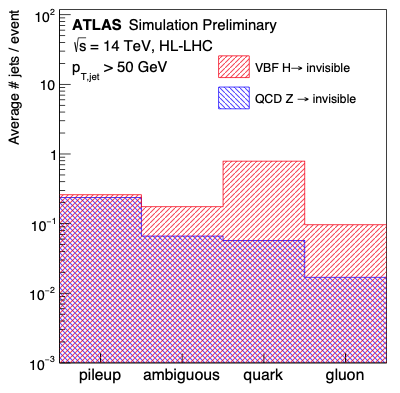

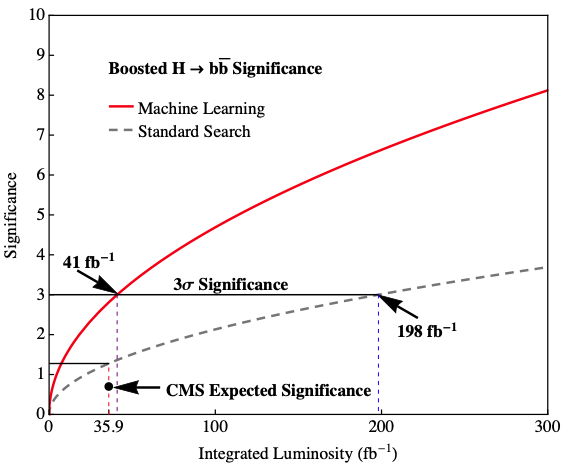

Identifying the origin of high-energy hadronic jets ('jet tagging') has been a critical benchmark problem for machine learning in particle physics. Jets are ubiquitous at colliders and are complex objects that serve as prototypical examples of collections of particles to be categorized. Over the last decade, machine learning-based classifiers have replaced classical observables as the state of the art in jet tagging. Increasingly complex machine learning models are leading to increasingly more effective tagger performance. Our goal is to address the question of convergence -- are we getting close to the fundamental limit on jet tagging or is there still potential for computational, statistical, and physical insights for further improvements? We address this question using state-of-the-art generative models to create a realistic, synthetic dataset with a known jet tagging optimum. Various state-of-the-art taggers are deployed on this dataset, showing that there is a significant gap between their performance and the optimum. Our dataset and software are made public to provide a benchmark task for future developments in jet tagging and other areas of particle physics.

author="{A. Butter, S. Diefenbacher, N. Huetsch, V. Mikuni, B. Nachman, S. Palacios Schweitzer, T. Plehn}",

title="{Generative Unfolding with Distribution Mapping}",

eprint="2411.02495",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2024"}

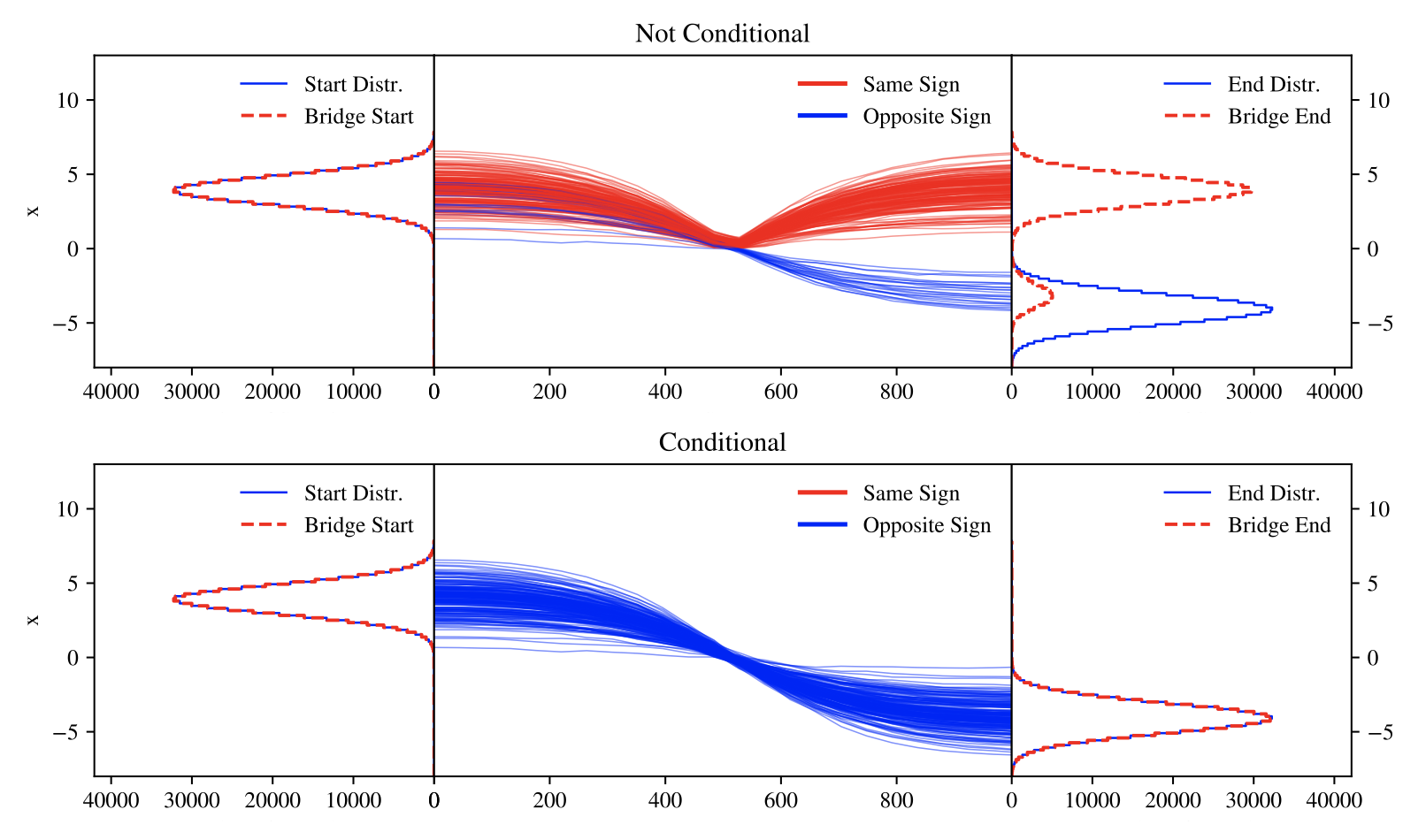

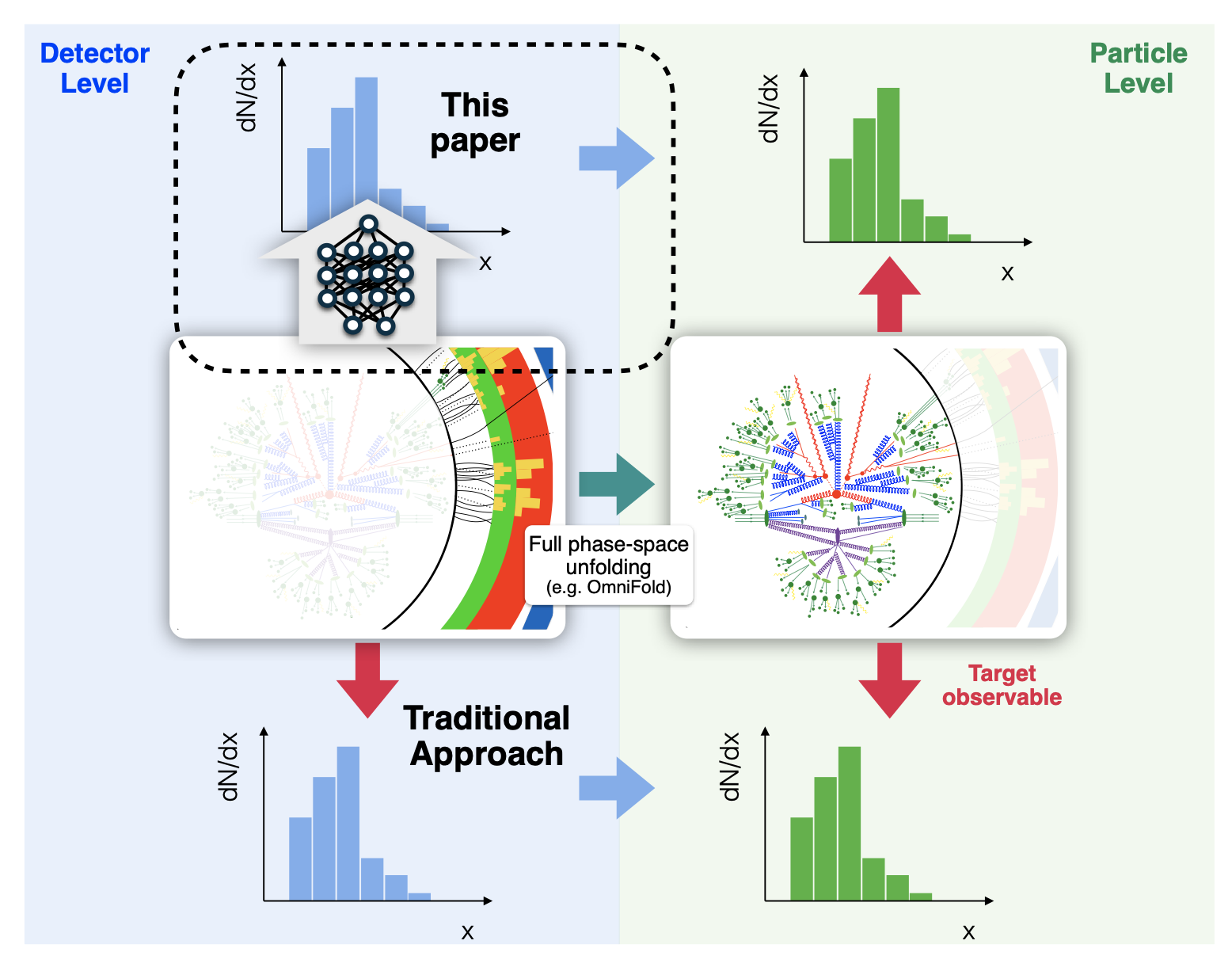

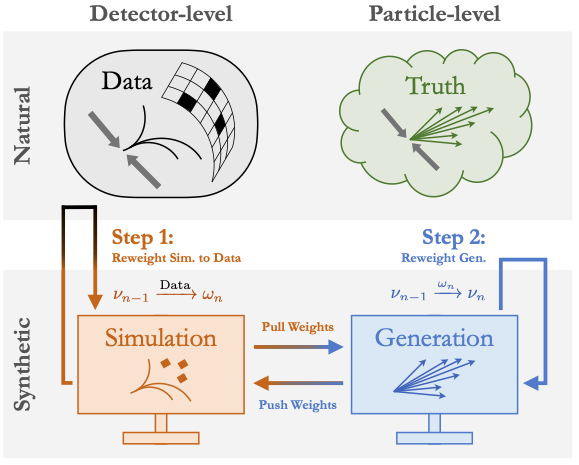

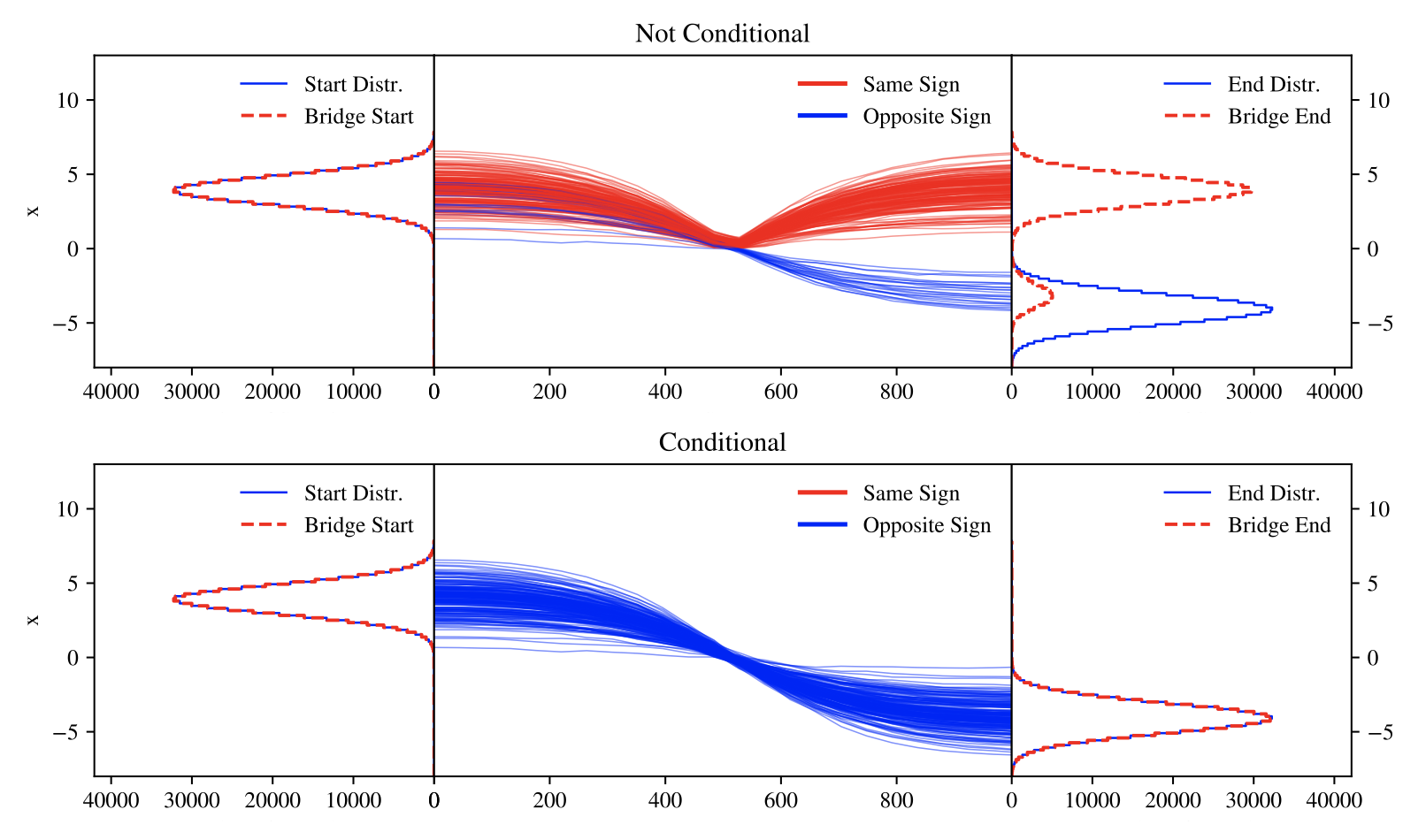

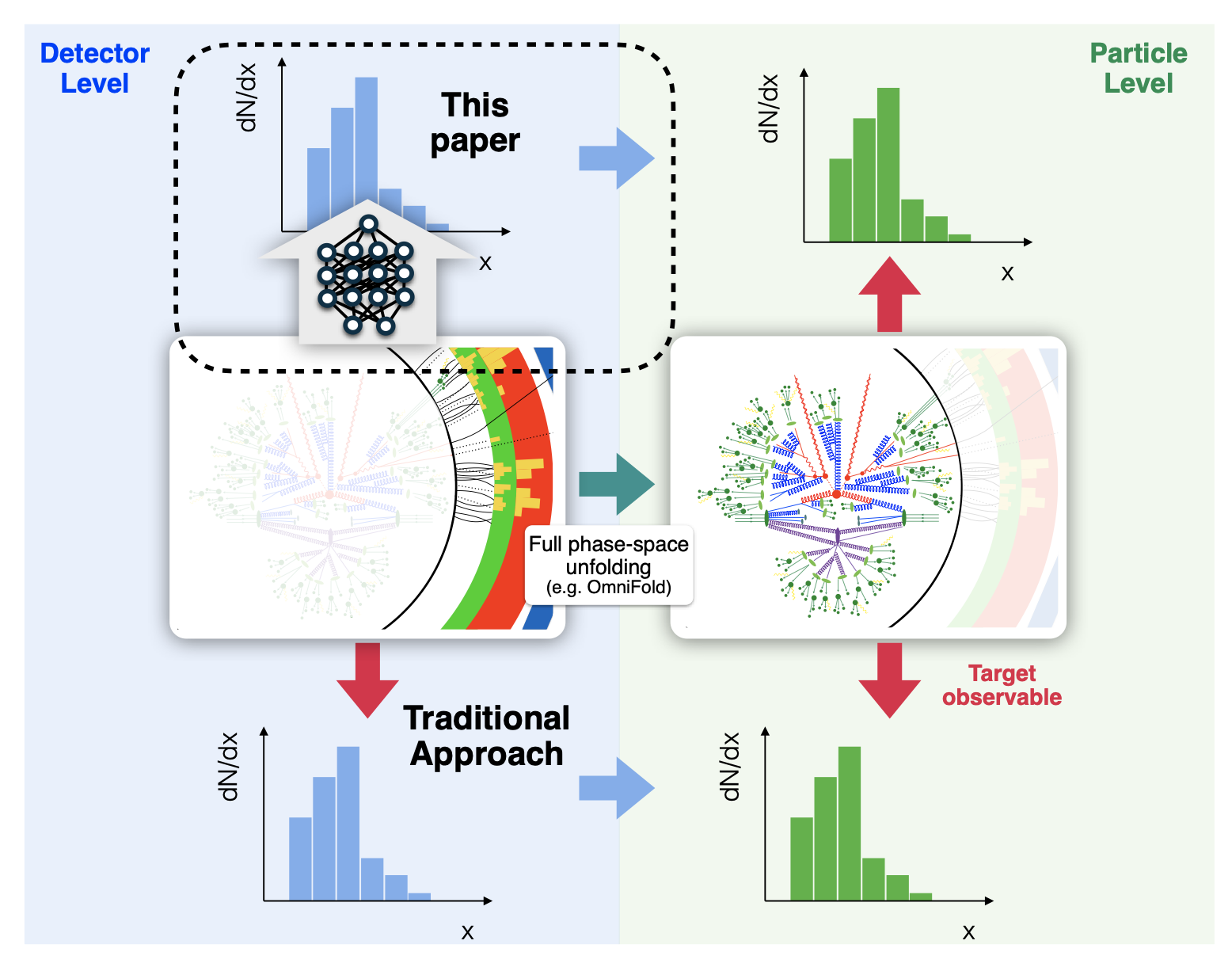

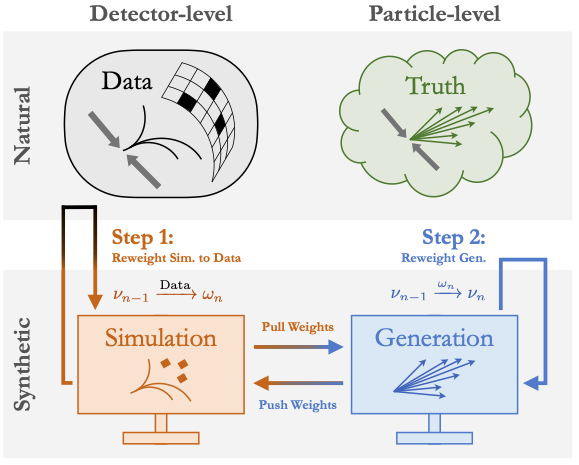

Machine learning enables unbinned, highly-differential cross section measurements. A recent idea uses generative models to morph a starting simulation into the unfolded data. We show how to extend two morphing techniques, Schrödinger Bridges and Direct Diffusion, in order to ensure that the models learn the correct conditional probabilities. This brings distribution mapping to a similar level of accuracy as the state-of-the-art conditional generative unfolding methods. Numerical results are presented with a standard benchmark dataset of single jet substructure as well as for a new dataset describing a 22-dimensional phase space of Z + 2-jets.

author="{N. Heller, P. Ilten, T. Menzo, S. Mrenna, B. Nachman, A. Siodmok, M. Szewc, A. Youssef}",

title="{Rejection Sampling with Autodifferentiation -- Case study: Fitting a Hadronization Model}",

eprint="2411.02194",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2024"}

We present an autodifferentiable rejection sampling algorithm termed Rejection Sampling with Autodifferentiation (RSA). In conjunction with reweighting, we show that RSA can be used for efficient parameter estimation and model exploration. Additionally, this approach facilitates the use of unbinned machine-learning-based observables, allowing for more precise, data-driven fits. To showcase these capabilities, we apply an RSA-based parameter fit to a simplified hadronization model.
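The combination of rejection sampling and reweighting mentioned in this abstract can be illustrated with a minimal sketch. This is not the RSA algorithm itself and involves no automatic differentiation: the toy density `f(x; a) = (a + 1) * x**a` on `[0, 1]`, the function names, and the parameter values are all assumptions chosen for illustration. Samples are drawn once at a nominal parameter value and then reweighted to a different value without regenerating them.

```python
import random


def sample_power_law(a, n_proposals, rng):
    """Rejection-sample f(x; a) = (a + 1) * x**a on [0, 1] from a uniform proposal."""
    accepted = []
    for _ in range(n_proposals):
        x = rng.random()
        # Accept with probability x**a; the envelope is 1 because x**a <= 1 on [0, 1].
        if rng.random() < x ** a:
            accepted.append(x)
    return accepted


def reweight(samples, a_old, a_new):
    """Per-sample weights f(x; a_new) / f(x; a_old)."""
    norm = (a_new + 1.0) / (a_old + 1.0)
    return [norm * x ** (a_new - a_old) for x in samples]


def weighted_mean(samples, weights):
    return sum(w * x for x, w in zip(samples, weights)) / sum(weights)


rng = random.Random(0)  # fixed seed so the toy is reproducible
samples = sample_power_law(a=1.0, n_proposals=200_000, rng=rng)
weights = reweight(samples, a_old=1.0, a_new=2.0)

mean_nominal = sum(samples) / len(samples)          # analytic value: 2/3
mean_reweighted = weighted_mean(samples, weights)   # analytic value: 3/4
```

In this toy, the reweighted mean should approach the analytic mean of the alternative parameter value, which is the property that makes a parameter fit possible without resampling at every step.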

author="{W. Bhimji, P. Calafiura, R. Chakkappai, Y. Chou, S. Diefenbacher, J. Dudley, S. Farrell, A. Ghosh, I. Guyon, C. Harris, S. Hsu, E. Khoda, R. Lyscar, A. Michon, B. Nachman, P. Nugent, M. Reymond, D. Rousseau, B. Sluijter, B. Thorne, I. Ullah, Y. Zhang}",

title="{FAIR Universe HiggsML Uncertainty Challenge Competition}",

eprint="2410.02867",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2024"}

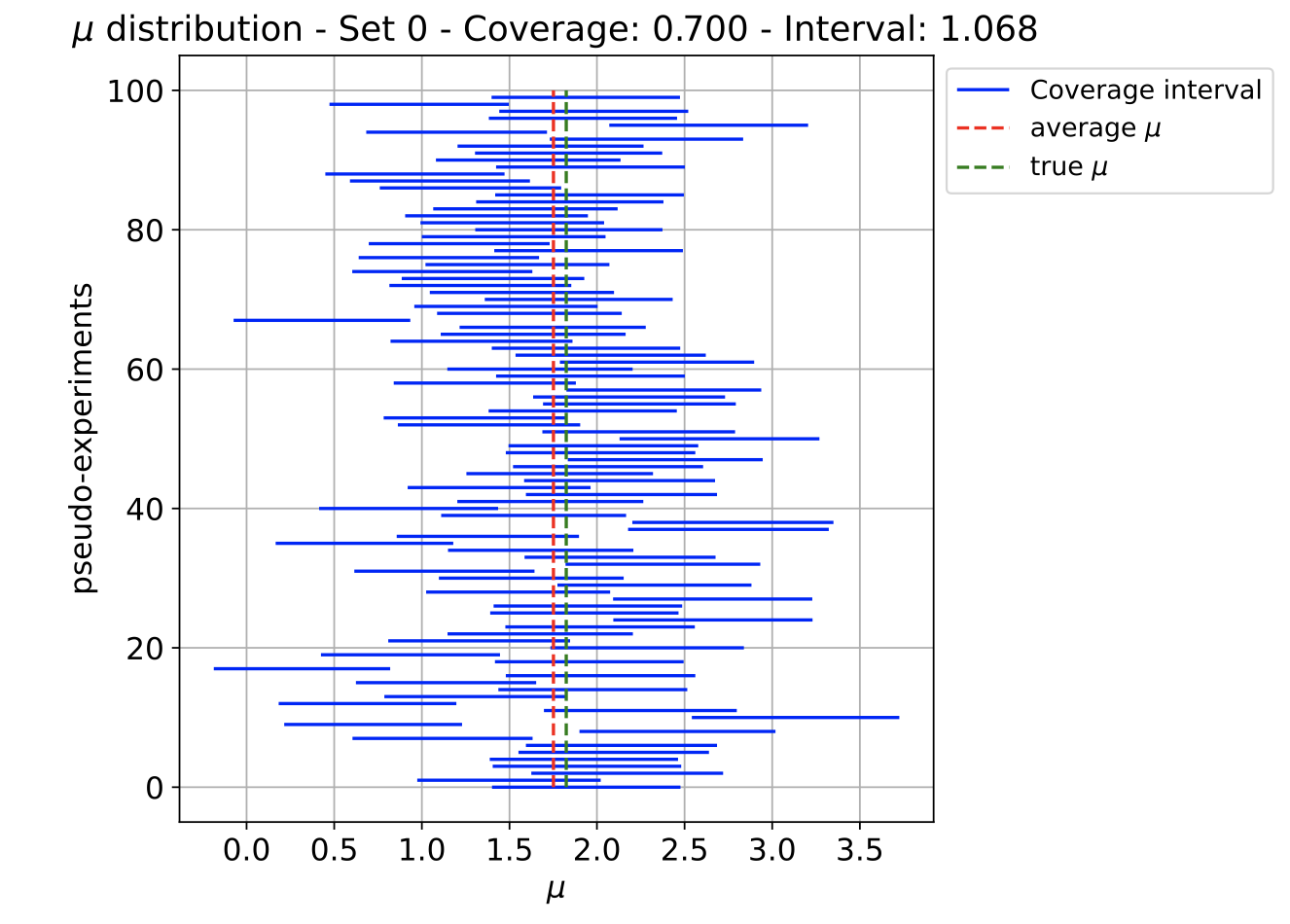

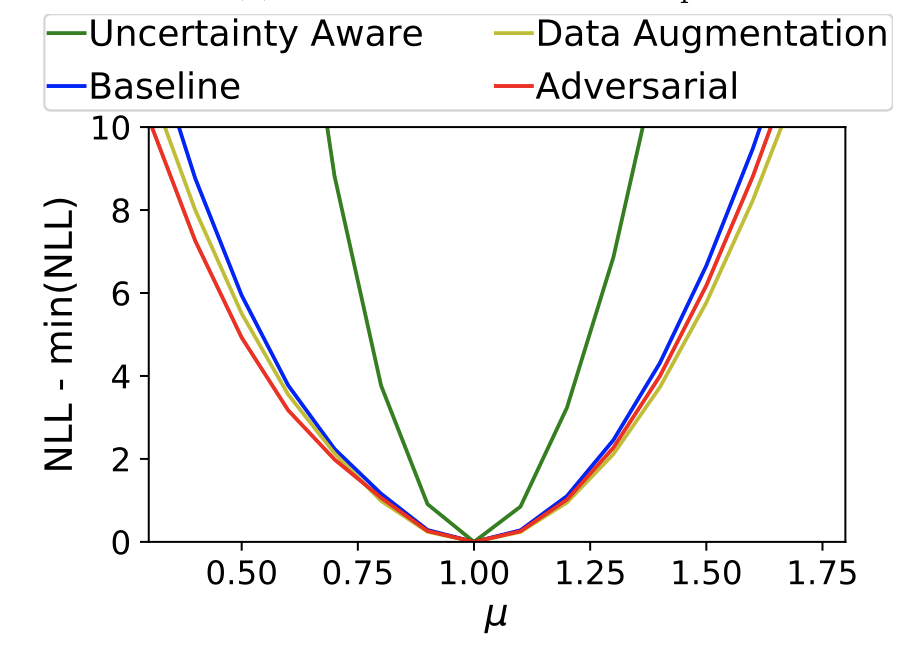

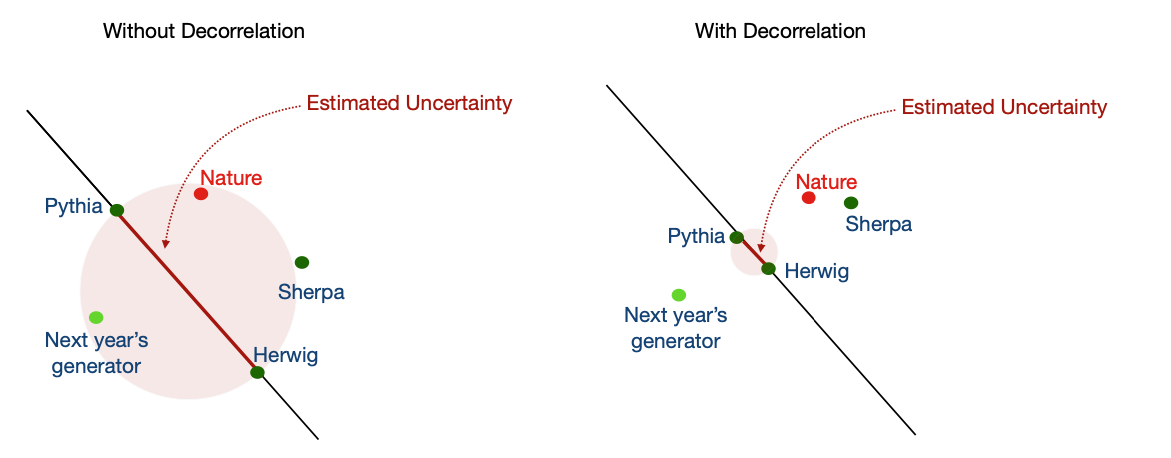

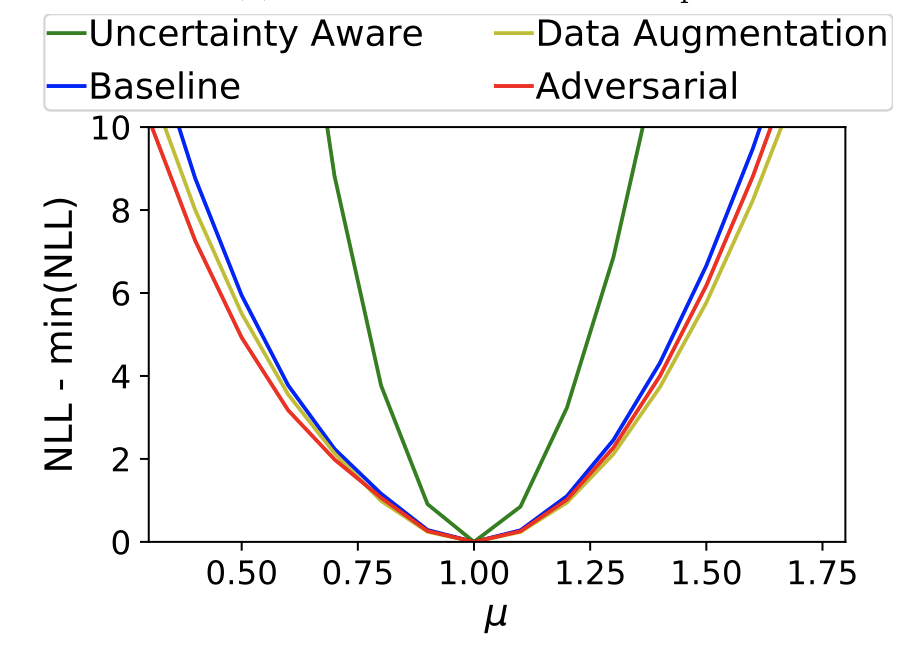

The FAIR Universe -- HiggsML Uncertainty Challenge focuses on measuring the physics properties of elementary particles with imperfect simulators due to differences in modelling systematic errors. Additionally, the challenge is leveraging a large-compute-scale AI platform for sharing datasets, training models, and hosting machine learning competitions. Our challenge brings together the physics and machine learning communities to advance our understanding and methodologies in handling systematic (epistemic) uncertainties within AI techniques.

author="{H. Zhu, K. Desai, M. Kuusela, V. Mikuni, B. Nachman, L. Wasserman}",

title="{Multidimensional Deconvolution with Profiling}",

eprint="2409.10421",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2024"}

In many experimental contexts, it is necessary to statistically remove the impact of instrumental effects in order to physically interpret measurements. This task has been extensively studied in particle physics, where the deconvolution task is called unfolding. A number of recent methods have shown how to perform high-dimensional, unbinned unfolding using machine learning. However, one of the assumptions in all of these methods is that the detector response is accurately modeled in the Monte Carlo simulation. In practice, the detector response depends on a number of nuisance parameters that can be constrained with data. We propose a new algorithm called Profile OmniFold (POF), which works in a similar iterative manner as the OmniFold (OF) algorithm while being able to simultaneously profile the nuisance parameters. We illustrate the method with a Gaussian example as a proof of concept highlighting its promising capabilities.

author="{M. Leigh, D. Sengupta, B. Nachman, T. Golling}",

title="{Accelerating template generation in resonant anomaly detection searches with optimal transport}",

eprint="2407.19818",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2024"}

We introduce Resonant Anomaly Detection with Optimal Transport (RAD-OT), a method for generating signal templates in resonant anomaly detection searches. RAD-OT leverages the fact that the conditional probability density of the target features varies approximately linearly along the optimal transport path connecting the resonant feature. This does not assume that the conditional density itself is linear with the resonant feature, allowing RAD-OT to efficiently capture multimodal relationships, changes in resolution, etc. By solving the optimal transport problem, RAD-OT can quickly build a template by interpolating between the background distributions in two sideband regions. We demonstrate the performance of RAD-OT using the LHC Olympics R&D dataset, where we find comparable sensitivity and improved stability with respect to deep learning-based approaches.
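The idea of interpolating between two sideband distributions along an optimal transport path can be sketched in one dimension, where the optimal transport map between equal-size samples reduces to matching sorted values. This is a toy under stated assumptions, not the RAD-OT implementation: the Gaussian sidebands, the function name `ot_interpolate_1d`, and the use of `t` as a normalized position between the sidebands are all hypothetical.

```python
import random


def ot_interpolate_1d(low, high, t):
    """Displacement interpolation between two equal-size 1D samples.

    In one dimension the optimal transport plan pairs the k-th smallest value
    of one sample with the k-th smallest value of the other, so the
    interpolated sample moves each point a fraction t along its pairing.
    """
    if len(low) != len(high):
        raise ValueError("this sketch assumes equal sample sizes")
    return [(1.0 - t) * a + t * b for a, b in zip(sorted(low), sorted(high))]


rng = random.Random(1)  # fixed seed so the toy is reproducible
sideband_low = [rng.gauss(0.0, 1.0) for _ in range(5000)]
sideband_high = [rng.gauss(4.0, 2.0) for _ in range(5000)]

# Template for a region halfway between the two sidebands.
template = ot_interpolate_1d(sideband_low, sideband_high, t=0.5)
```

For these two Gaussians the interpolated sample is itself approximately Gaussian, with mean and width halfway between the sidebands, rather than the bimodal mixture that a naive average of the two densities would give.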

author="{K. Desai, B. Nachman, J. Thaler}",

title="{Moment Unfolding}",

eprint="2407.11284",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2024"}

Deconvolving ("unfolding") detector distortions is a critical step in the comparison of cross section measurements with theoretical predictions in particle and nuclear physics. However, most existing approaches require histogram binning while many theoretical predictions are at the level of statistical moments. We develop a new approach to directly unfold distribution moments as a function of another observable without having to first discretize the data. Our Moment Unfolding technique uses machine learning and is inspired by Generative Adversarial Networks (GANs). We demonstrate the performance of this approach using jet substructure measurements in collider physics. With this illustrative example, we find that our Moment Unfolding protocol is more precise than bin-based approaches and is as or more precise than completely unbinned methods.

author="{E. Dreyer, E. Gross, D. Kobylianskii, V. Mikuni, B. Nachman, N. Soybelman}",

title="{Parnassus: An Automated Approach to Accurate, Precise, and Fast Detector Simulation and Reconstruction}",

eprint="2406.01620",

archivePrefix = "arXiv",

primaryClass = "physics.data-an",

year = "2024"}

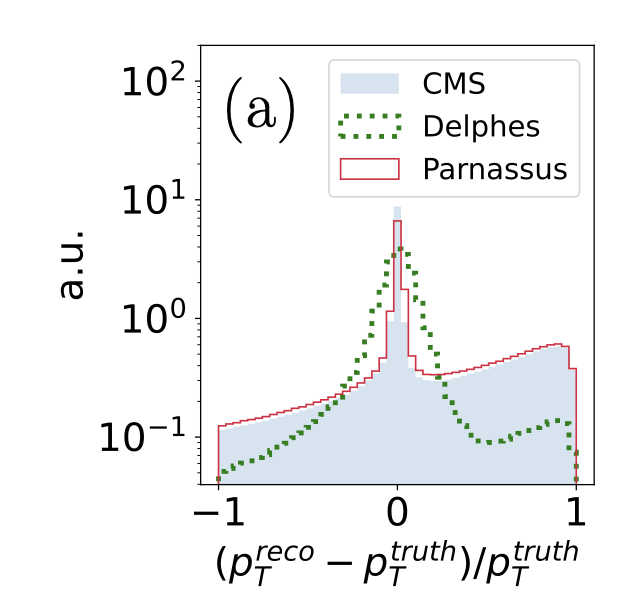

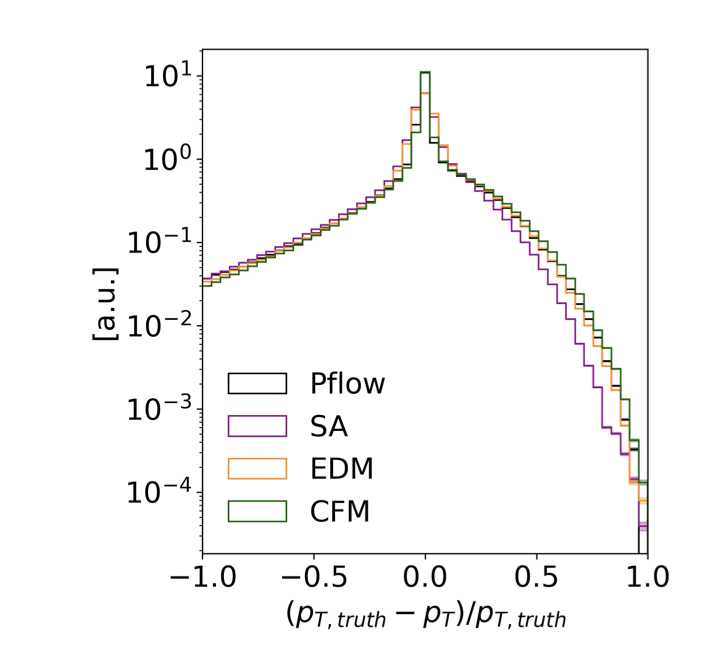

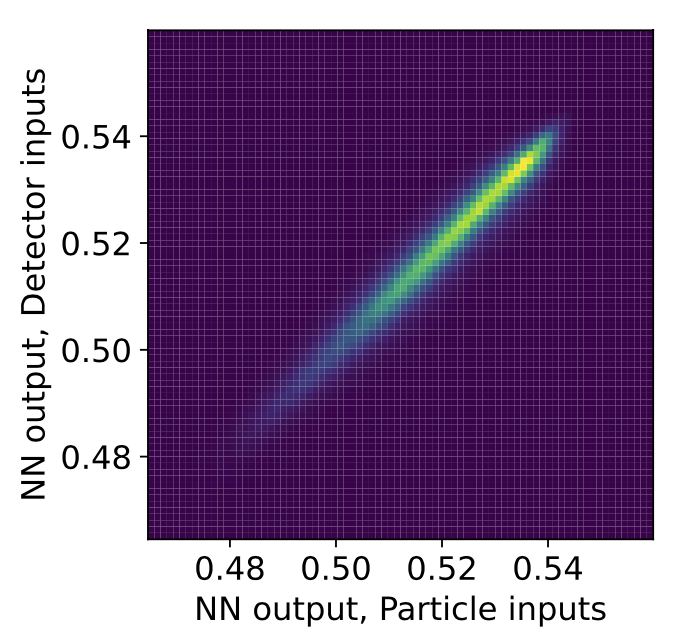

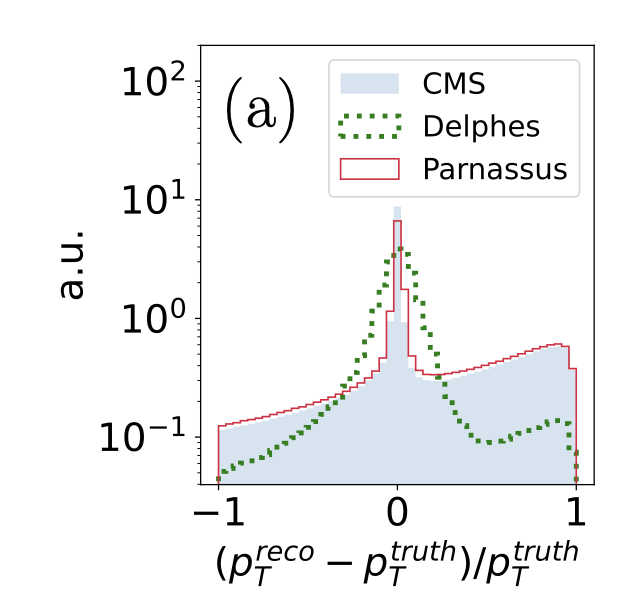

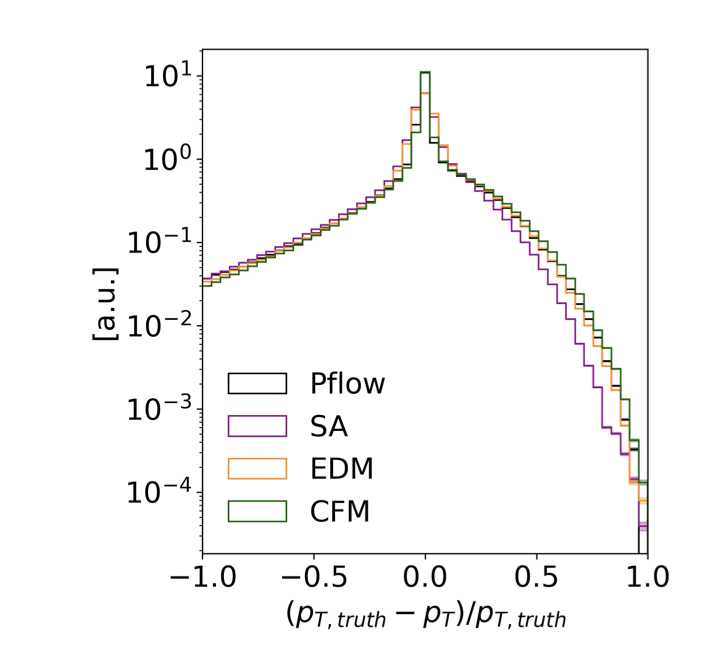

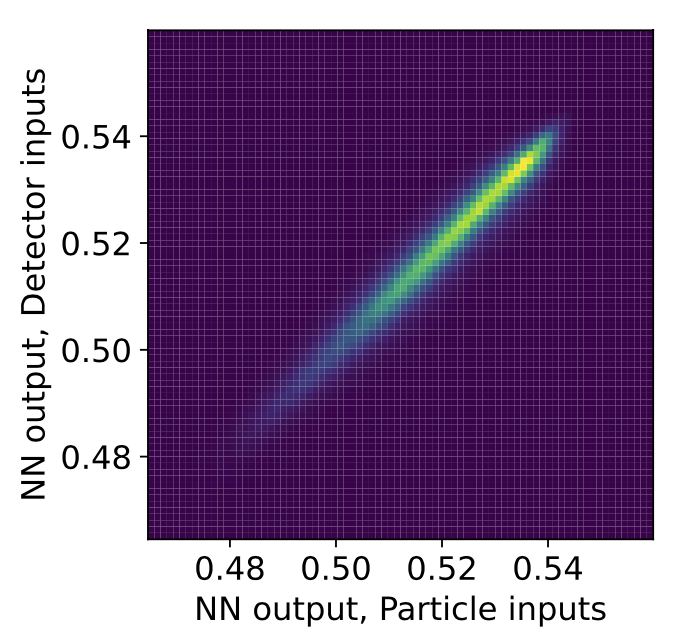

Detector simulation and reconstruction are a significant computational bottleneck in particle physics. We develop Particle-flow Neural Assisted Simulations (Parnassus) to address this challenge. Our deep learning model takes as input a point cloud (particles impinging on a detector) and produces a point cloud (reconstructed particles). By combining detector simulations and reconstruction into one step, we aim to minimize resource utilization and enable fast surrogate models suitable for application both inside and outside large collaborations. We demonstrate this approach using a publicly available dataset of jets passed through the full simulation and reconstruction pipeline of the CMS experiment. We show that Parnassus accurately mimics the CMS particle flow algorithm on the (statistically) same events it was trained on and can generalize to jet momentum and type outside of the training distribution.

author="{R. Mastandrea, B. Nachman, T. Plehn}",

title="{Constraining the Higgs potential with neural simulation-based inference for di-Higgs production}",

eprint="2405.15847",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2024"}

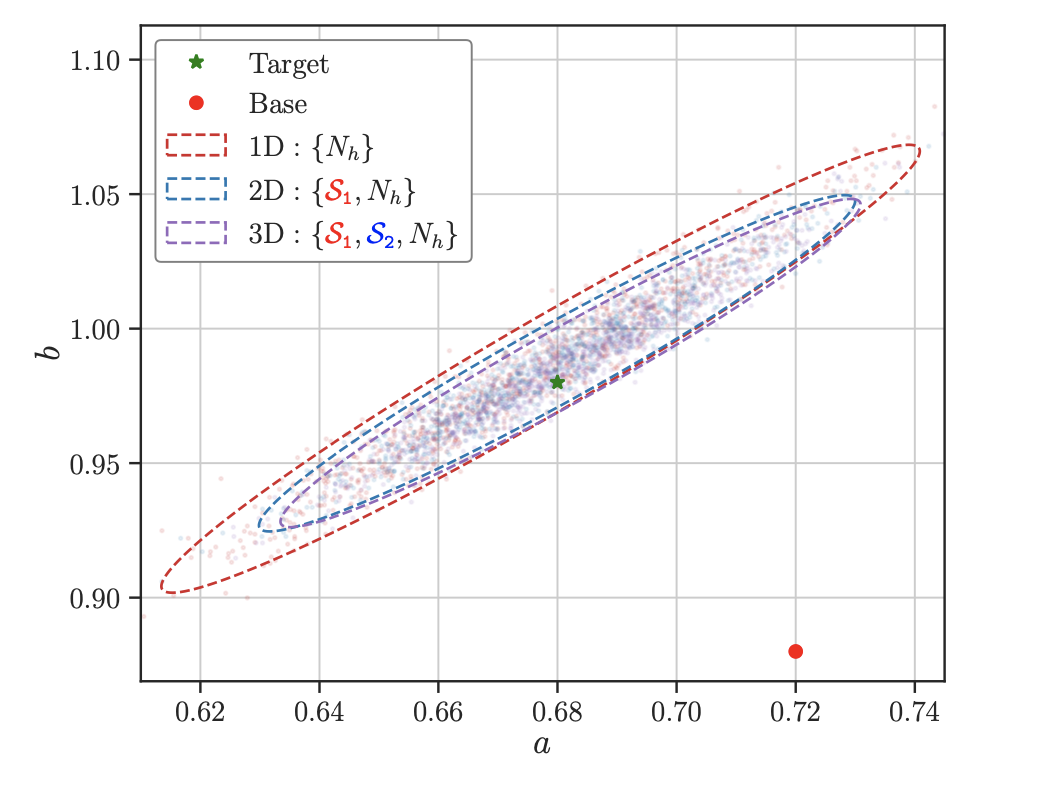

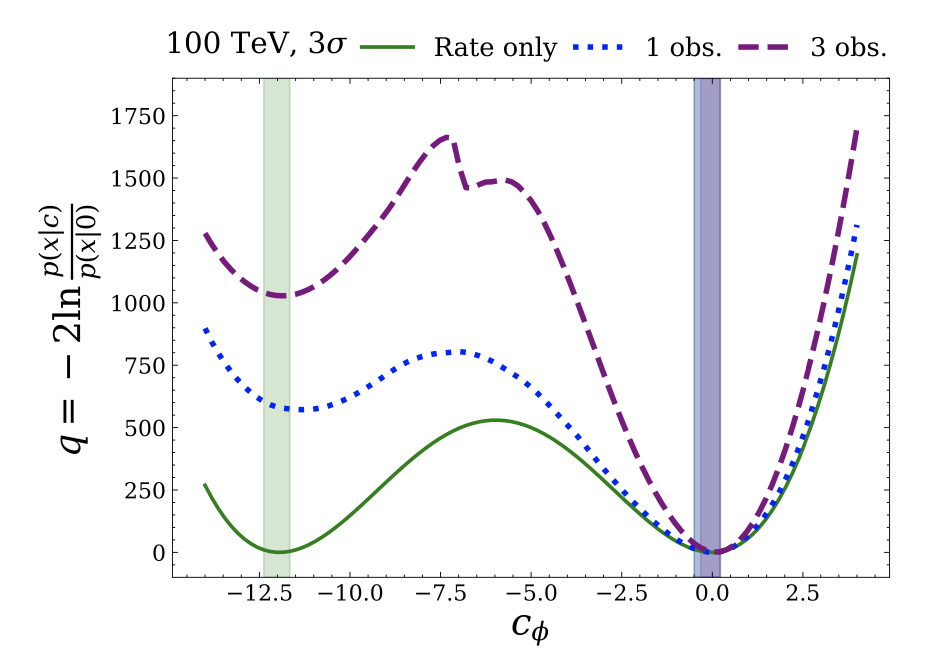

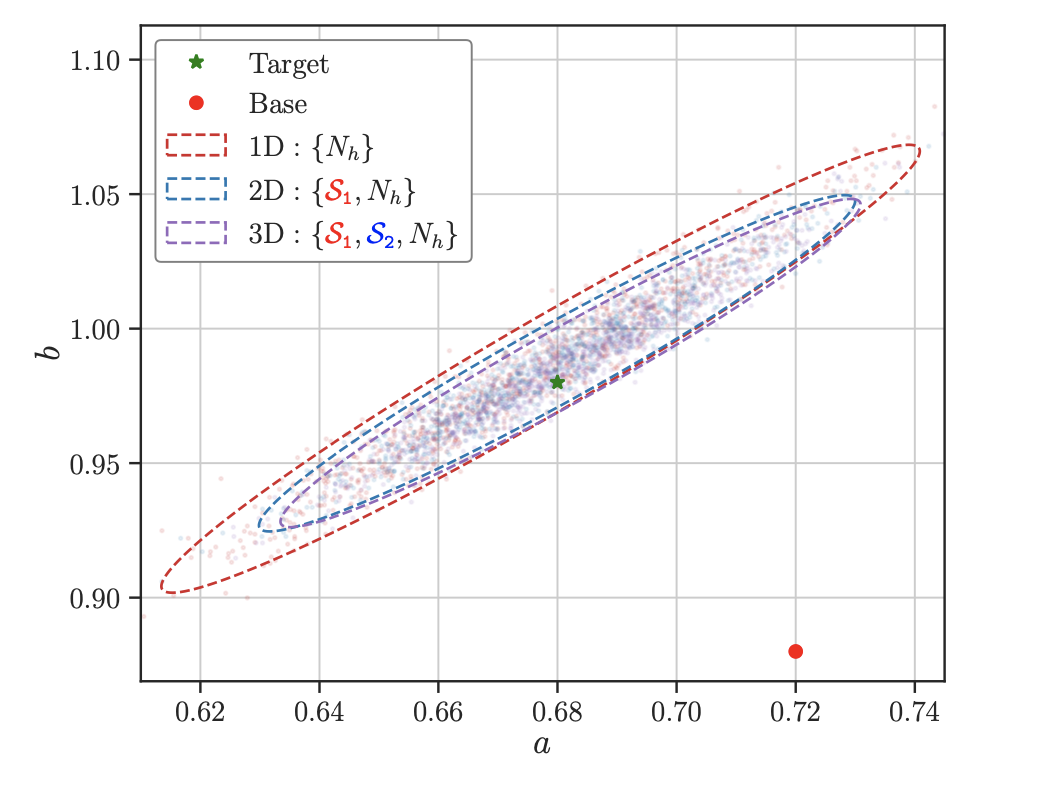

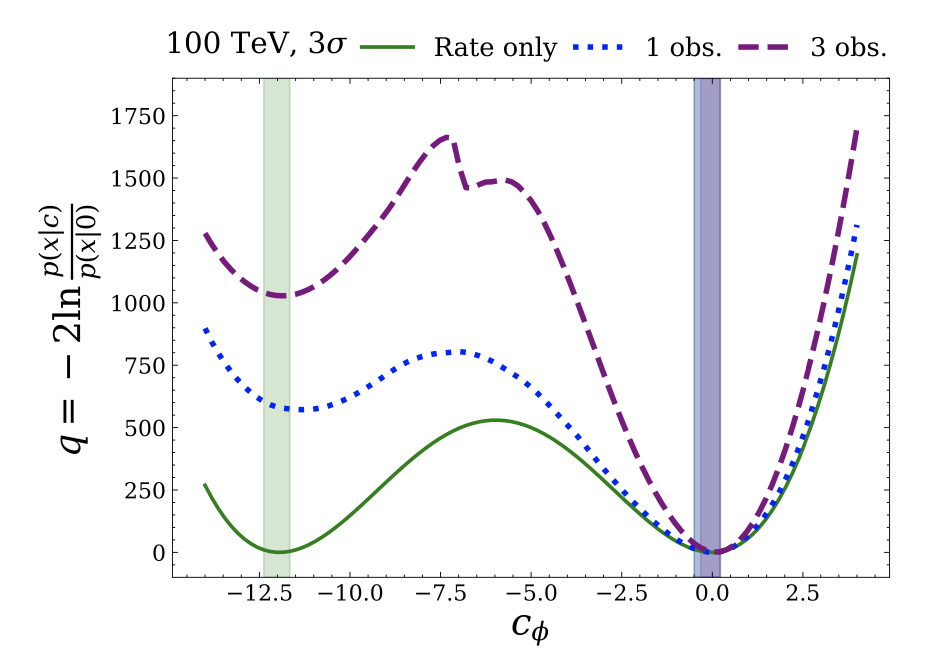

Determining the form of the Higgs potential is one of the most exciting challenges of modern particle physics. Higgs pair production directly probes the Higgs self-coupling and should be observed in the near future at the High-Luminosity LHC. We explore how to improve the sensitivity to physics beyond the Standard Model through per-event kinematics for di-Higgs events. In particular, we employ machine learning through simulation-based inference to estimate per-event likelihood ratios and gauge potential sensitivity gains from including this kinematic information. In terms of the Standard Model Effective Field Theory, we find that adding a limited number of observables can help to remove degeneracies in Wilson coefficient likelihoods and significantly improve the experimental sensitivity.

author="{D. Kobylianskii, N. Soybelman, N. Kakati, E. Dreyer, B. Nachman, E. Gross}",

title="{Advancing Set-Conditional Set Generation: Diffusion Models for Fast Simulation of Reconstructed Particles}",

eprint="2405.10106",

archivePrefix = "arXiv",

primaryClass = "hep-ex",

year = "2024"}

The computational intensity of detector simulation and event reconstruction poses a significant difficulty for data analysis in collider experiments. This challenge inspires the continued development of machine learning techniques to serve as efficient surrogate models. We propose a fast emulation approach that combines simulation and reconstruction. In other words, a neural network generates a set of reconstructed objects conditioned on input particle sets. To make this possible, we advance set-conditional set generation with diffusion models. Using a realistic, generic, and public detector simulation and reconstruction package (COCOA), we show how diffusion models can accurately model the complex spectrum of reconstructed particles inside jets.

author="{C. Cheng, G. Singh, B. Nachman}",

title="{Incorporating Physical Priors into Weakly-Supervised Anomaly Detection}",

eprint="2405.08889",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2024"}

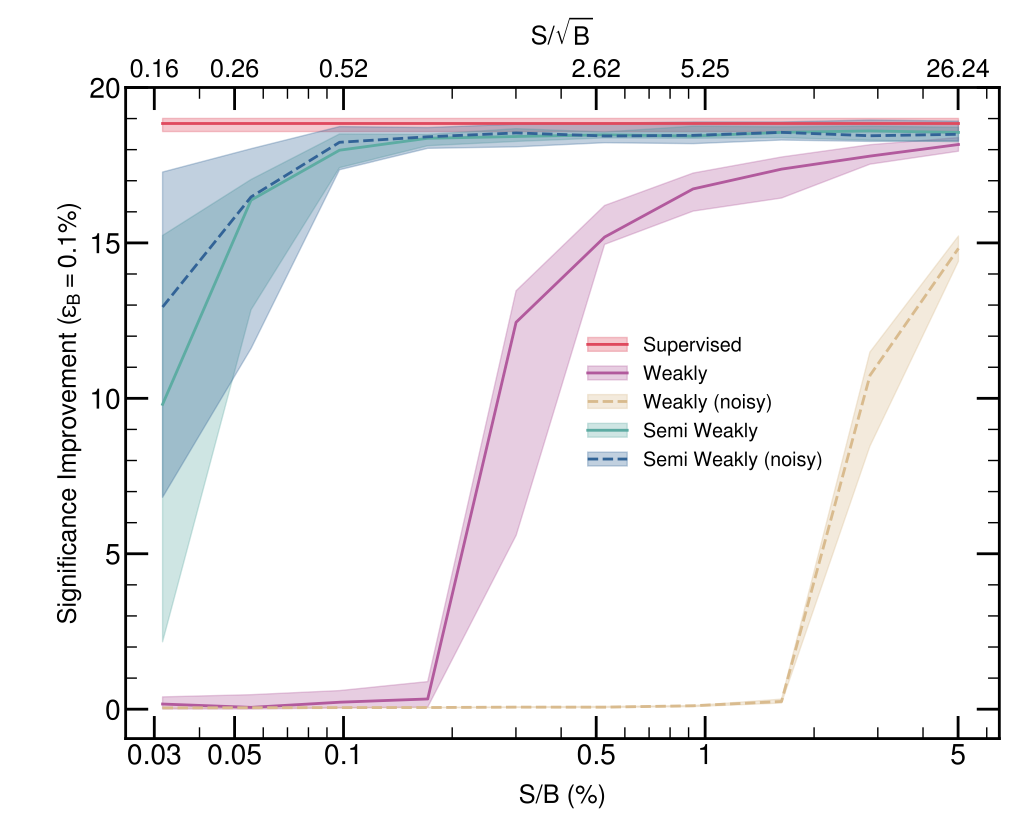

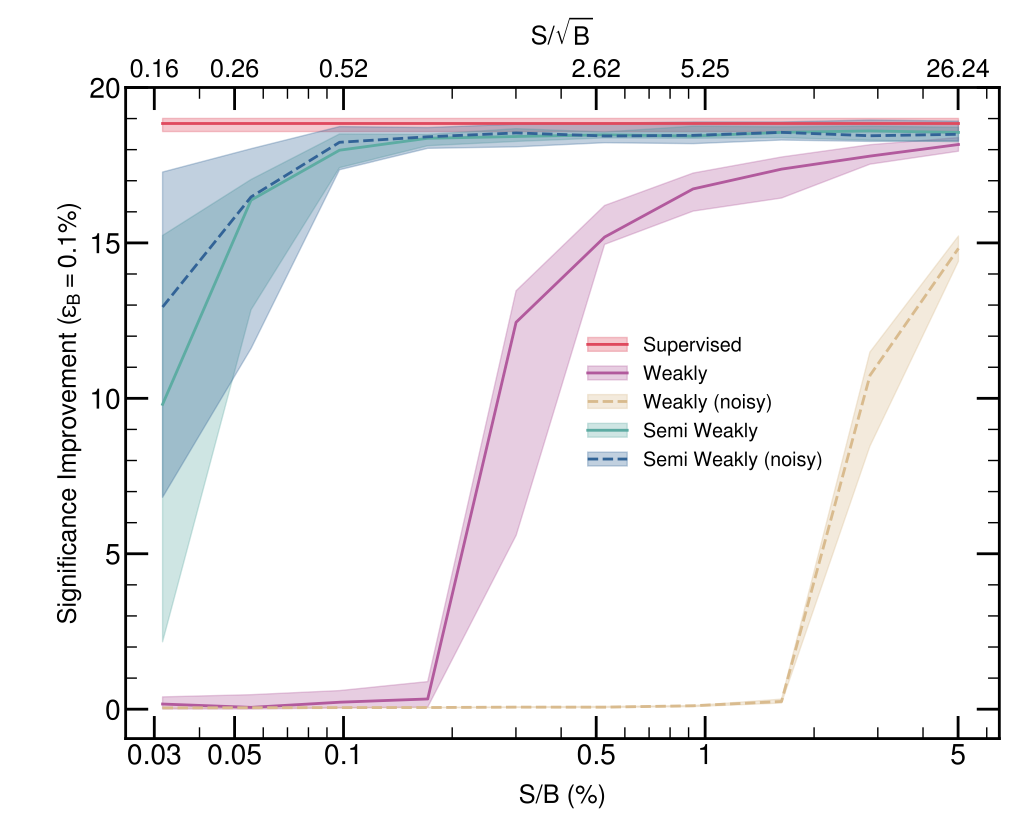

We propose a new machine-learning-based anomaly detection strategy for comparing data with a background-only reference (a form of weak supervision). The sensitivity of previous strategies degrades significantly when the signal is too rare or there are many unhelpful features. Our Prior-Assisted Weak Supervision (PAWS) method incorporates information from a class of signal models in order to significantly enhance the search sensitivity of weakly supervised approaches. As long as the true signal is in the pre-specified class, PAWS matches the sensitivity of a dedicated, fully supervised method without specifying the exact parameters ahead of time. On the benchmark LHC Olympics anomaly detection dataset, our mix of semi-supervised and weakly supervised learning is able to extend the sensitivity over previous methods by a factor of 10 in cross section. Furthermore, if we add irrelevant (noise) dimensions to the inputs, classical methods degrade by another factor of 10 in cross section while PAWS remains insensitive to noise. This new approach could be applied in a number of scenarios and pushes the frontier of sensitivity between completely model-agnostic approaches and fully model-specific searches.

author="{H. Du, C. Krause, V. Mikuni, B. Nachman, I. Pang, D. Shih}",

title="{Unifying Simulation and Inference with Normalizing Flows}",

eprint="2404.18992",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2024"}

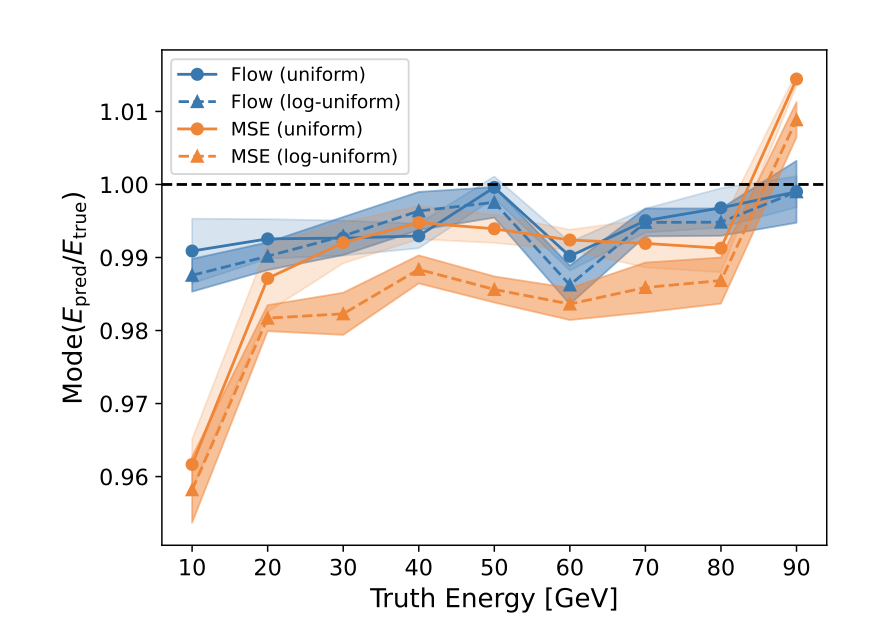

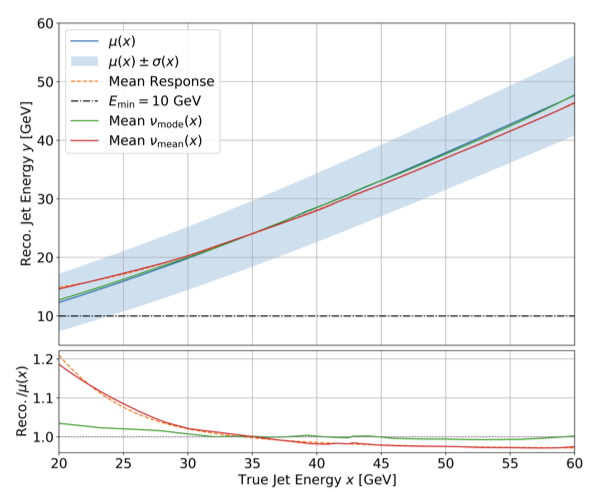

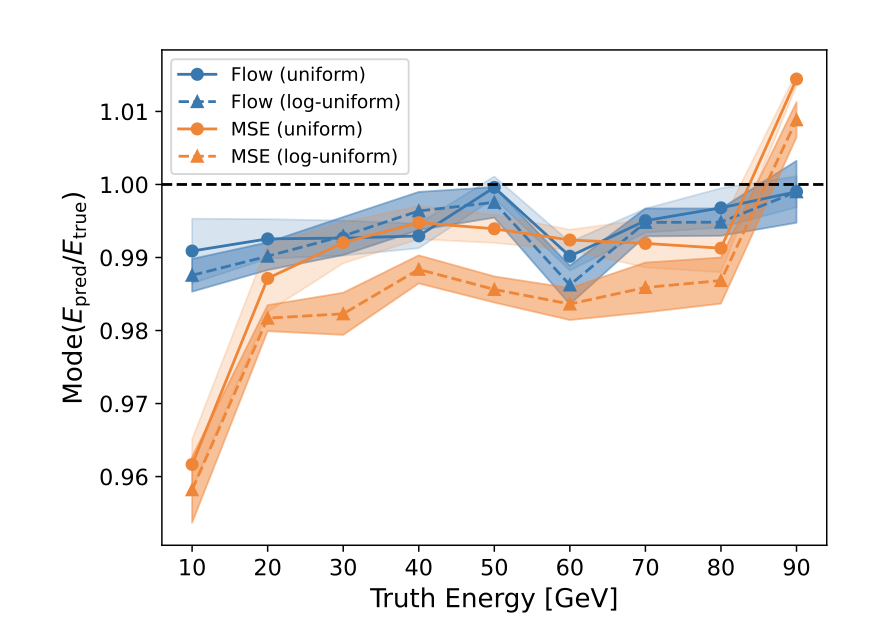

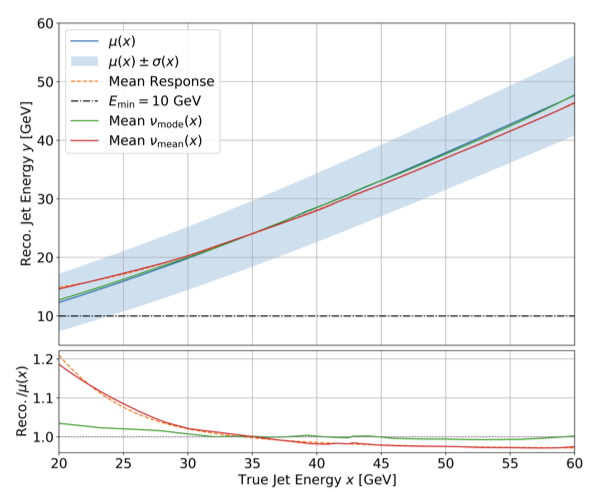

There have been many applications of deep neural networks to detector calibrations and a growing number of studies that propose deep generative models as automated fast detector simulators. We show that these two tasks can be unified by using maximum likelihood estimation (MLE) from conditional generative models for energy regression. Unlike direct regression techniques, the MLE approach is prior-independent and non-Gaussian resolutions can be determined from the shape of the likelihood near the maximum. Using an ATLAS-like calorimeter simulation, we demonstrate this concept in the context of calorimeter energy calibration.
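A minimal sketch of maximum likelihood energy regression from a conditional density is given here. A hand-written Gaussian response stands in for the conditional generative model; its scale (0.9) and stochastic term (0.5) are hypothetical numbers, as are the function names and the grid scan. The estimate maximizes `p(x | E)` over the true energy `E` for a fixed observation `x`, and the resolution is read off from where the log-likelihood falls by 0.5 from its maximum.

```python
import math


def log_response(x, energy):
    """log p(x | energy) for a toy calorimeter response (hypothetical numbers)."""
    mean = 0.9 * energy
    sigma = 0.5 * math.sqrt(energy)
    return (-0.5 * ((x - mean) / sigma) ** 2
            - math.log(sigma)
            - 0.5 * math.log(2.0 * math.pi))


def mle_energy(x, grid):
    """Energy on the grid that maximizes the likelihood of the observed x."""
    return max(grid, key=lambda energy: log_response(x, energy))


def likelihood_interval(x, grid, e_hat):
    """Energies whose log-likelihood is within 0.5 of the maximum."""
    best = log_response(x, e_hat)
    inside = [e for e in grid if log_response(x, e) >= best - 0.5]
    return min(inside), max(inside)


grid = [50.0 + 0.01 * i for i in range(10001)]  # scan 50 to 150 in steps of 0.01
x_observed = 90.0

e_hat = mle_energy(x_observed, grid)
e_low, e_high = likelihood_interval(x_observed, grid, e_hat)
```

No prior over `E` enters the estimate, and the interval is not forced to be symmetric around `e_hat`, which is how a non-Gaussian resolution would show up in the shape of the likelihood.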

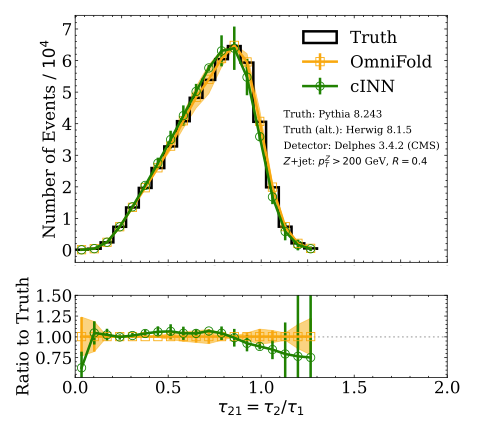

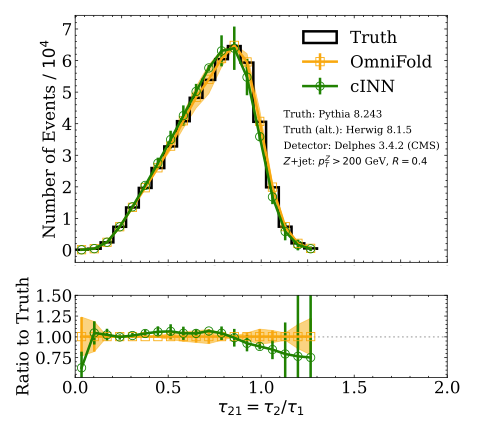

author="{N. Huetsch, J. Villadamigo, A. Shmakov, S. Diefenbacher, V. Mikuni, T. Heimel, M. Fenton, K. Greif, B. Nachman, D. Whiteson, A. Butter, T. Plehn}",

title="{The Landscape of Unfolding with Machine Learning}",

eprint="2404.18807",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2024"}

Recent innovations from machine learning allow for data unfolding without binning and including correlations across many dimensions. We describe a set of known, upgraded, and new methods for ML-based unfolding. The performance of these approaches is evaluated on the same two datasets. We find that all techniques are capable of accurately reproducing the particle-level spectra across complex observables. Given that these approaches are conceptually diverse, they offer an exciting toolkit for a new class of measurements that can probe the Standard Model with an unprecedented level of detail and may enable sensitivity to new phenomena.

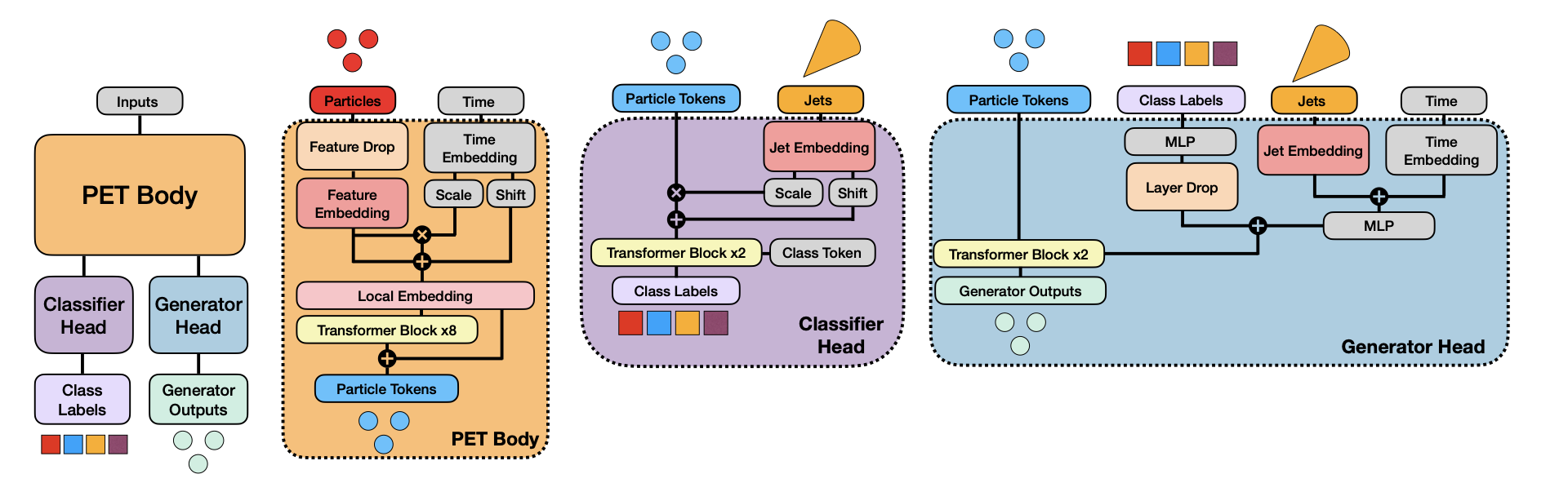

author="{V. Mikuni, B. Nachman}",

title="{OmniLearn: A Method to Simultaneously Facilitate All Jet Physics Tasks}",

eprint="2404.16091",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2024"}

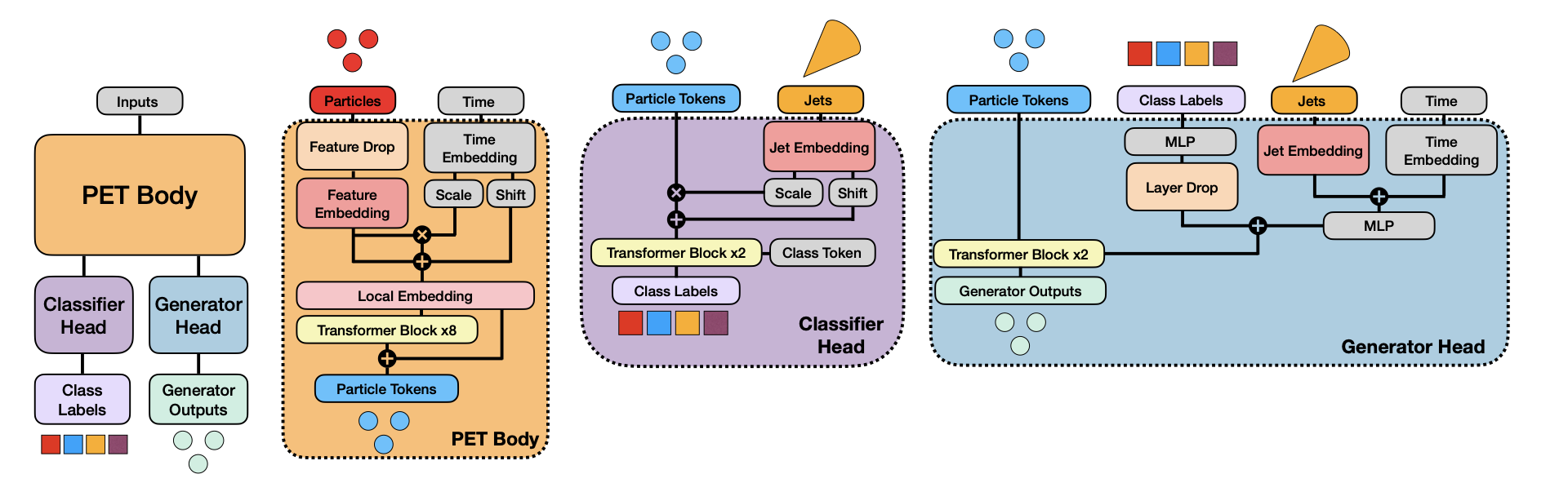

Machine learning has become an essential tool in jet physics. Due to their complex, high-dimensional nature, jets can be explored holistically by neural networks in ways that are not possible manually. However, innovations in all areas of jet physics are proceeding in parallel. We show that specially constructed machine learning models trained for a specific jet classification task can improve the accuracy, precision, or speed of all other jet physics tasks. This is demonstrated by training on a particular multiclass classification task and then using the learned representation for different classification tasks, for datasets with a different (full) detector simulation, for jets from a different collision system ($pp$ versus $ep$), for generative models, for likelihood ratio estimation, and for anomaly detection. Our OmniLearn approach is thus a foundation model and is made publicly available for use in any area where state-of-the-art precision is required for analyses involving jets and their substructure.

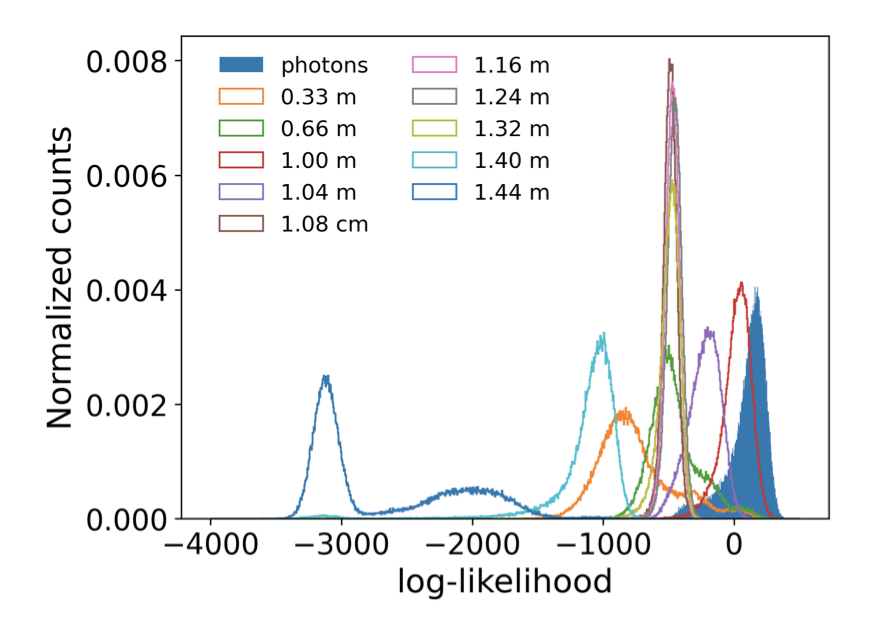

author="{C. Krause, B. Nachman, I. Pang, D. Shih, Y. Zhu}",

title="{Anomaly detection with flow-based fast calorimeter simulators}",

eprint="2312.11618",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

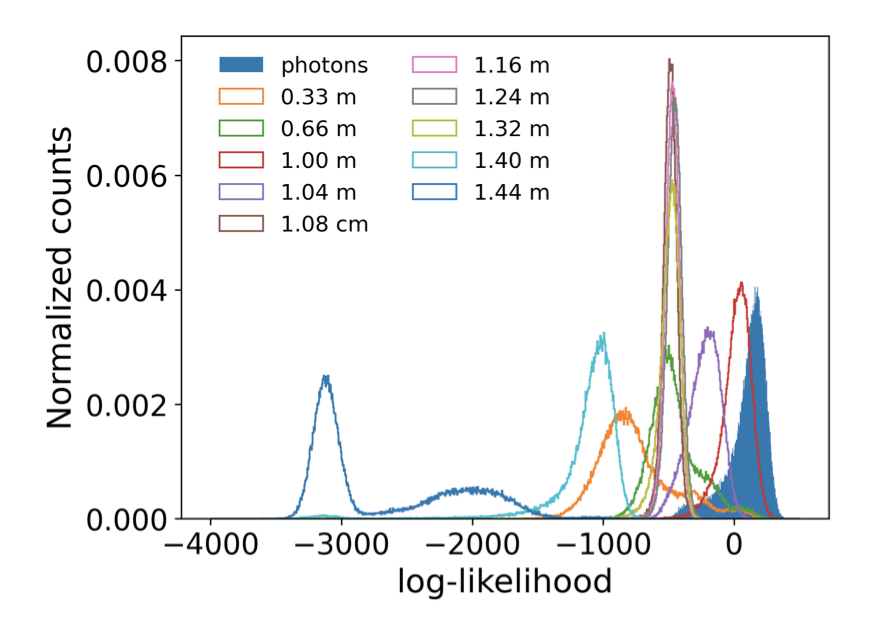

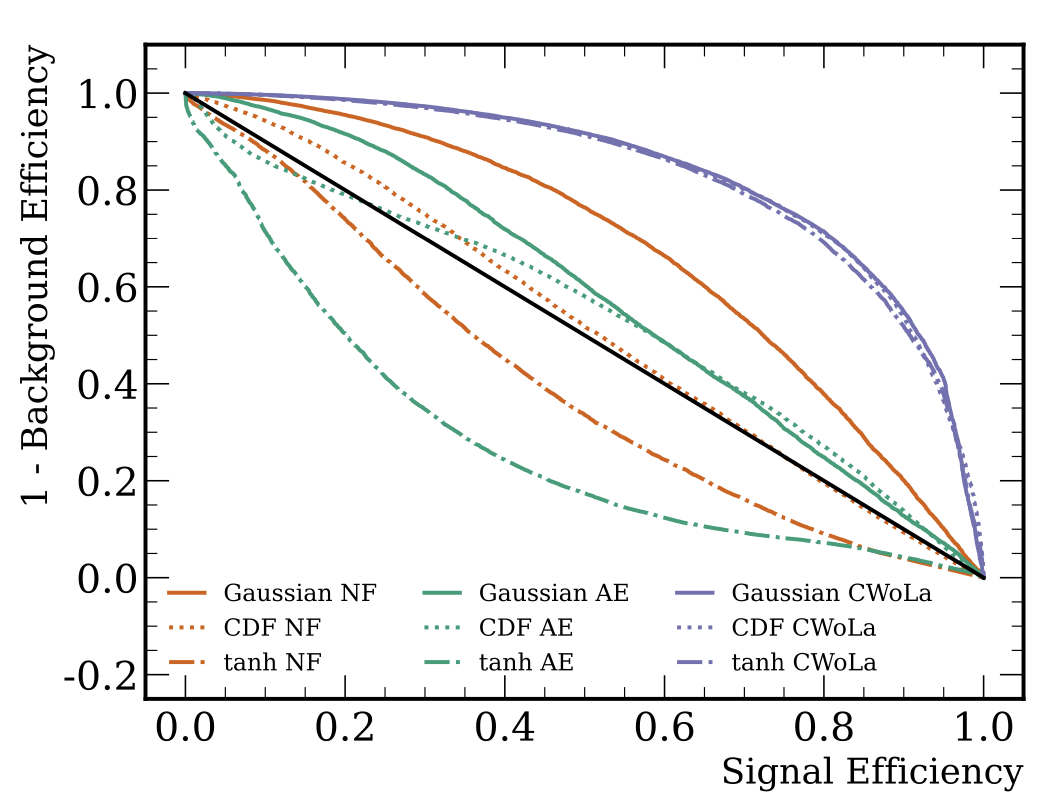

Recently, several normalizing flow-based deep generative models have been proposed to accelerate the simulation of calorimeter showers. Using CaloFlow as an example, we show that these models can simultaneously perform unsupervised anomaly detection with no additional training cost. As a demonstration, we consider electromagnetic showers initiated by one (background) or multiple (signal) photons. The CaloFlow model is designed to generate single photon showers, but it also provides access to the shower likelihood. We use this likelihood as an anomaly score and study the showers tagged as being unlikely. As expected, the tagger struggles when the signal photons are nearly collinear, but is otherwise effective. This approach is complementary to a supervised classifier trained on only specific signal models using the same low-level calorimeter inputs. While the supervised classifier is also highly effective at unseen signal models, the unsupervised method is more sensitive in certain regions and thus we expect that the ultimate performance will require a combination of approaches.
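Using a generative model's likelihood as an anomaly score can be sketched with a stand-in density. Here a one-dimensional Gaussian fitted to background values plays the role of the flow; this is an assumption made for brevity and is not CaloFlow. The feature values, the 99% threshold, and the function names are likewise hypothetical.

```python
import math
import random


def fit_gaussian(values):
    """Fit the stand-in density: sample mean and standard deviation."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, math.sqrt(variance)


def anomaly_score(x, mean, std):
    """Negative log-likelihood under the fitted density; larger means less likely."""
    return (0.5 * ((x - mean) / std) ** 2
            + math.log(std)
            + 0.5 * math.log(2.0 * math.pi))


rng = random.Random(2)  # fixed seed so the toy is reproducible
background = [rng.gauss(10.0, 1.0) for _ in range(10_000)]
mean, std = fit_gaussian(background)

# Tag the least likely 1% of background-like events as anomalous.
background_scores = sorted(anomaly_score(x, mean, std) for x in background)
threshold = background_scores[int(0.99 * len(background_scores))]

score_typical = anomaly_score(10.2, mean, std)   # close to the bulk of the background
score_unusual = anomaly_score(15.0, mean, std)   # far in the tail
```

The density is trained on background only, so the score costs nothing beyond evaluating a likelihood the simulator already provides.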

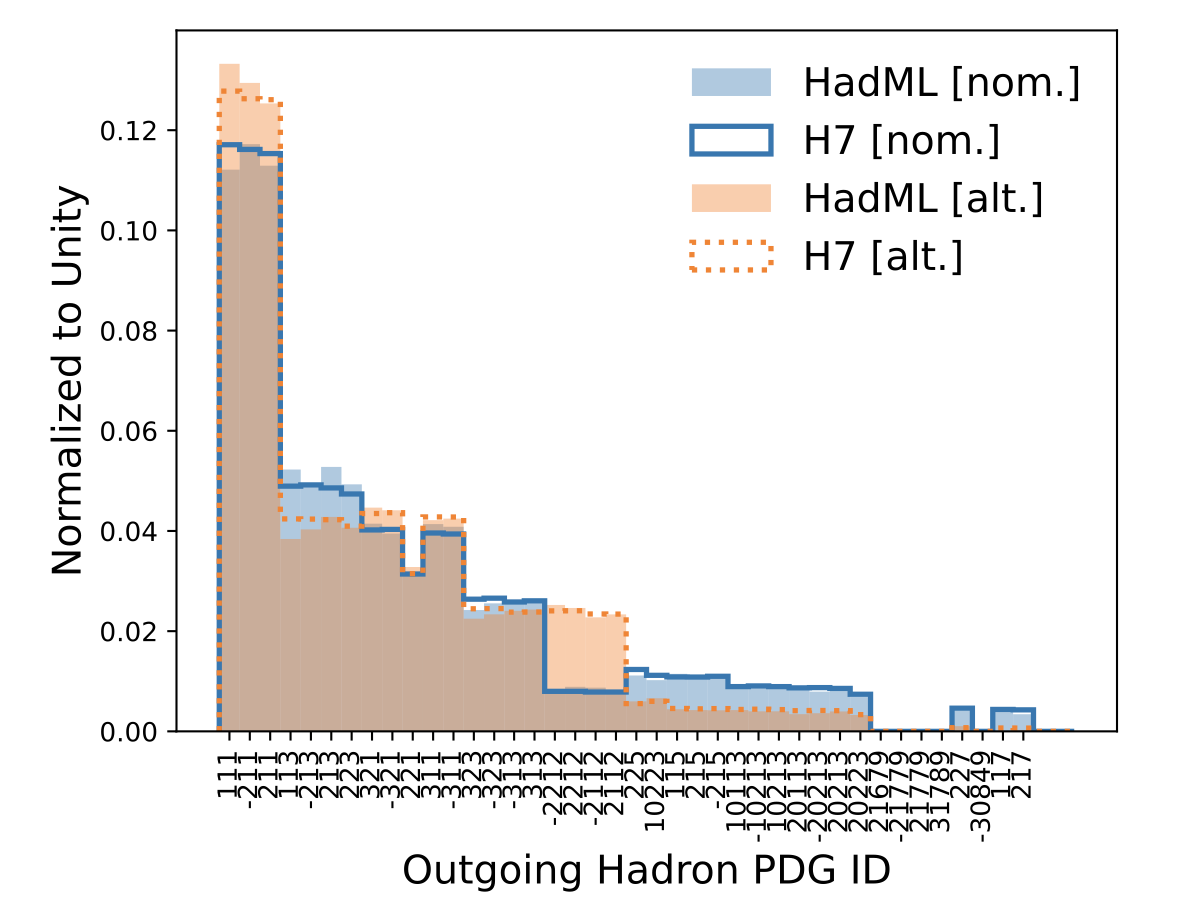

author="{J. Chan, X. Ju, A. Kania, B. Nachman, V. Sangli, A. Siodmok}",

title="{Integrating Particle Flavor into Deep Learning Models for Hadronization}",

eprint="2312.08453",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

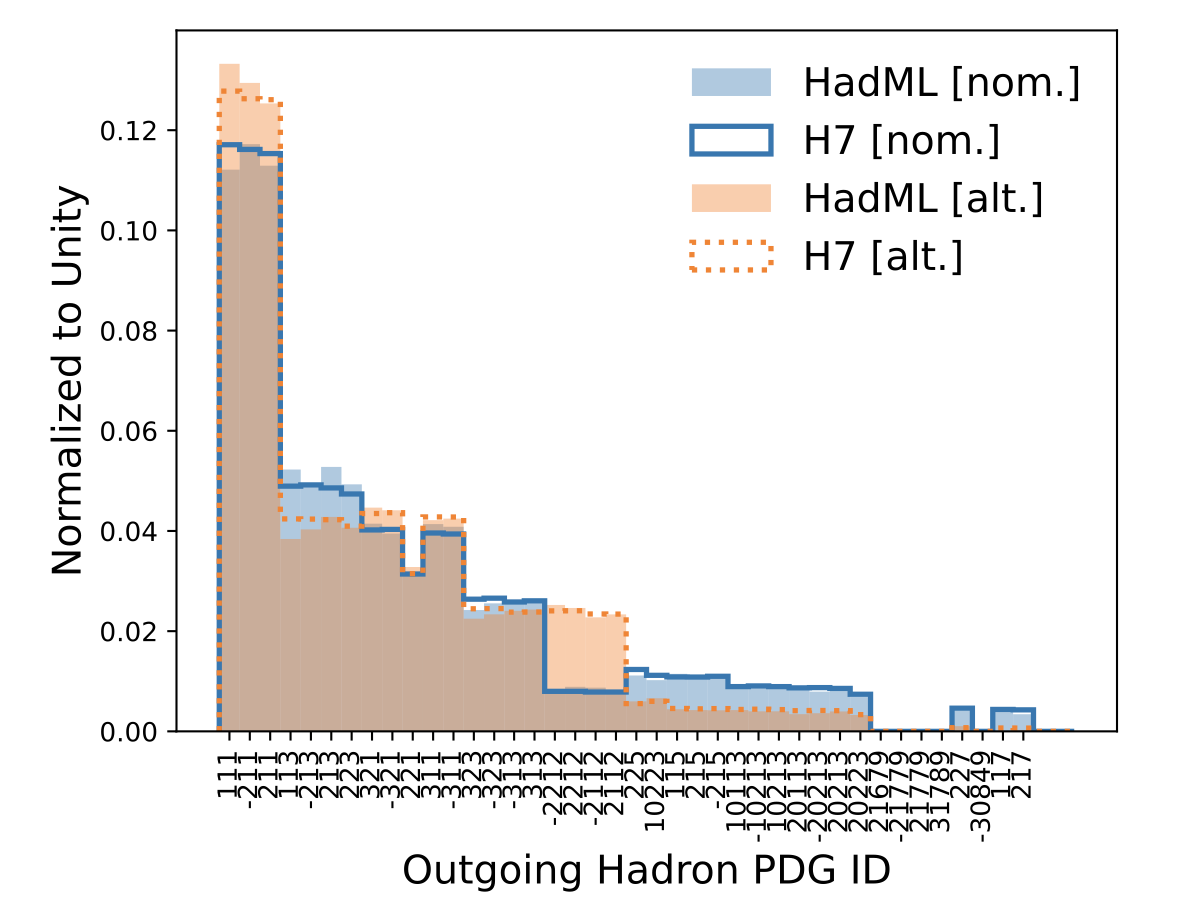

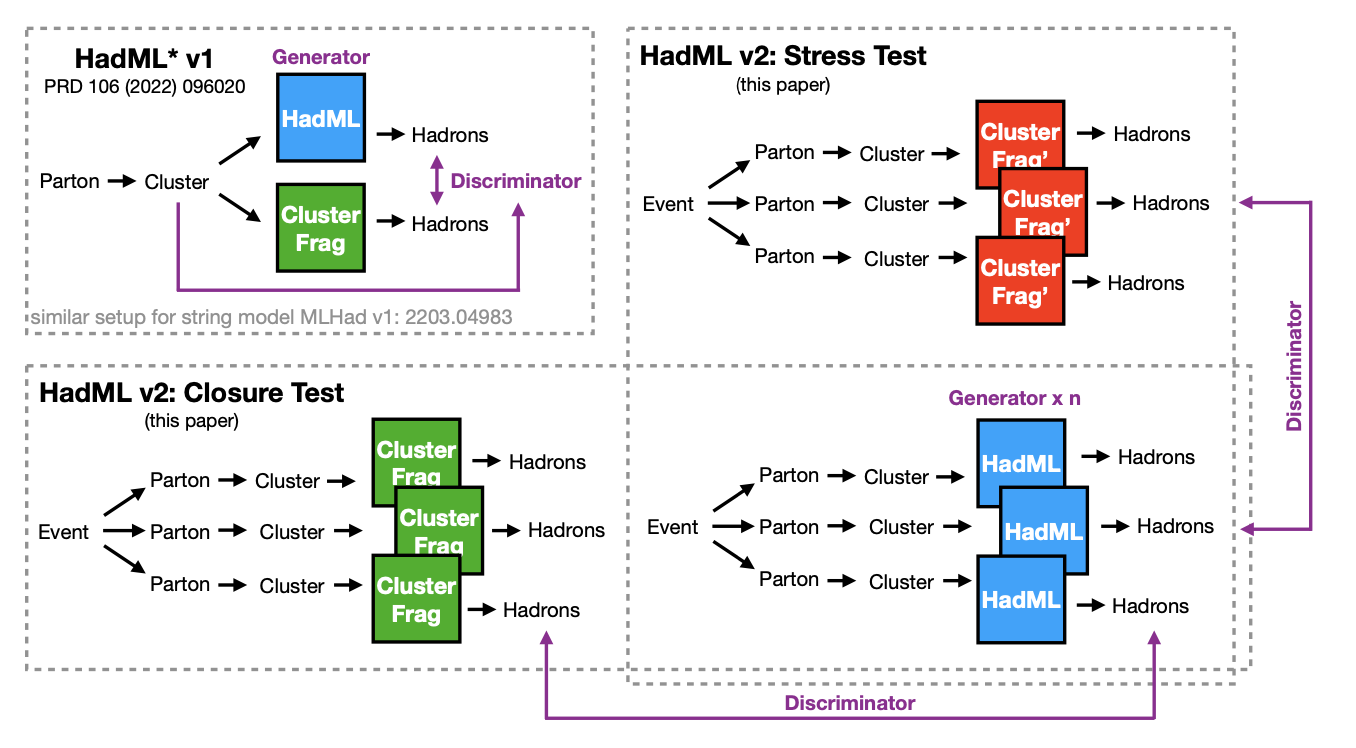

Hadronization models used in event generators are physics-inspired functions with many tunable parameters. Since we do not understand hadronization from first principles, there have been multiple proposals to improve the accuracy of hadronization models by utilizing more flexible parameterizations based on neural networks. These recent proposals have focused on the kinematic properties of hadrons, but a full model must also include particle flavor. In this paper, we show how to build a deep learning-based hadronization model that includes both kinematic (continuous) and flavor (discrete) degrees of freedom. Our approach is based on Generative Adversarial Networks and we show the performance within the context of the cluster hadronization model within the Herwig event generator.

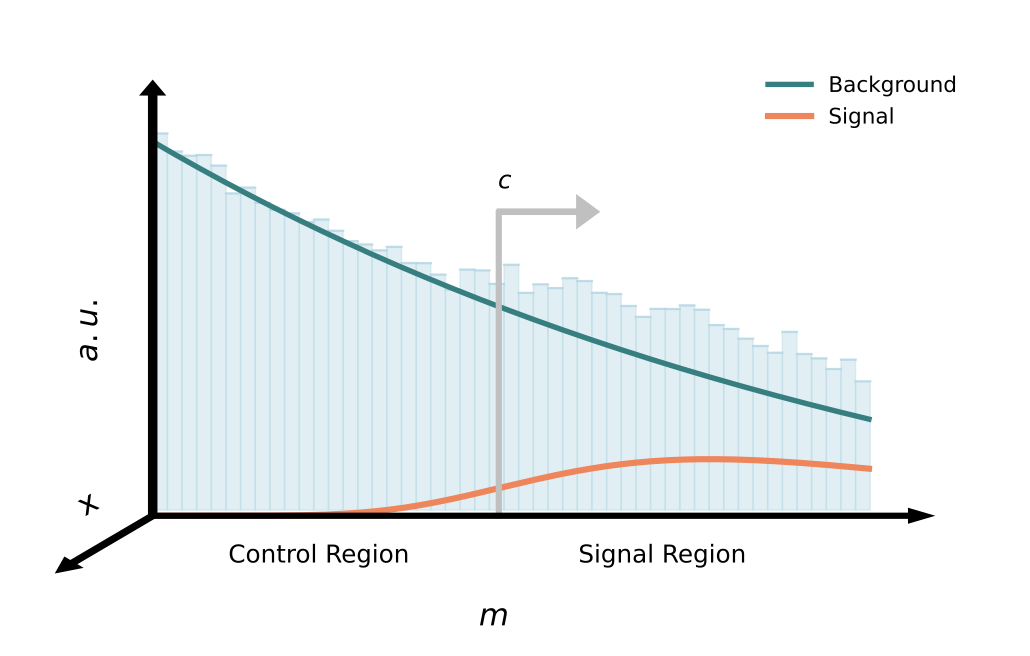

author="{K. Bai, R. Mastandrea, B. Nachman}",

title="{Non-resonant Anomaly Detection with Background Extrapolation}",

eprint="2311.12924",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

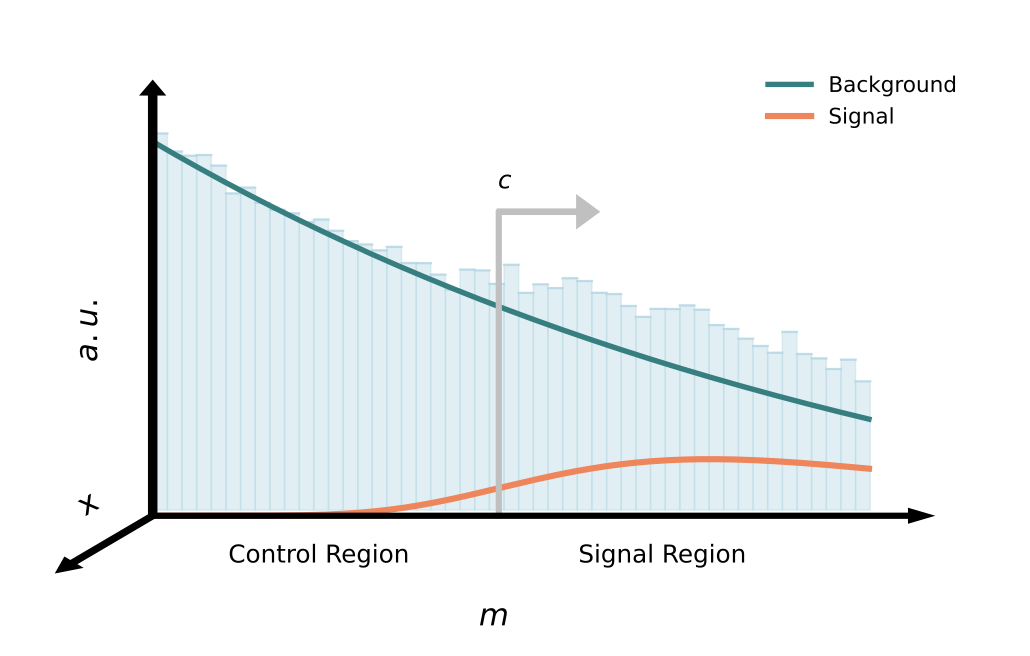

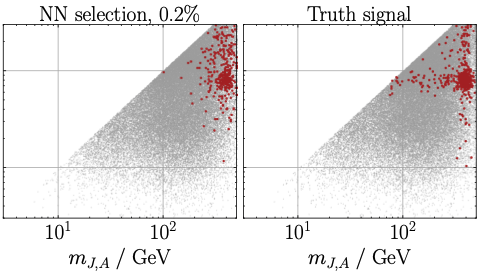

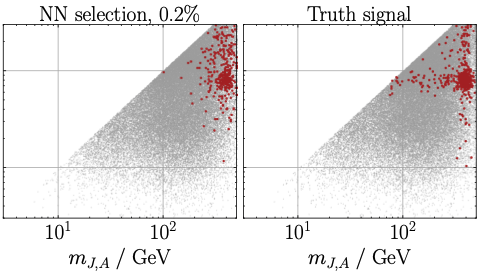

Complete anomaly detection strategies that are both signal sensitive and compatible with background estimation have largely focused on resonant signals. Non-resonant new physics scenarios are relatively under-explored and may arise from off-shell effects or final states with significant missing energy. In this paper, we extend a class of weakly supervised anomaly detection strategies developed for resonant physics to the non-resonant case. Machine learning models are trained to reweight, generate, or morph the background, extrapolated from a control region. A classifier is then trained in a signal region to distinguish the estimated background from the data. The new methods are demonstrated using a semi-visible jet signature as a benchmark signal model, and are shown to automatically identify the anomalous events without specifying the signal ahead of time.

author="{S. Bright-Thonney, B. Nachman, J. Thaler}",

title="{Safe but Incalculable: Energy-weighting is not all you need}",

eprint="2311.07652",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

Infrared and collinear (IRC) safety has long been used as a proxy for robustness when developing new jet substructure observables. This guiding philosophy has been carried into the deep learning era, where IRC-safe neural networks have been used for many jet studies. For graph-based neural networks, the most straightforward way to achieve IRC safety is to weight particle inputs by their energies. However, energy-weighting by itself does not guarantee that perturbative calculations of machine-learned observables will enjoy small non-perturbative corrections. In this paper, we demonstrate the sensitivity of IRC-safe networks to non-perturbative effects, by training an energy flow network (EFN) to maximize its sensitivity to hadronization. We then show how to construct Lipschitz Energy Flow Networks (L-EFNs), which are both IRC safe and relatively insensitive to non-perturbative corrections. We demonstrate the performance of L-EFNs on generated samples of quark and gluon jets, and showcase fascinating differences between the learned latent representations of EFNs and L-EFNs.
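The statement that energy-weighting yields IRC safety can be checked numerically on a toy. The sketch below assumes a fixed, hand-written per-particle map `phi` in place of a trained network, and represents each particle by a hypothetical `(energy_fraction, angle)` pair. It shows only that the energy-weighted sum is unchanged by an exactly collinear splitting and nearly unchanged by a very soft emission.

```python
import math


def phi(angle):
    """Hypothetical per-particle map; in an EFN this would be a neural network."""
    return (math.cos(angle), math.sin(angle), angle ** 2)


def energy_weighted_latent(particles):
    """Sum of z_i * phi(angle_i) over particles given as (energy_fraction, angle)."""
    latent = [0.0, 0.0, 0.0]
    for z, angle in particles:
        for k, value in enumerate(phi(angle)):
            latent[k] += z * value
    return latent


jet = [(0.5, 0.1), (0.3, -0.2), (0.2, 0.4)]

# Collinear splitting: the first particle becomes two particles at the same angle.
collinear_split = [(0.2, 0.1), (0.3, 0.1), (0.3, -0.2), (0.2, 0.4)]

# Soft emission: one extra particle carrying a negligible energy fraction.
soft_emission = jet + [(1e-9, 1.3)]

latent_jet = energy_weighted_latent(jet)
latent_split = energy_weighted_latent(collinear_split)
latent_soft = energy_weighted_latent(soft_emission)
```

This invariance is the IRC-safety property only. As the abstract notes, it does not by itself limit the size of non-perturbative corrections, which is the motivation for the Lipschitz constraint.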

author="{O. Long, B. Nachman}",

title="{Designing Observables for Measurements with Deep Learning}",

eprint="2310.08717",

archivePrefix = "arXiv",

primaryClass = "physics.data-an",

year = "2023"}

Many analyses in particle and nuclear physics use simulations to infer fundamental, effective, or phenomenological parameters of the underlying physics models. When the inference is performed with unfolded cross sections, the observables are designed using physics intuition and heuristics. We propose to design optimal observables with machine learning. Unfolded, differential cross sections in a neural network output contain the most information about parameters of interest and can be well-measured by construction. We demonstrate this idea using two physics models for inclusive measurements in deep inelastic scattering.

author="{E. Buhmann, C. Ewen, G. Kasieczka, V. Mikuni, B. Nachman, D. Shih}",

title="{Full Phase Space Resonant Anomaly Detection}",

eprint="2310.06897",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

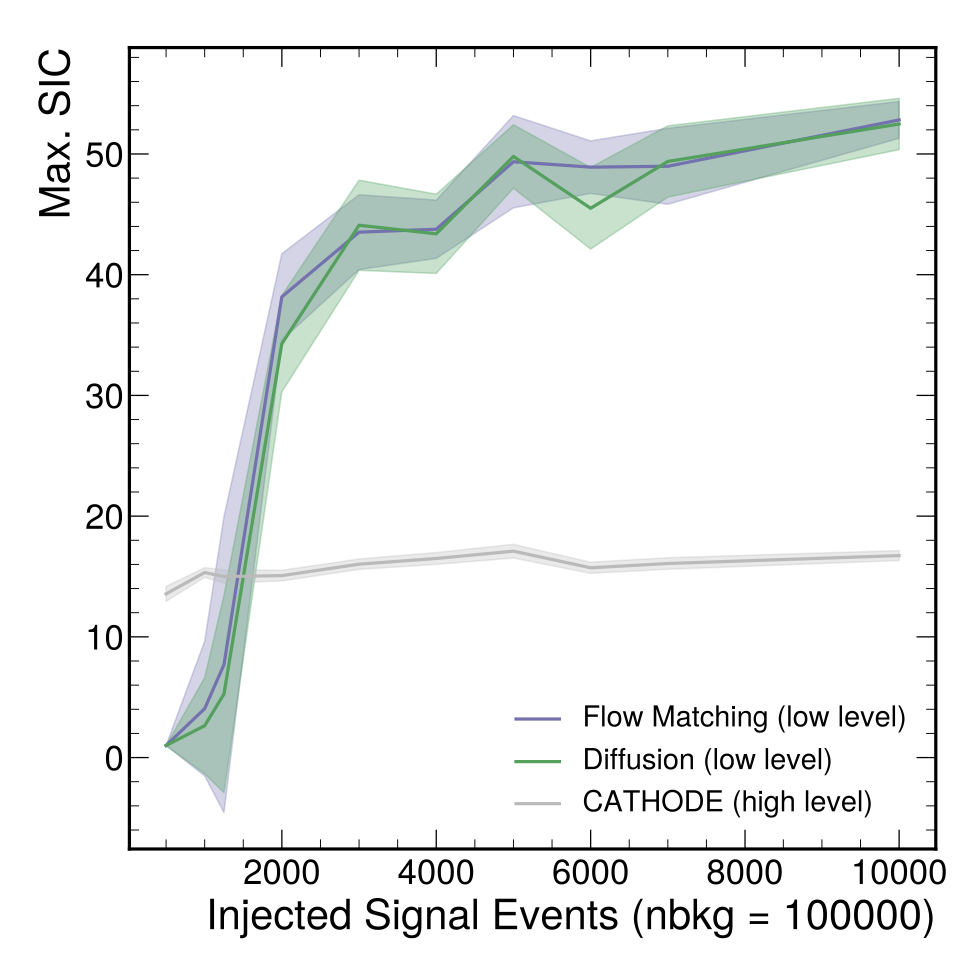

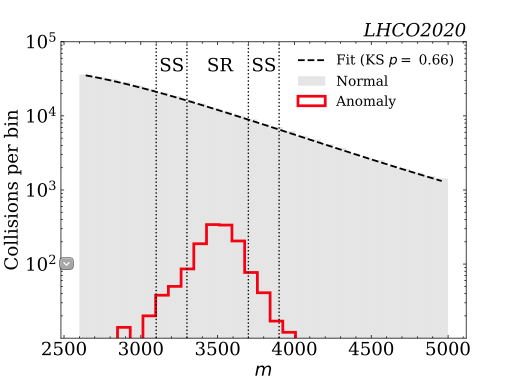

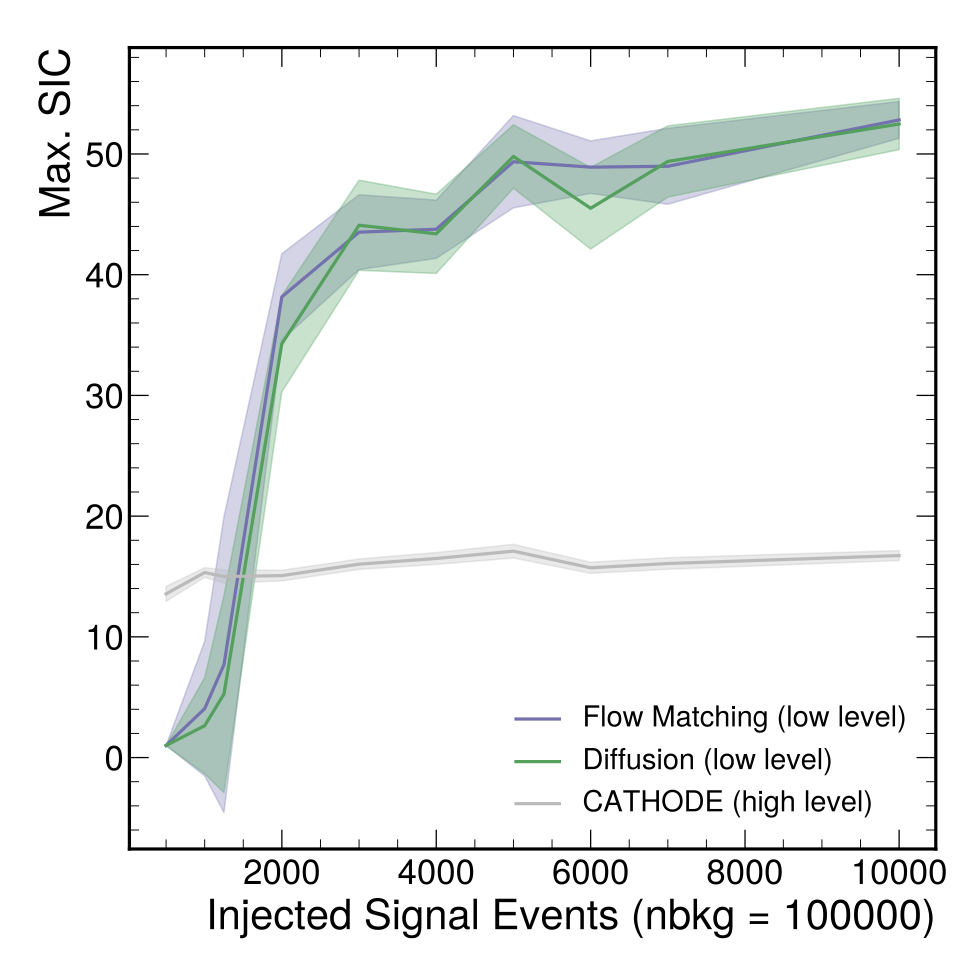

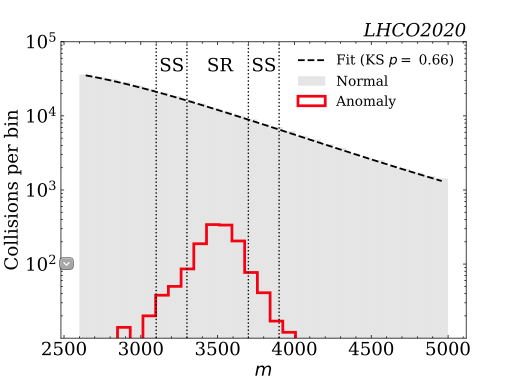

Physics beyond the Standard Model that is resonant in one or more dimensions has been a longstanding focus of countless searches at colliders and beyond. Recently, many new strategies for resonant anomaly detection have been developed, where sideband information can be used in conjunction with modern machine learning, in order to generate synthetic datasets representing the Standard Model background. Until now, this approach was only able to accommodate a relatively small number of dimensions, limiting the breadth of the search sensitivity. Using recent innovations in point cloud generative models, we show that this strategy can also be applied to the full phase space, using all relevant particles for the anomaly detection. As a proof of principle, we show that the signal from the R&D dataset from the LHC Olympics is findable with this method, opening up the door to future studies that explore the interplay between depth and breadth in the representation of the data for anomaly detection.

author="{F. Acosta, B. Karki, P. Karande, A. Angerami, M. Arratia, K. Barish, R. Milton, S. Morán, B. Nachman, A. Sinha}",

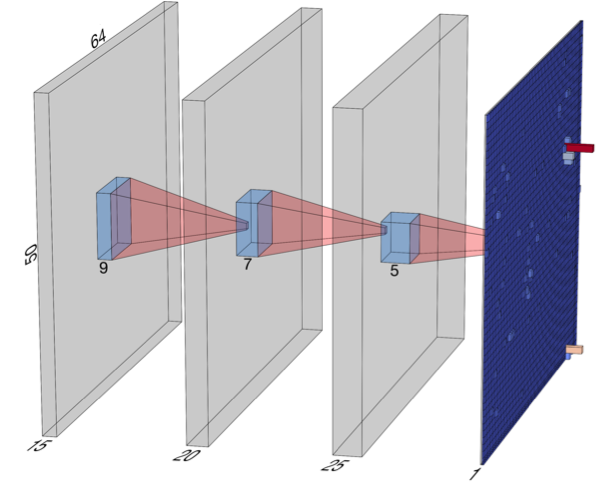

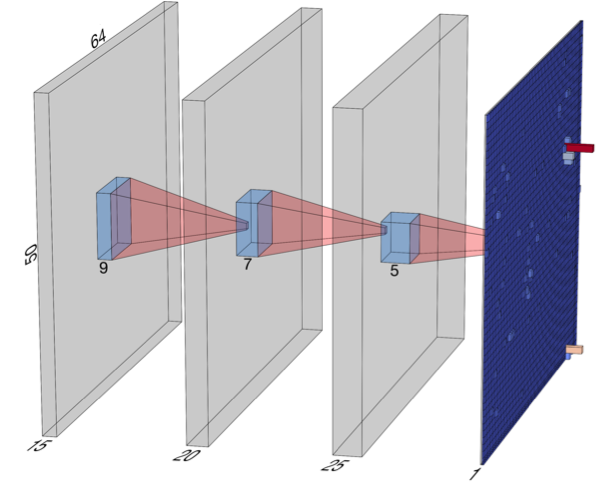

title="{The Optimal use of Segmentation for Sampling Calorimeters}",

eprint="2310.04442",

archivePrefix = "arXiv",

primaryClass = "physics.ins-det",

year = "2023"}

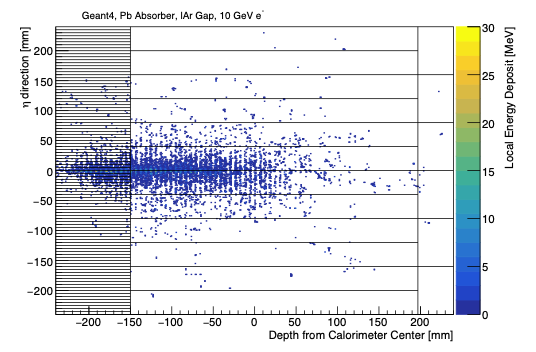

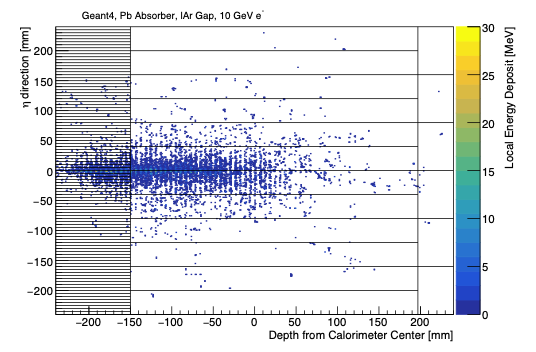

One of the key design choices of any sampling calorimeter is how fine to make the longitudinal and transverse segmentation. To inform this choice, we study the impact of calorimeter segmentation on energy reconstruction. To ensure that the trends are due entirely to hardware and not to a sub-optimal use of segmentation, we deploy deep neural networks to perform the reconstruction. These networks make use of all available information by representing the calorimeter as a point cloud. To demonstrate our approach, we simulate a detector similar to the forward calorimeter system intended for use in the ePIC detector, which will operate at the upcoming Electron Ion Collider. We find that for the energy estimation of isolated charged pion showers, relatively fine longitudinal segmentation is key to achieving an energy resolution that is better than 10% across the full phase space. These results provide a valuable benchmark for ongoing EIC detector optimizations and may also inform future studies involving high-granularity calorimeters in other experiments at various facilities.

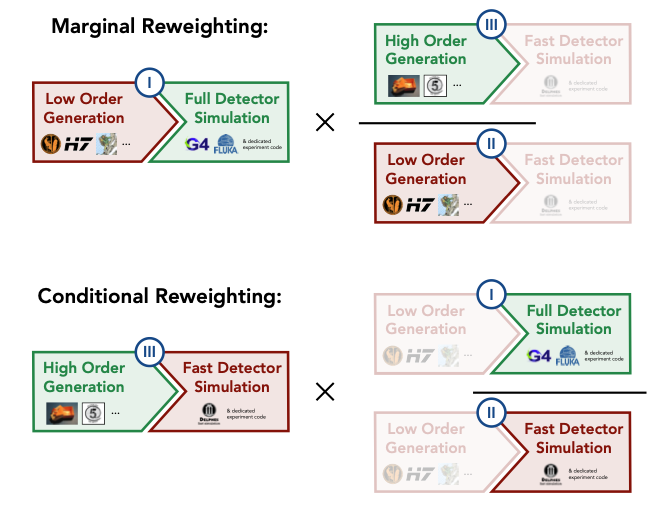

author="{T. Golling, S. Klein, R. Mastandrea, B. Nachman, J. Raine}",

title="{Flows for Flows: Morphing one Dataset into another with Maximum Likelihood Estimation}",

eprint="2309.06472",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

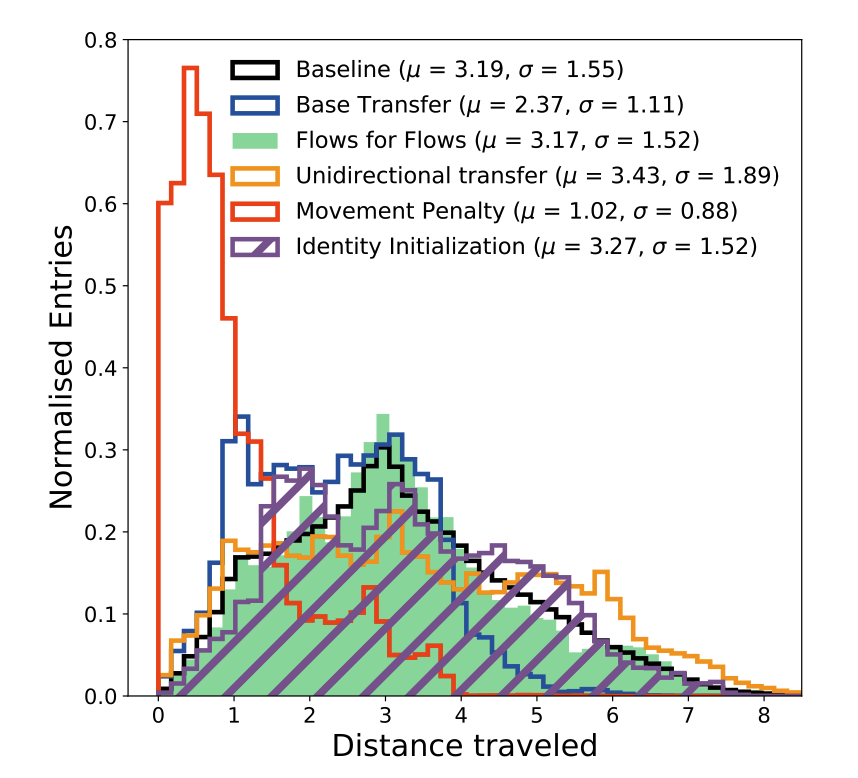

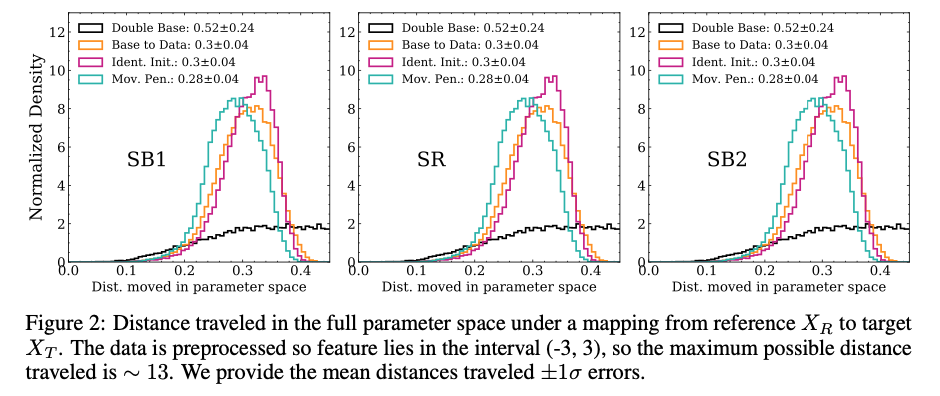

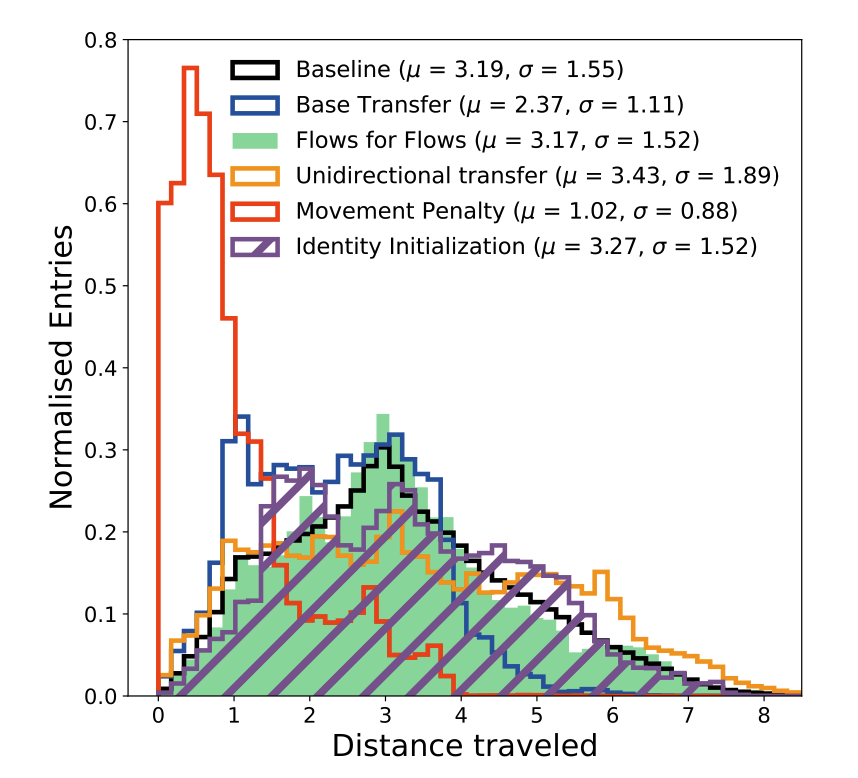

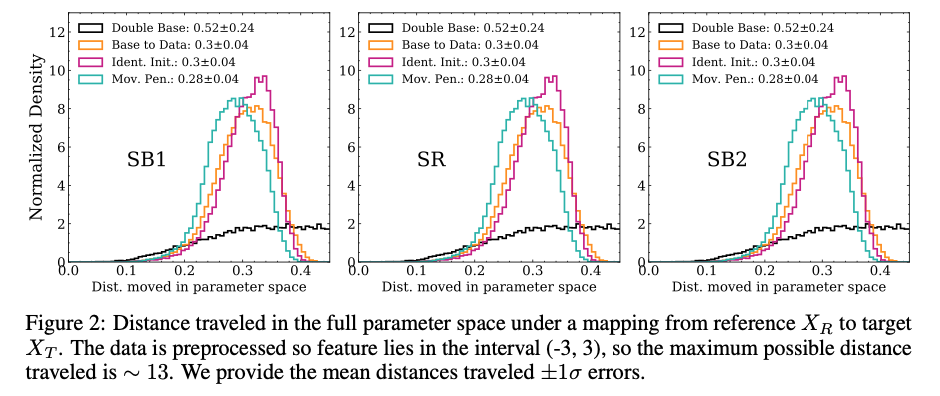

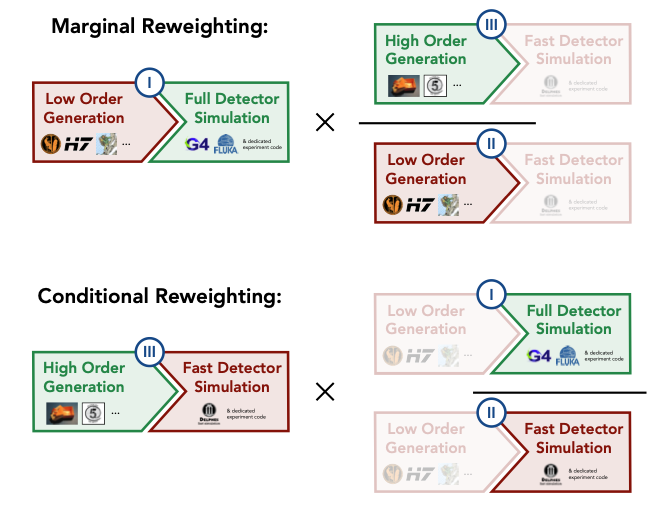

Many components of data analysis in high energy physics and beyond require morphing one dataset into another. This is commonly solved via reweighting, but there are many advantages of preserving weights and shifting the data points instead. Normalizing flows are machine learning models with impressive precision on a variety of particle physics tasks. Naively, normalizing flows cannot be used for morphing because they require knowledge of the probability density of the starting dataset. In most cases in particle physics, we can generate more examples, but we do not know densities explicitly. We propose a protocol called flows for flows for training normalizing flows to morph one dataset into another even if the underlying probability density of neither dataset is known explicitly. This enables a morphing strategy trained with maximum likelihood estimation, a setup that has been shown to be highly effective in related tasks. We study variations on this protocol to explore how far the data points are moved to statistically match the two datasets. Furthermore, we show how to condition the learned flows on particular features in order to create a morphing function for every value of the conditioning feature. For illustration, we demonstrate flows for flows for toy examples as well as a collider physics example involving dijet events.

author="{S. Diefenbacher, G. Liu, V. Mikuni, B. Nachman, W. Nie}",

title="{Improving Generative Model-based Unfolding with Schrödinger Bridges}",

eprint="2308.12351",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

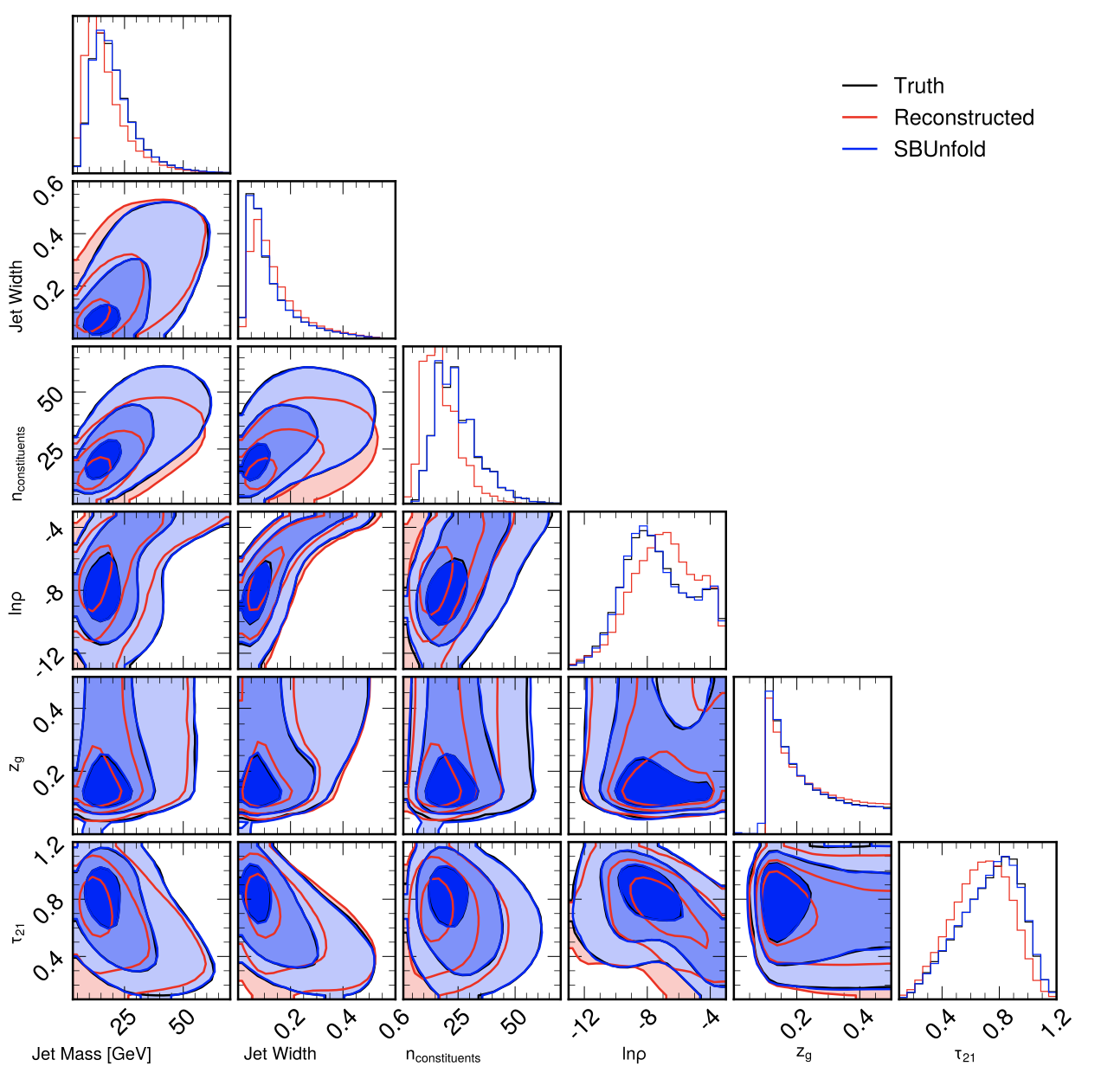

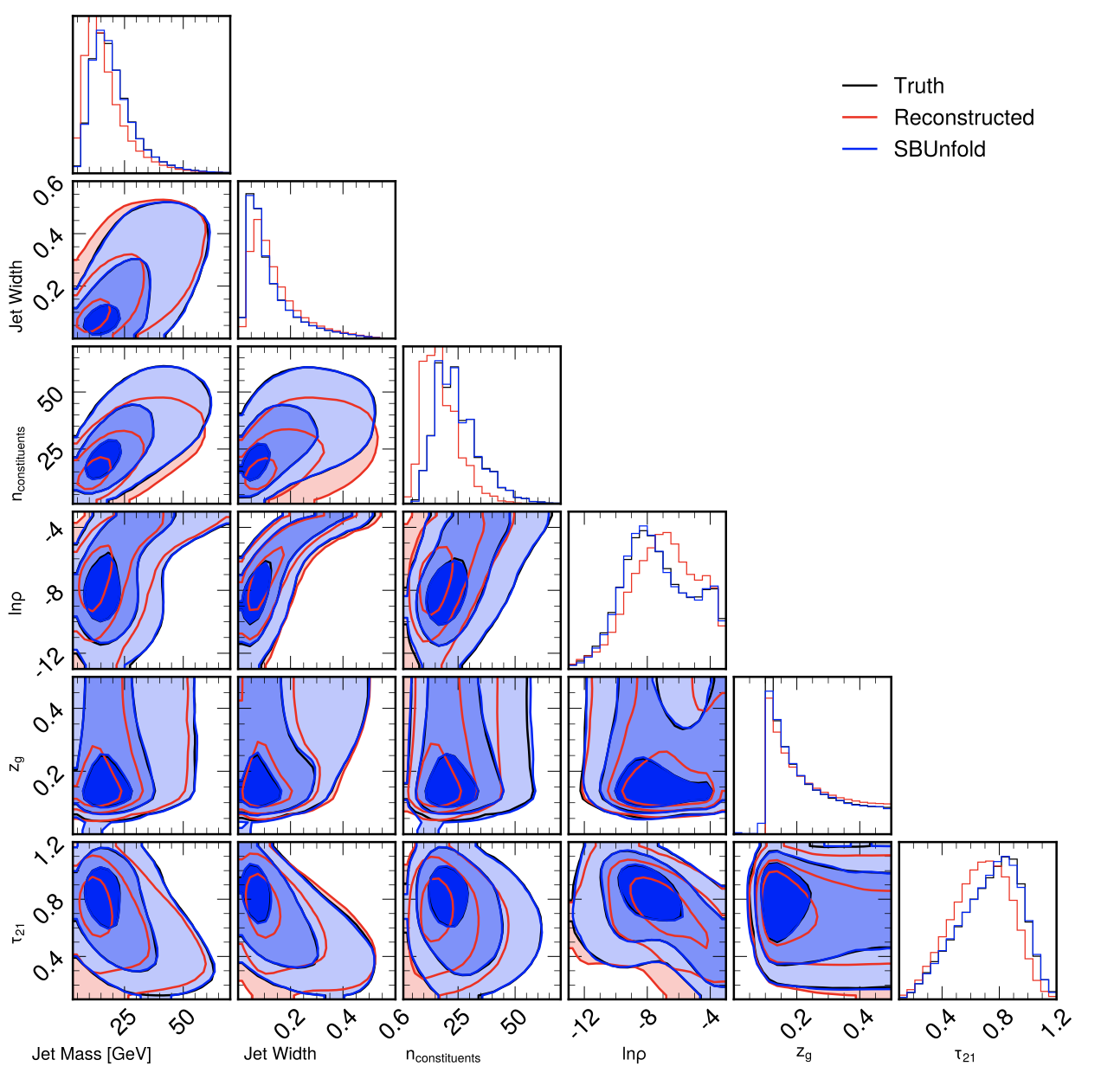

Machine learning-based unfolding has enabled unbinned and high-dimensional differential cross section measurements. Two main approaches have emerged in this research area: one based on discriminative models and one based on generative models. The main advantage of discriminative models is that they learn a small correction to a starting simulation while generative models scale better to regions of phase space with little data. We propose to use Schrödinger Bridges and diffusion models to create SBUnfold, an unfolding approach that combines the strengths of both discriminative and generative models. The key feature of SBUnfold is that its generative model maps one set of events into another without having to go through a known probability density as is the case for normalizing flows and standard diffusion models. We show that SBUnfold achieves excellent performance compared to state-of-the-art methods on a synthetic Z+jets dataset.

author="{S. Diefenbacher, V. Mikuni, B. Nachman}",

title="{Refining Fast Calorimeter Simulations with a Schrödinger Bridge}",

eprint="2308.12339",

archivePrefix = "arXiv",

primaryClass = "physics.ins-det",

year = "2023"}

Machine learning-based simulations, especially calorimeter simulations, are promising tools for approximating the precision of classical high energy physics simulations with a fraction of the generation time. Nearly all methods proposed so far learn neural networks that map a random variable with a known probability density, like a Gaussian, to realistic-looking events. In many cases, physics events are not close to Gaussian and so these neural networks have to learn a highly complex function. We study an alternative approach: Schrödinger bridge Quality Improvement via Refinement of Existing Lightweight Simulations (SQuIRELS). SQuIRELS leverages the power of diffusion-based neural networks and Schrödinger bridges to map between samples where the probability density is not known explicitly. We apply SQuIRELS to the task of refining a classical fast simulation to approximate a full classical simulation. On simulated calorimeter events, we find that SQuIRELS is able to reproduce highly non-trivial features of the full simulation with a fraction of the generation time.

author="{V. Mikuni, B. Nachman}",

title="{CaloScore v2: Single-shot Calorimeter Shower Simulation with Diffusion Models}",

eprint="2308.03847",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

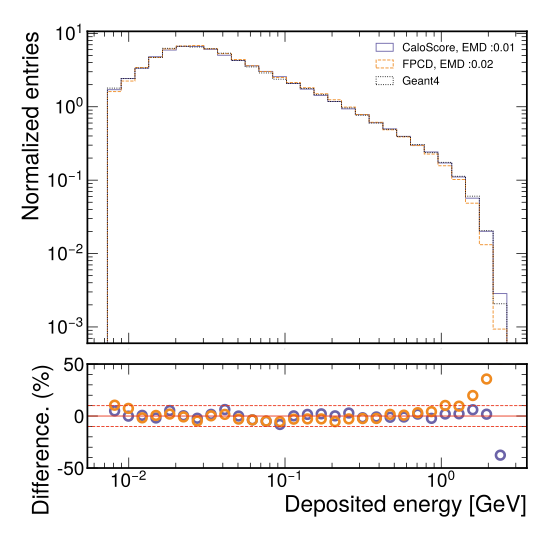

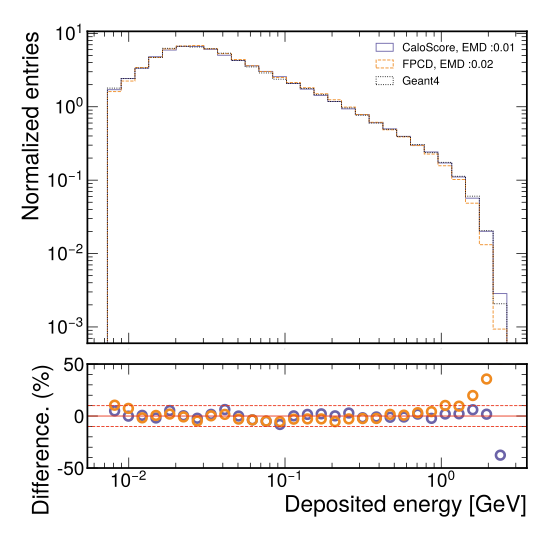

Diffusion generative models are promising alternatives for fast surrogate models, producing high-fidelity physics simulations. However, the generation time often requires an expensive denoising process with hundreds of function evaluations, restricting the current applicability of these models in a realistic setting. In this work, we report updates on the CaloScore architecture, detailing the changes in the diffusion process, which produces higher quality samples, and the use of progressive distillation, resulting in a diffusion model capable of generating new samples with a single function evaluation. We demonstrate these improvements using the Calorimeter Simulation Challenge 2022 dataset.

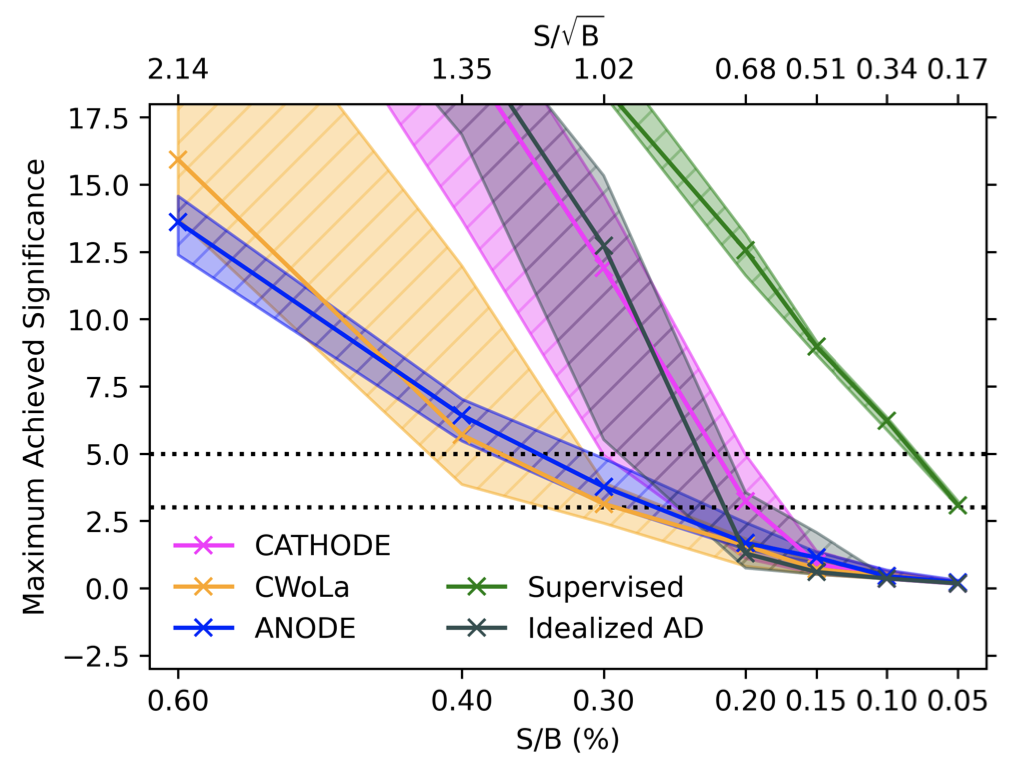

author="{T. Golling, G. Kasieczka, C. Krause, R. Mastandrea, B. Nachman, J. Raine, D. Sengupta, D. Shih, M. Sommerhalder}",

title="{The Interplay of Machine Learning--based Resonant Anomaly Detection Methods}",

eprint="2307.11157",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

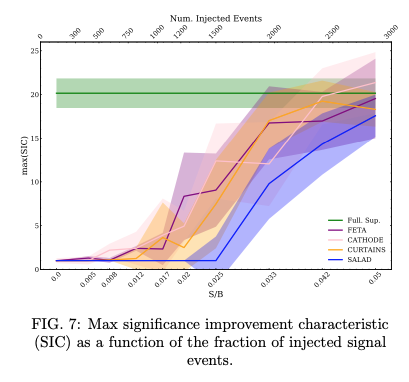

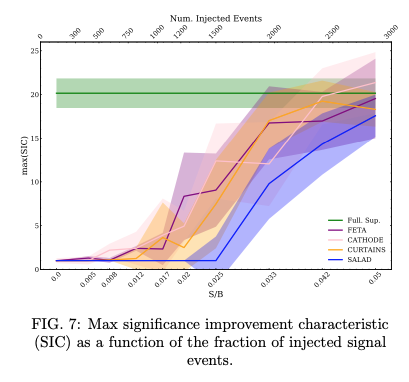

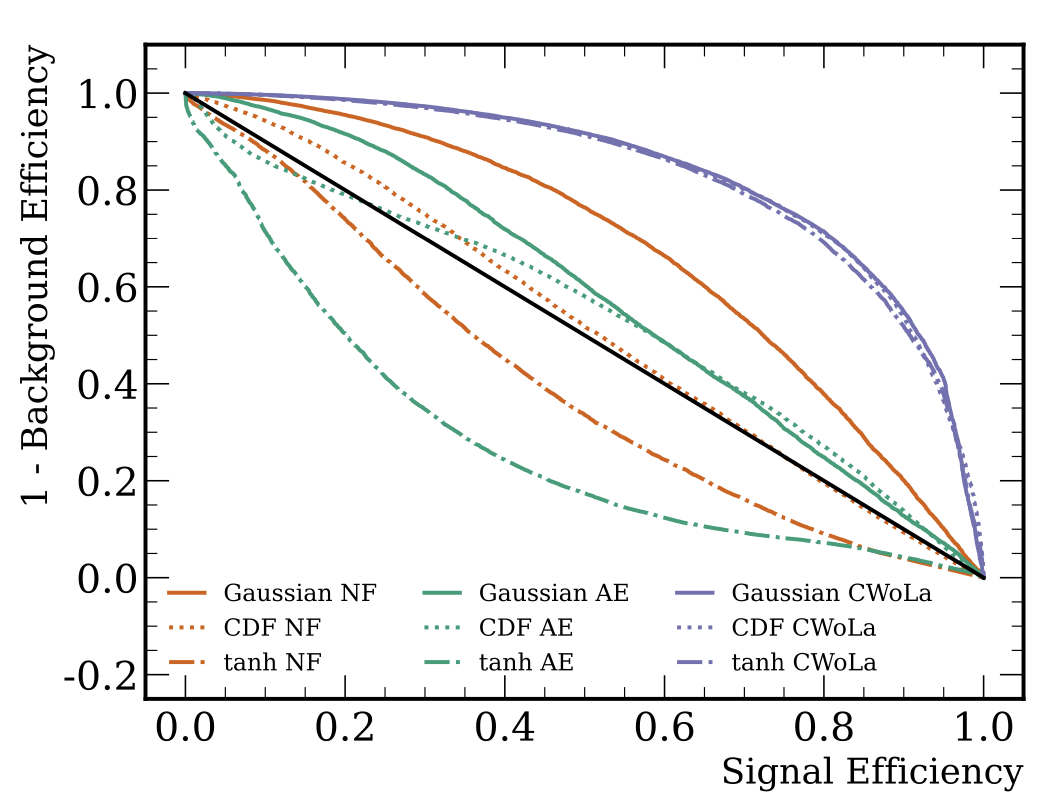

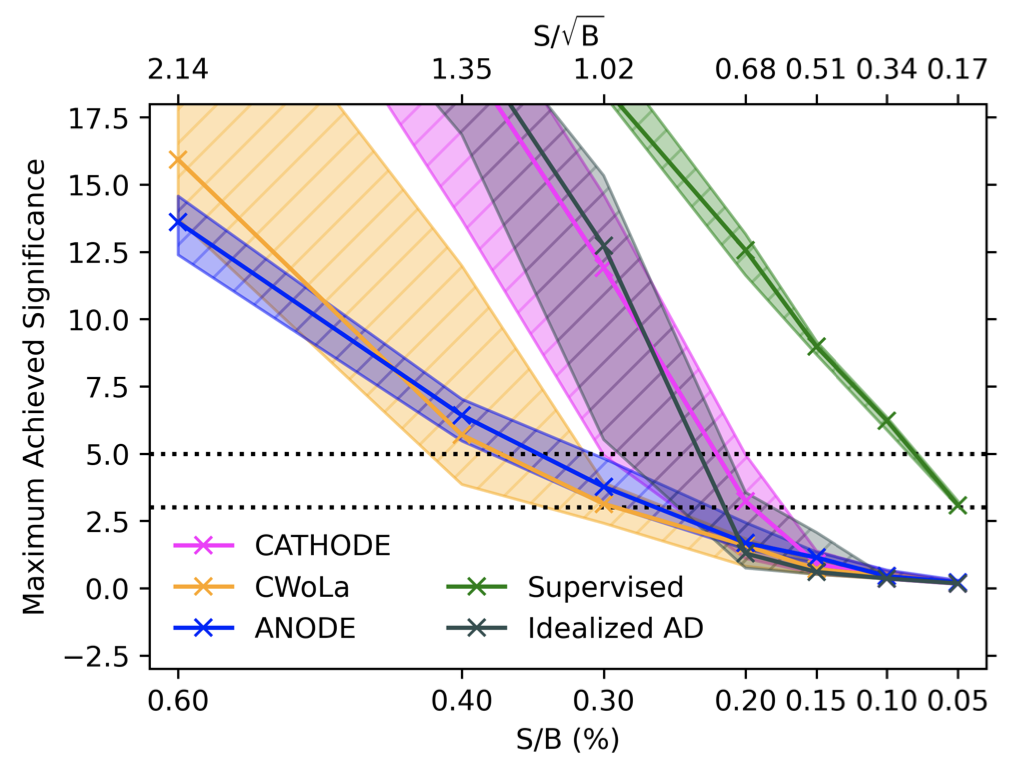

Machine learning--based anomaly detection (AD) methods are promising tools for extending the coverage of searches for physics beyond the Standard Model (BSM). One class of AD methods that has received significant attention is resonant anomaly detection, where the BSM is assumed to be localized in at least one known variable. While there have been many methods proposed to identify such a BSM signal that make use of simulated or detected data in different ways, there has not yet been a study of the methods' complementarity. To this end, we address two questions. First, in the absence of any signal, do different methods pick the same events as signal-like? If not, then we can significantly reduce the false-positive rate by comparing different methods on the same dataset. Second, if there is a signal, are different methods fully correlated? Even if their maximum performance is the same, since we do not know how much signal is present, it may be beneficial to combine approaches. Using the Large Hadron Collider (LHC) Olympics dataset, we provide quantitative answers to these questions. We find that there are significant gains possible by combining multiple methods, which will strengthen the search program at the LHC and beyond.

author="{F. Acosta, V. Mikuni, B. Nachman, M. Arratia, K. Barish, B. Karki, R. Milton, P. Karande, A. Angerami}",

title="{Comparison of Point Cloud and Image-based Models for Calorimeter Fast Simulation}",

eprint="2307.04780",

archivePrefix = "arXiv",

primaryClass = "cs.LG",

year = "2023"}

Score-based generative models are a new class of generative models that have been shown to accurately generate high-dimensional calorimeter datasets. Recent advances in generative models have used images with 3D voxels to represent and model complex calorimeter showers. Point clouds, however, are likely a more natural representation of calorimeter showers, particularly in calorimeters with high granularity. Point clouds preserve all of the information of the original simulation, more naturally deal with sparse datasets, and can be implemented with more compact models and data files. In this work, two state-of-the-art score-based models are trained on the same set of calorimeter simulations and directly compared.

author="{E. Witkowski, B. Nachman, D. Whiteson}",

title="{Learning to Isolate Muons in Data}",

eprint="2306.15737",

archivePrefix = "arXiv",

primaryClass = "hep-ex",

year = "2023"}

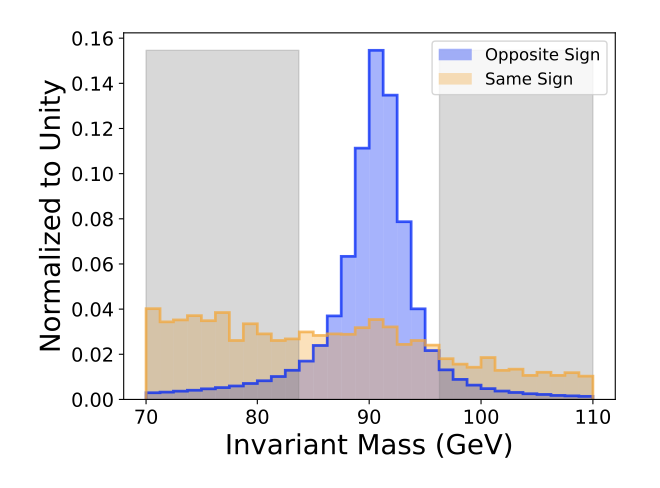

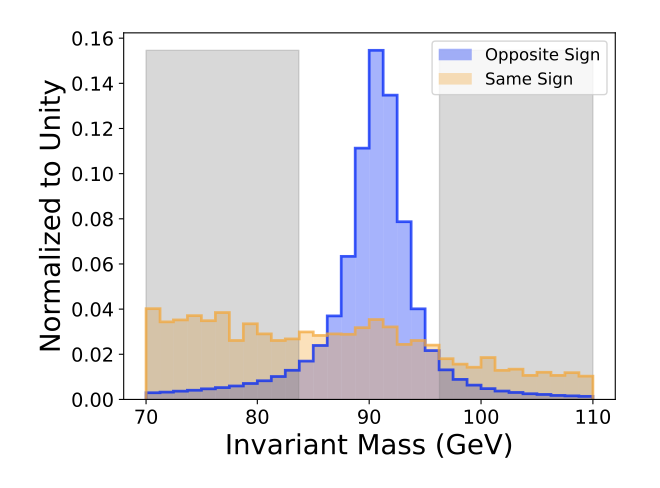

We use unlabeled collision data and weakly-supervised learning to train models which can distinguish prompt muons from non-prompt muons using patterns of low-level particle activity in the vicinity of the muon, and interpret the models in the space of energy flow polynomials. Particle activity associated with muons is a valuable tool for identifying prompt muons, those due to heavy boson decay, from muons produced in the decay of heavy flavor jets. The high-dimensional information is typically reduced to a single scalar quantity, isolation, but previous work in simulated samples suggests that valuable discriminating information is lost in this reduction. We extend these studies in LHC collisions recorded by the CMS experiment, where true class labels are not available, requiring the use of the invariant mass spectrum to obtain macroscopic sample information. This allows us to employ Classification Without Labels (CWoLa), a weakly supervised learning technique, to train models. Our results confirm that isolation does not describe events as well as the full low-level calorimeter information, and we are able to identify single energy flow polynomials capable of closing the performance gap. These polynomials are not the same ones derived from simulation, highlighting the importance of training directly on data.

author="{V. Mikuni, B. Nachman}",

title="{High-dimensional and Permutation Invariant Anomaly Detection}",

eprint="2306.03933",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

Methods for anomaly detection of new physics processes are often limited to low-dimensional spaces due to the difficulty of learning high-dimensional probability densities. Particularly at the constituent level, incorporating desirable properties such as permutation invariance and variable-length inputs becomes difficult within popular density estimation methods. In this work, we introduce a permutation-invariant density estimator for particle physics data based on diffusion models, specifically designed to handle variable-length inputs. We demonstrate the efficacy of our methodology by utilizing the learned density as a permutation-invariant anomaly detection score, effectively identifying jets with low likelihood under the background-only hypothesis. To validate our density estimation method, we investigate the ratio of learned densities and compare to those obtained by a supervised classification algorithm.

author="{J. Chan, X. Ju, A. Kania, B. Nachman, V. Sangli, A. Siodmok}",

title="{Fitting a Deep Generative Hadronization Model}",

eprint="2305.17169",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

Hadronization is a critical step in the simulation of high-energy particle and nuclear physics experiments. As there is no first-principles understanding of this process, physically-inspired hadronization models have a large number of parameters that are fit to data. Deep generative models are a natural replacement for classical techniques, since they are more flexible and may be able to improve the overall precision. Proof-of-principle studies have shown how to use neural networks to emulate specific hadronization models when trained using the inputs and outputs of classical methods. However, these approaches will not work with data, where we do not have a matching between observed hadrons and partons. In this paper, we develop a protocol for fitting a deep generative hadronization model in a realistic setting, where we only have access to a set of hadrons in data. Our approach uses a variation of a Generative Adversarial Network with a permutation-invariant discriminator. We find that this setup is able to match the hadronization model in Herwig with multiple sets of parameters. This work represents a significant step forward in a longer-term program to develop, train, and integrate machine learning-based hadronization models into parton shower Monte Carlo programs.

author="{S. Rizvi, M. Pettee, B. Nachman}",

title="{Learning Likelihood Ratios with Neural Network Classifiers}",

eprint="2305.10500",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

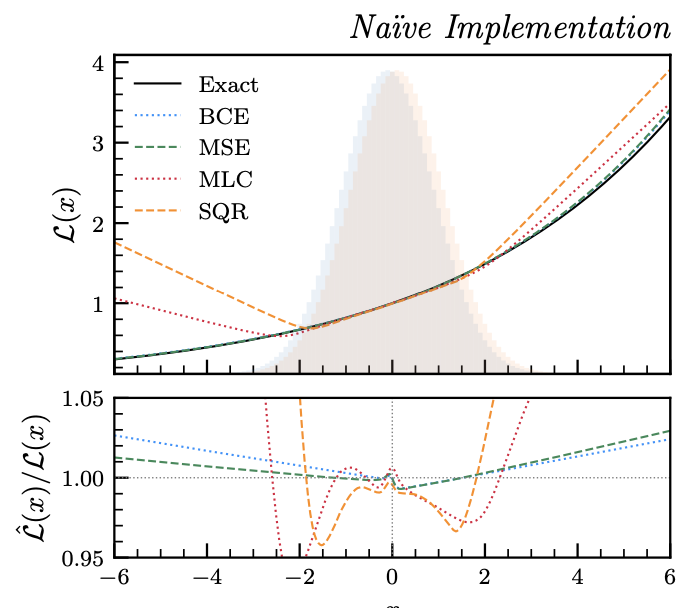

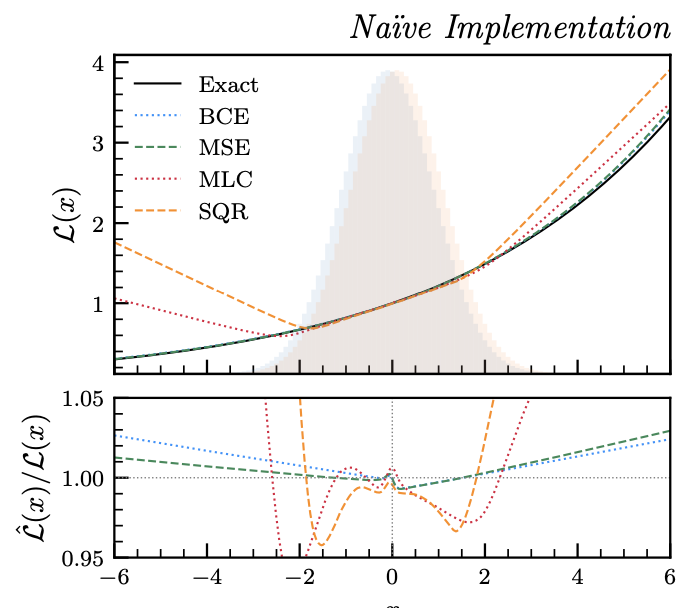

The likelihood ratio is a crucial quantity for statistical inference in science that enables hypothesis testing, construction of confidence intervals, reweighting of distributions, and more. Many modern scientific applications, however, make use of data- or simulation-driven models for which computing the likelihood ratio can be very difficult or even impossible. By applying the so-called "likelihood ratio trick," approximations of the likelihood ratio may be computed using clever parametrizations of neural network-based classifiers. A number of different neural network setups can be defined to satisfy this procedure, each with varying performance in approximating the likelihood ratio when using finite training data. We present a series of empirical studies detailing the performance of several common loss functionals and parametrizations of the classifier output in approximating the likelihood ratio of two univariate and multivariate Gaussian distributions as well as simulated high-energy particle physics datasets.
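
The likelihood ratio trick mentioned in this abstract can be illustrated with a minimal sketch. This is not the setup studied in the paper. A classifier f trained with binary cross-entropy to separate samples drawn from p and q tends towards f = p/(p+q), so f/(1-f) approximates p/q. The example assumes two unit-variance 1D Gaussians with means 0 and 1, for which the exact log ratio is x - 0.5, and uses a hypothetical one-parameter logistic model in place of a neural network. All function names are illustrative.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, lr=0.5, epochs=200):
    """Fit f(x) = sigmoid(w*x + b) by full-batch gradient descent on binary cross-entropy."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # derivative of the loss w.r.t. the logit
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

random.seed(0)
n_per_class = 2000
# Label 0: samples from q = N(0, 1). Label 1: samples from p = N(1, 1).
xs = [random.gauss(0.0, 1.0) for _ in range(n_per_class)]
xs += [random.gauss(1.0, 1.0) for _ in range(n_per_class)]
ys = [0] * n_per_class + [1] * n_per_class

w, b = fit_logistic(xs, ys)

def learned_log_ratio(x):
    # log[f / (1 - f)] equals the logit w*x + b for a logistic model
    return w * x + b

def exact_log_ratio(x):
    # log[N(x; 1, 1) / N(x; 0, 1)] = x - 1/2
    return x - 0.5

for x in (-1.0, 0.0, 1.0, 2.0):
    print(x, round(learned_log_ratio(x), 3), exact_log_ratio(x))
```

With equal-sized training samples the learned logit tracks the exact log ratio up to statistical fluctuations. Unequal sample sizes would shift the logit by the log of the class-size ratio.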

author="B. Nachman, R. Winterhalder",

title="{ELSA -- Enhanced latent spaces for improved collider simulations}",

eprint="2305.07696",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

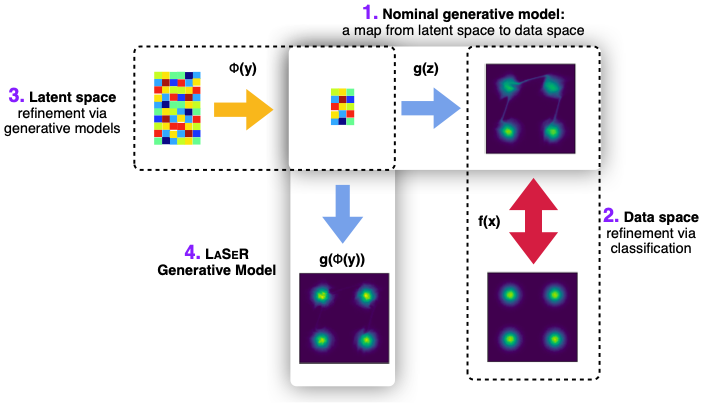

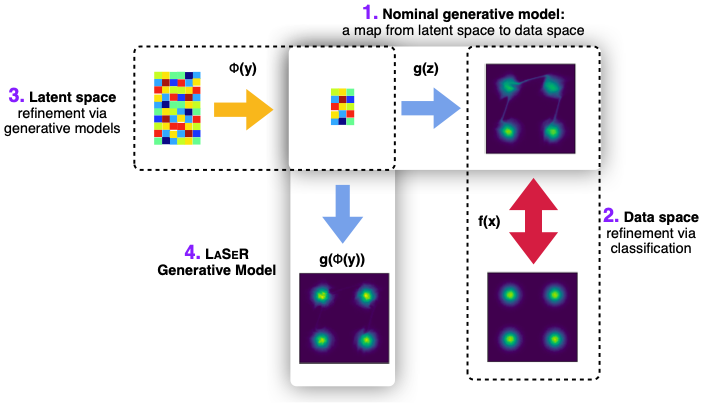

Simulations play a key role for inference in collider physics. We explore various approaches for enhancing the precision of simulations using machine learning, including interventions at the end of the simulation chain (reweighting), at the beginning of the simulation chain (pre-processing), and connections between the end and beginning (latent space refinement). To clearly illustrate our approaches, we use W+jets matrix element surrogate simulations based on normalizing flows as a prototypical example. First, weights in the data space are derived using machine learning classifiers. Then, we pull back the data-space weights to the latent space to produce unweighted examples and employ the Latent Space Refinement (LASER) protocol using Hamiltonian Monte Carlo. An alternative approach is an augmented normalizing flow, which allows for different dimensions in the latent and target spaces. These methods are studied for various pre-processing strategies, including a new and general method for massive particles at hadron colliders that is a tweak on the widely-used RAMBO-on-diet mapping. We find that modified simulations can achieve sub-percent precision across a wide range of phase space.

author="{M. Pettee, S. Thanvantri, B. Nachman, D. Shih, M. Buckley, J. Collins}",

title="{Weakly-Supervised Anomaly Detection in the Milky Way}",

eprint="2305.03761",

archivePrefix = "arXiv",

primaryClass = "astro-ph.GA",

year = "2023"}

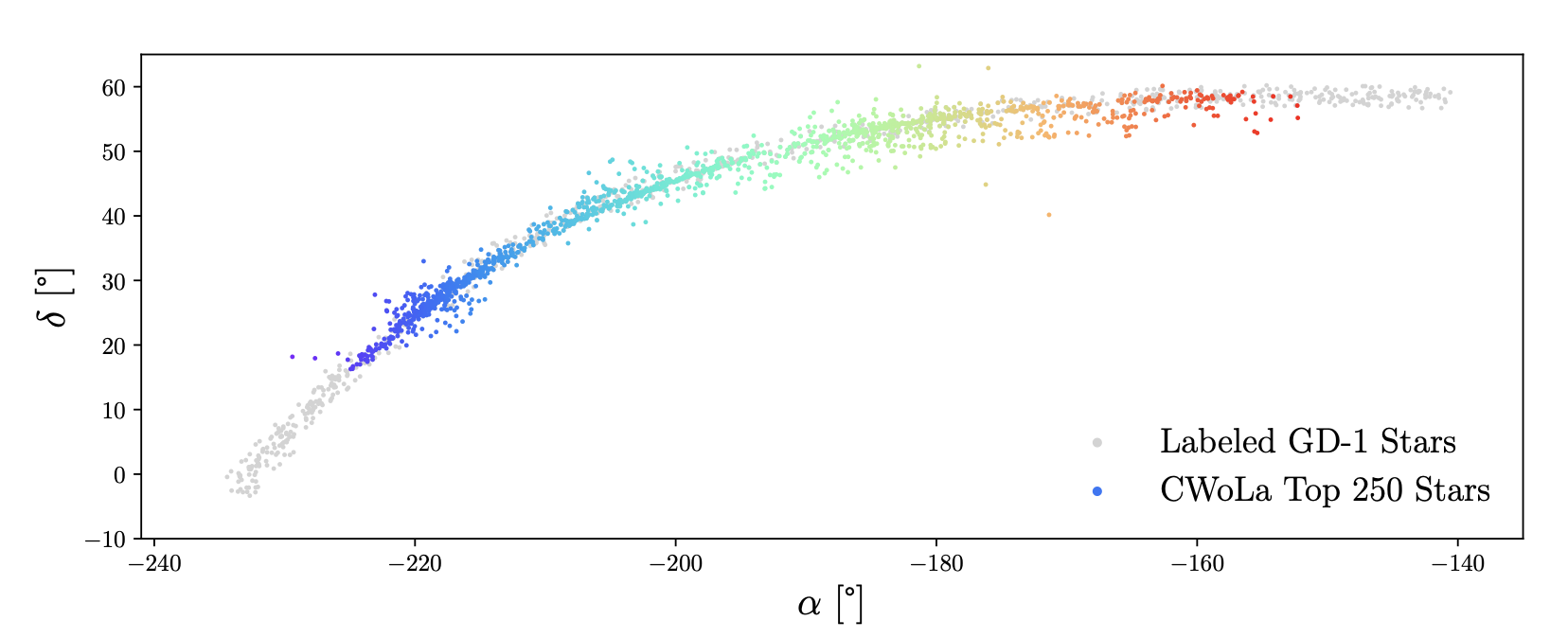

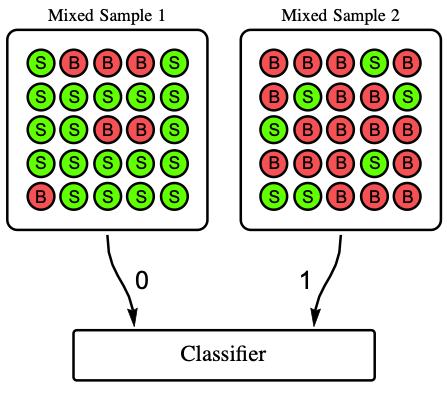

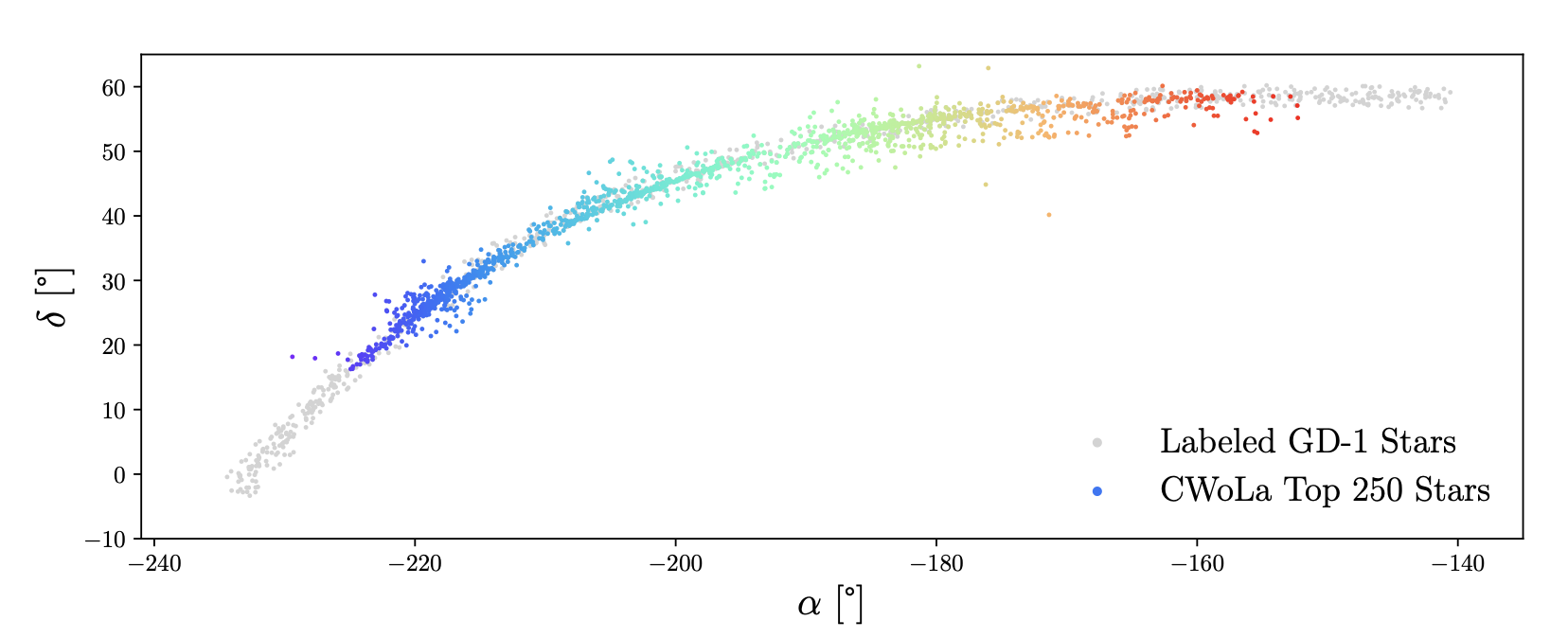

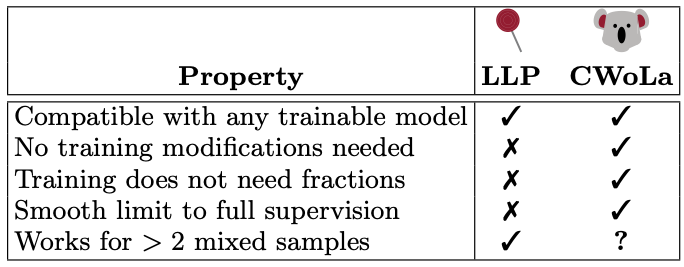

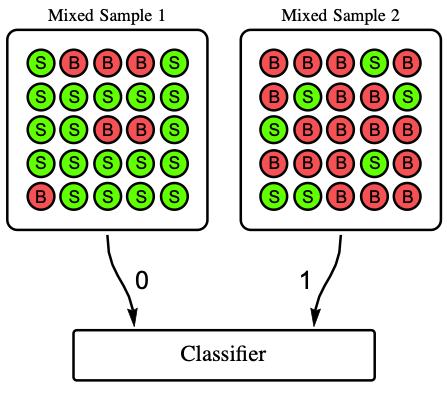

Large-scale astrophysics datasets present an opportunity for new machine learning techniques to identify regions of interest that might otherwise be overlooked by traditional searches. To this end, we use Classification Without Labels (CWoLa), a weakly-supervised anomaly detection method, to identify cold stellar streams within the more than one billion Milky Way stars observed by the Gaia satellite. CWoLa operates without the use of labeled streams or knowledge of astrophysical principles. Instead, we train a classifier to distinguish between mixed samples for which the proportions of signal and background samples are unknown. This computationally lightweight strategy is able to detect both simulated streams and the known stream GD-1 in data. Originally designed for high-energy collider physics, this technique may have broad applicability within astrophysics as well as other domains interested in identifying localized anomalies.
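
A short numerical sketch shows why training on mixed samples can work without knowing the signal proportions. It assumes the standard CWoLa argument: for two mixtures with signal fractions f1 > f2, the optimal mixed-sample classifier is a monotonic function of the signal-to-background likelihood ratio L = p_S/p_B. The fractions below are hypothetical and are never given to a classifier. They are only used here to check the monotonicity.

```python
def mixed_sample_ratio(L, f1, f2):
    """Likelihood ratio between mixture 1 and mixture 2 as a function of L = p_S / p_B.

    p_M1 / p_M2 = (f1 * p_S + (1 - f1) * p_B) / (f2 * p_S + (1 - f2) * p_B)
                = (f1 * L + 1 - f1) / (f2 * L + 1 - f2)
    """
    return (f1 * L + (1.0 - f1)) / (f2 * L + (1.0 - f2))

# Hypothetical signal fractions of the two mixed samples (assumed, f1 > f2).
f1, f2 = 0.10, 0.02

L_values = [0.1 * k for k in range(101)]  # L from 0 to 10
ratios = [mixed_sample_ratio(L, f1, f2) for L in L_values]

# Strictly increasing in L, so cutting on the mixed-sample classifier
# selects the same events as cutting on the signal-vs-background ratio.
is_monotonic = all(lo < hi for lo, hi in zip(ratios, ratios[1:]))
print(is_monotonic)
```

The derivative of the mixed ratio with respect to L is proportional to f1 - f2, so the ordering of events is preserved whenever the two samples differ in signal fraction.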

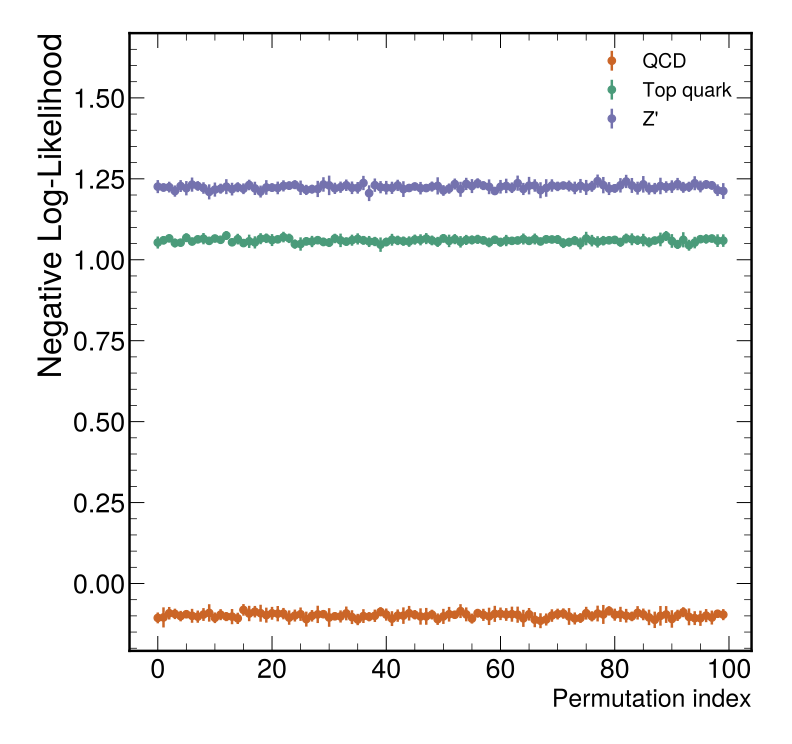

author="S. Qiu, S. Han, X. Ju, B. Nachman, H. Wang",

title="{Parton Labeling without Matching: Unveiling Emergent Labelling Capabilities in Regression Models}",

eprint="2304.09208",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

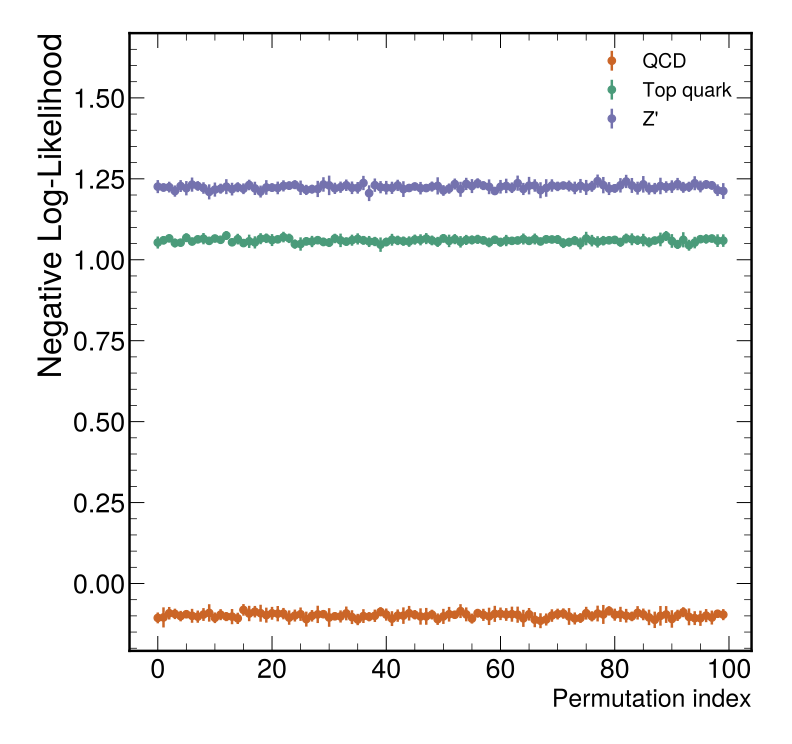

Parton labeling methods are widely used when reconstructing collider events with top quarks or other massive particles. State-of-the-art techniques are based on machine learning and require training data with events that have been matched using simulations with truth information. In nature, there is no unique matching between partons and final state objects due to the properties of the strong force and due to acceptance effects. We propose a new approach to parton labeling that circumvents these challenges by recycling regression models. The final state objects that are most relevant for a regression model to predict the properties of a particular top quark are assigned to said parent particle without having any parton-matched training data. This approach is demonstrated using simulated events with top quarks and outperforms the widely-used chi-squared method.

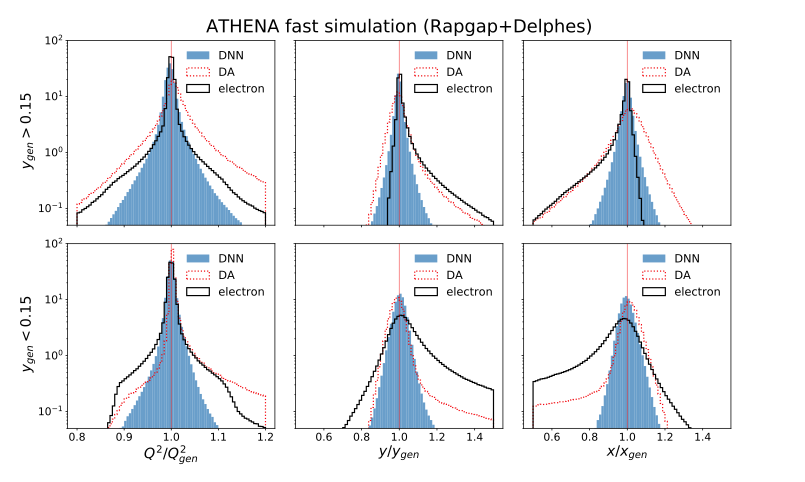

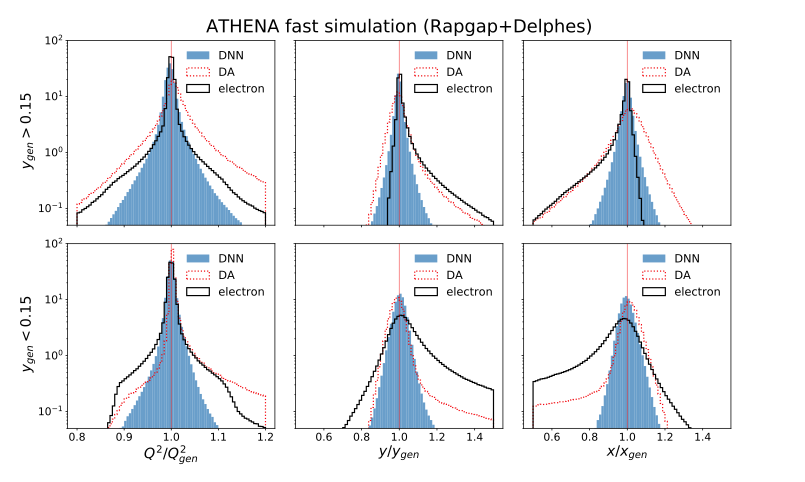

author="H1 Collaboration",

title="{Unbinned Deep Learning Jet Substructure Measurement in High $Q^2$ ep collisions at HERA}",

eprint="2303.13620",

archivePrefix = "arXiv",

primaryClass = "hep-ex",

year = "2023"}

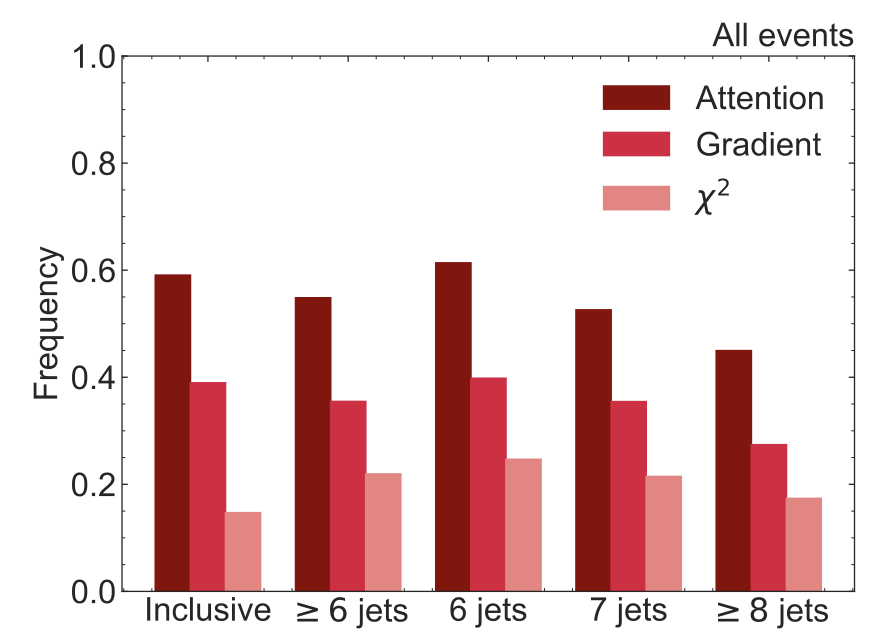

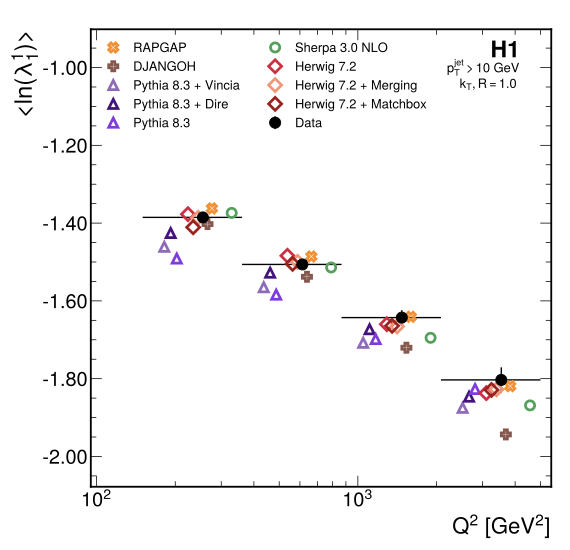

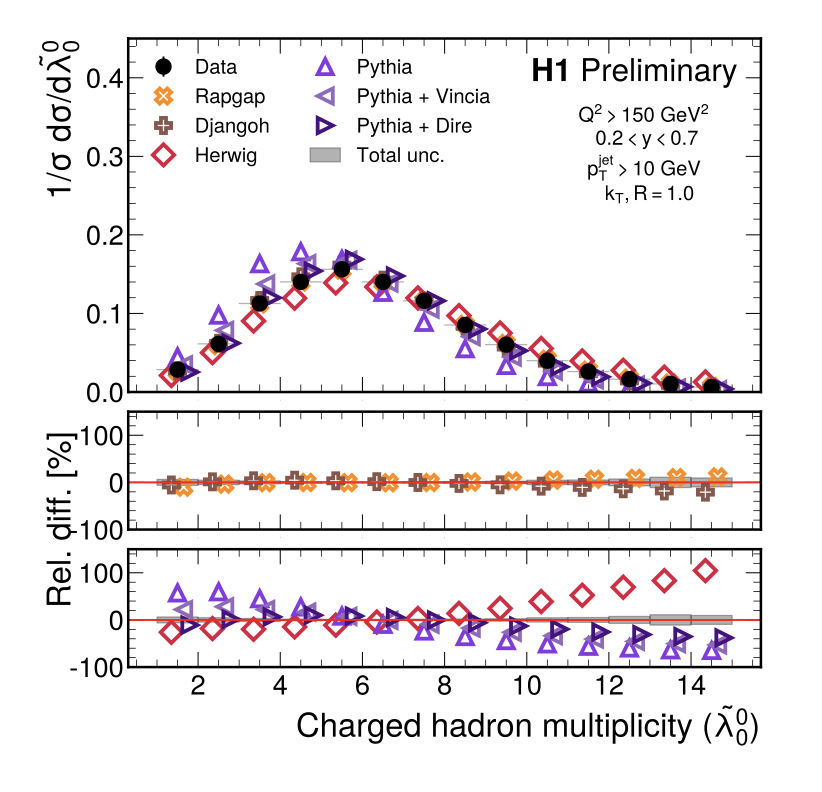

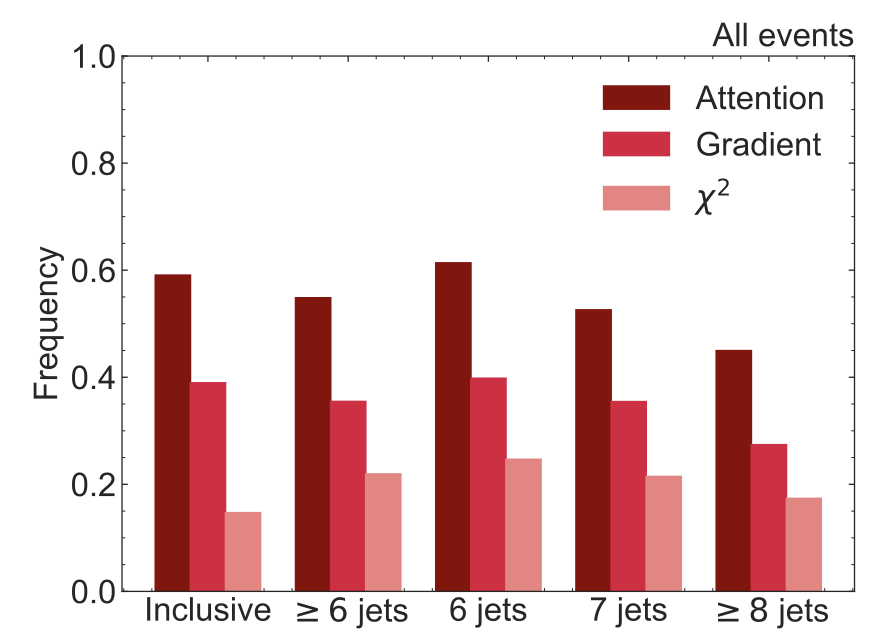

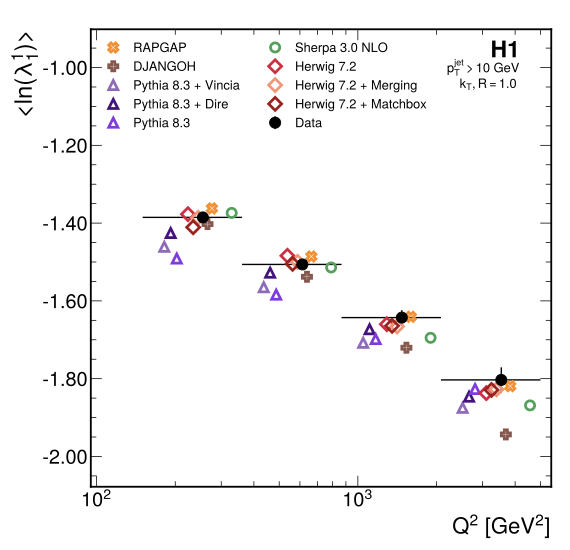

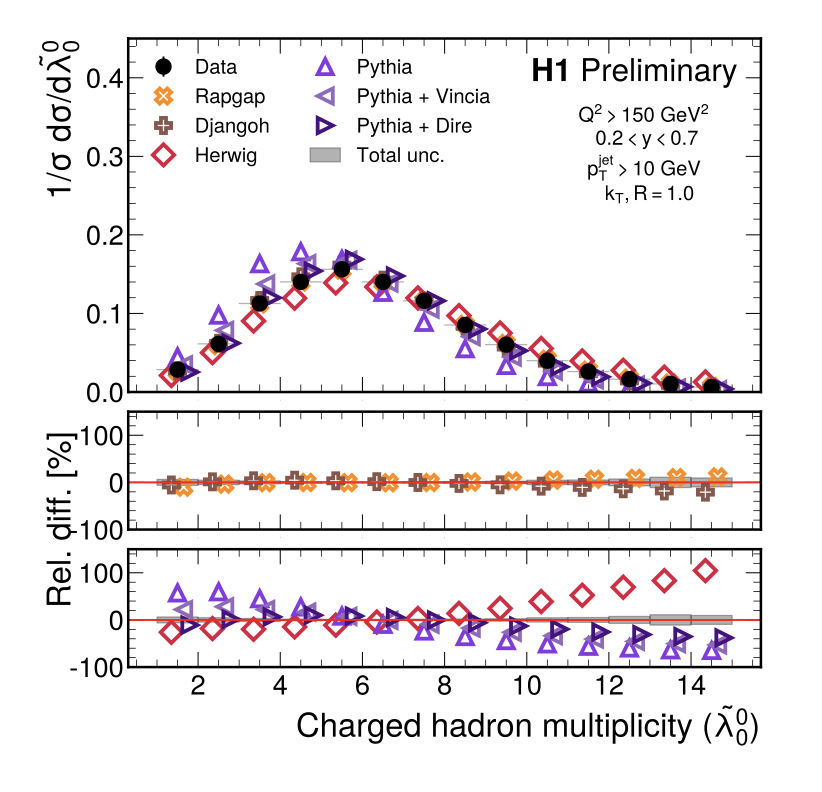

The radiation pattern within high energy quark- and gluon-initiated jets (jet substructure) is used extensively as a precision probe of the strong force as well as an environment for optimizing event generators with numerous applications in high energy particle and nuclear physics. Looking at electron-proton collisions is of particular interest as many of the complications present at hadron colliders are absent. A detailed study of modern jet substructure observables, jet angularities, in electron-proton collisions is presented using data recorded with the H1 detector at HERA. The measurement is unbinned and multi-dimensional, using machine learning to correct for detector effects. All of the available reconstructed object information of the respective jets is interpreted by a graph neural network, achieving superior precision on a selected set of jet angularities. Training these networks was enabled by the use of a large number of GPUs in the Perlmutter supercomputer at Berkeley Lab. The particle jets are reconstructed in the laboratory frame, using the $k_T$ jet clustering algorithm. Results are reported at high transverse momentum transfer and mid inelasticity. The analysis is also performed in sub-regions of $Q^2$, thus probing scale dependencies of the substructure variables. The data are compared with a variety of predictions and point towards possible improvements of such models.

author="{H1 Collaboration}",

title="{Machine learning-assisted measurement of azimuthal angular asymmetries in deep-inelastic scattering with the H1 detector}",

journal = "H1prelim-23-031",

url = "https://www-h1.desy.de/h1/www/publications/htmlsplit/H1prelim-23-031.long.html",

year = "2023",

}

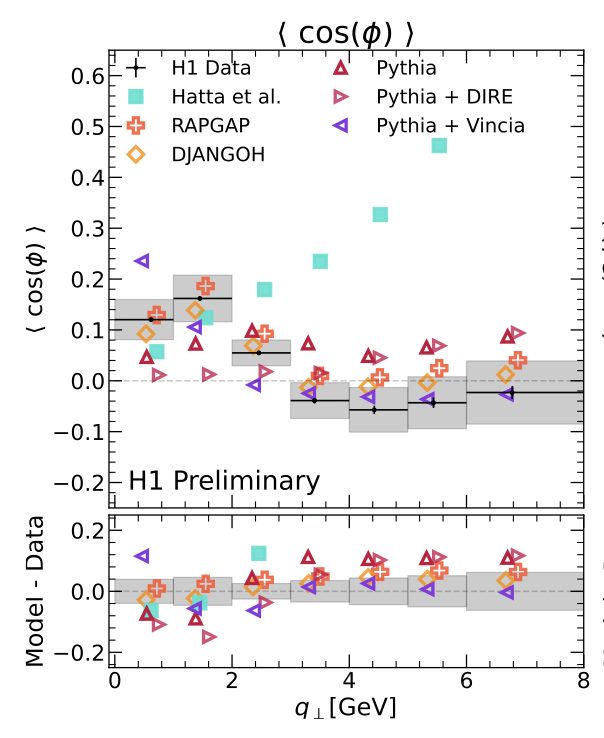

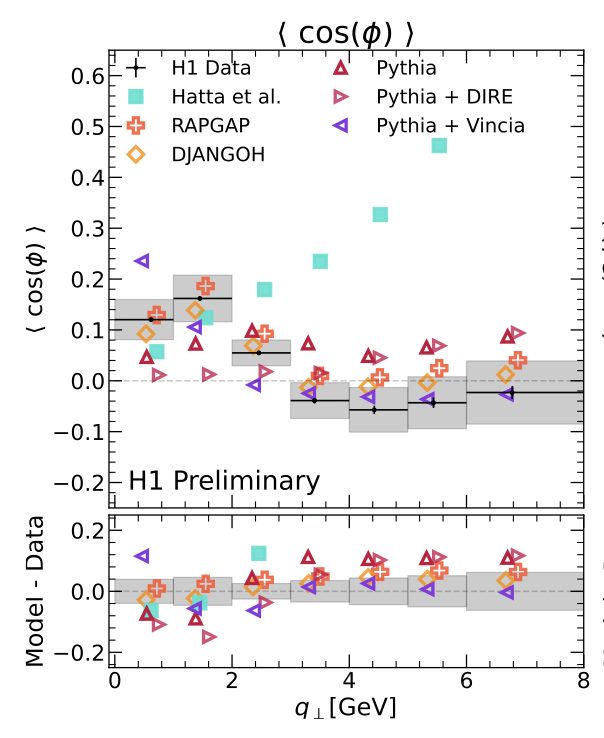

Jet-lepton azimuthal asymmetry harmonics are measured in deep inelastic scattering data collected by the H1 detector using HERA Run II collisions. When the average transverse momentum of the lepton-jet system is much larger than the total transverse momentum of the system, the asymmetry between them is expected to be generated by initial and final state soft gluon radiation and can be predicted using perturbation theory. Quantifying the angular properties of the asymmetry therefore provides a novel test of the strong force and is also an important background to constrain for future measurements of intrinsic asymmetries generated by the proton's constituents through Transverse Momentum Dependent (TMD) Parton Distribution Functions (PDF). Moments of the azimuthal asymmetries are measured using a machine learning technique that does not require binning and thus does not introduce discretization artifacts.
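
To make the idea of an unbinned moment concrete, here is a minimal sketch. It does not reproduce the H1 analysis. It assumes that harmonics are estimated as weighted event averages of cos(n*phi), and that per-event weights are available (all set to 1 here). The toy angular distribution and every function name are hypothetical.

```python
import math
import random

def sample_phi(a1, a2, n_events, rng):
    """Rejection-sample phi from a density proportional to
    1 + 2*a1*cos(phi) + 2*a2*cos(2*phi) on [-pi, pi)."""
    p_max = 1.0 + 2.0 * abs(a1) + 2.0 * abs(a2)
    phis = []
    while len(phis) < n_events:
        phi = rng.uniform(-math.pi, math.pi)
        density = 1.0 + 2.0 * a1 * math.cos(phi) + 2.0 * a2 * math.cos(2.0 * phi)
        if rng.uniform(0.0, p_max) < density:
            phis.append(phi)
    return phis

def harmonic_moment(phis, weights, n):
    """Weighted, unbinned estimate of <cos(n * phi)>; no histogram is filled."""
    numerator = sum(w * math.cos(n * phi) for phi, w in zip(phis, weights))
    return numerator / sum(weights)

rng = random.Random(42)
true_a1, true_a2 = 0.10, 0.05  # toy harmonics, chosen so the density stays positive
phis = sample_phi(true_a1, true_a2, 20000, rng)
weights = [1.0] * len(phis)  # assumed per-event weights (e.g. from a correction step)

est_a1 = harmonic_moment(phis, weights, 1)
est_a2 = harmonic_moment(phis, weights, 2)
print(round(est_a1, 3), round(est_a2, 3))
```

For this toy density the expectation of cos(n*phi) equals the n-th coefficient, so the event averages recover the input harmonics without any binning in phi.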

author="J. Chan, B. Nachman",

title="{Unbinned Profiled Unfolding}",

eprint="2302.05390",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2023"}

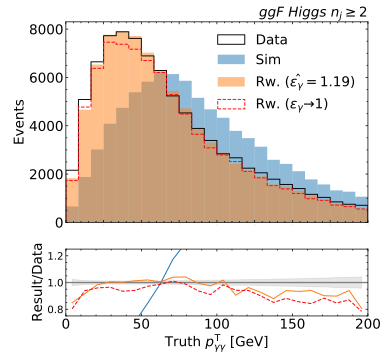

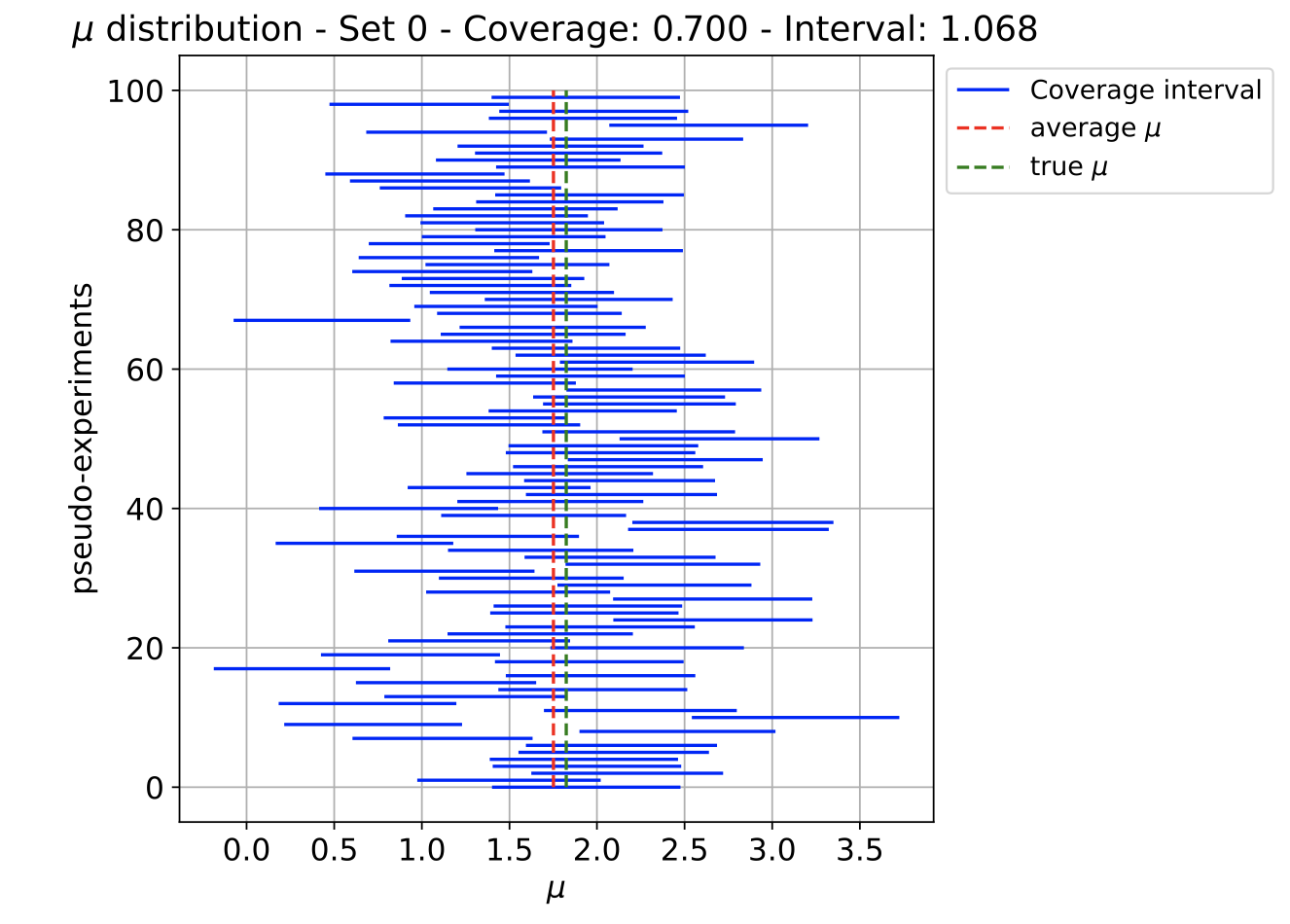

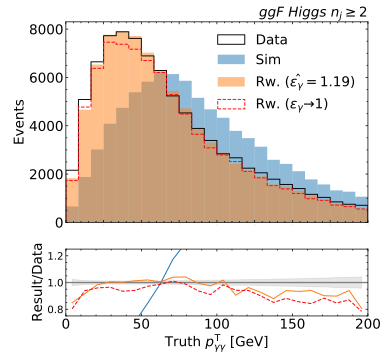

Unfolding is an important procedure in particle physics experiments which corrects for detector effects and provides differential cross section measurements that can be used for a number of downstream tasks, such as extracting fundamental physics parameters. Traditionally, unfolding is done by discretizing the target phase space into a finite number of bins and is limited in the number of unfolded variables. Recently, there have been a number of proposals to perform unbinned unfolding with machine learning. However, none of these methods (like most unfolding methods) allow for simultaneously constraining (profiling) nuisance parameters. We propose a new machine learning-based unfolding method that results in an unbinned differential cross section and can profile nuisance parameters. The machine learning loss function is the full likelihood function, based on binned inputs at detector-level. We first demonstrate the method with simple Gaussian examples and then show the impact on a simulated Higgs boson cross section measurement.

author="T. Golling, S. Klein, R. Mastandrea, B. Nachman",

title="{FETA: Flow-Enhanced Transportation for Anomaly Detection}",

eprint="2212.11285",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022"}

Resonant anomaly detection is a promising framework for model-independent searches for new particles. Weakly supervised resonant anomaly detection methods compare data with a potential signal against a template of the Standard Model (SM) background inferred from sideband regions. We propose a means to generate this background template that uses a flow-based model to create a mapping between high-fidelity SM simulations and the data. The flow is trained in sideband regions with the signal region blinded, and the flow is conditioned on the resonant feature (mass) such that it can be interpolated into the signal region. To illustrate this approach, we use simulated collisions from the Large Hadron Collider (LHC) Olympics Dataset. We find that our flow-constructed background method has competitive sensitivity with other recent proposals and can therefore provide complementary information to improve future searches.

author="M. F. Chen, B. Nachman, F. Sala",

title="{Resonant Anomaly Detection with Multiple Reference Datasets}",

eprint="2212.10579",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022"}

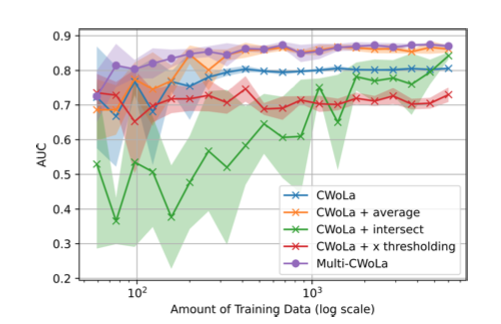

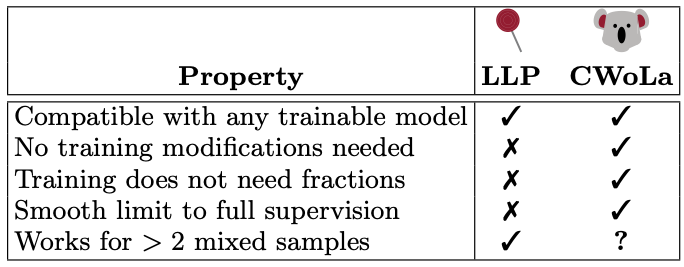

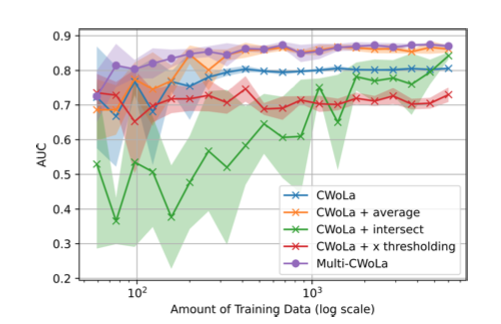

An important class of techniques for resonant anomaly detection in high energy physics builds models that can distinguish between reference and target datasets, where only the latter has appreciable signal. Such techniques, including Classification Without Labels (CWoLa) and Simulation Assisted Likelihood-free Anomaly Detection (SALAD), rely on a single reference dataset. They cannot take advantage of commonly-available multiple datasets and thus cannot fully exploit available information. In this work, we propose generalizations of CWoLa and SALAD for settings where multiple reference datasets are available, building on weak supervision techniques. We demonstrate improved performance in a number of settings with realistic and synthetic data. As an added benefit, our generalizations enable us to provide finite-sample guarantees, improving on existing asymptotic analyses.

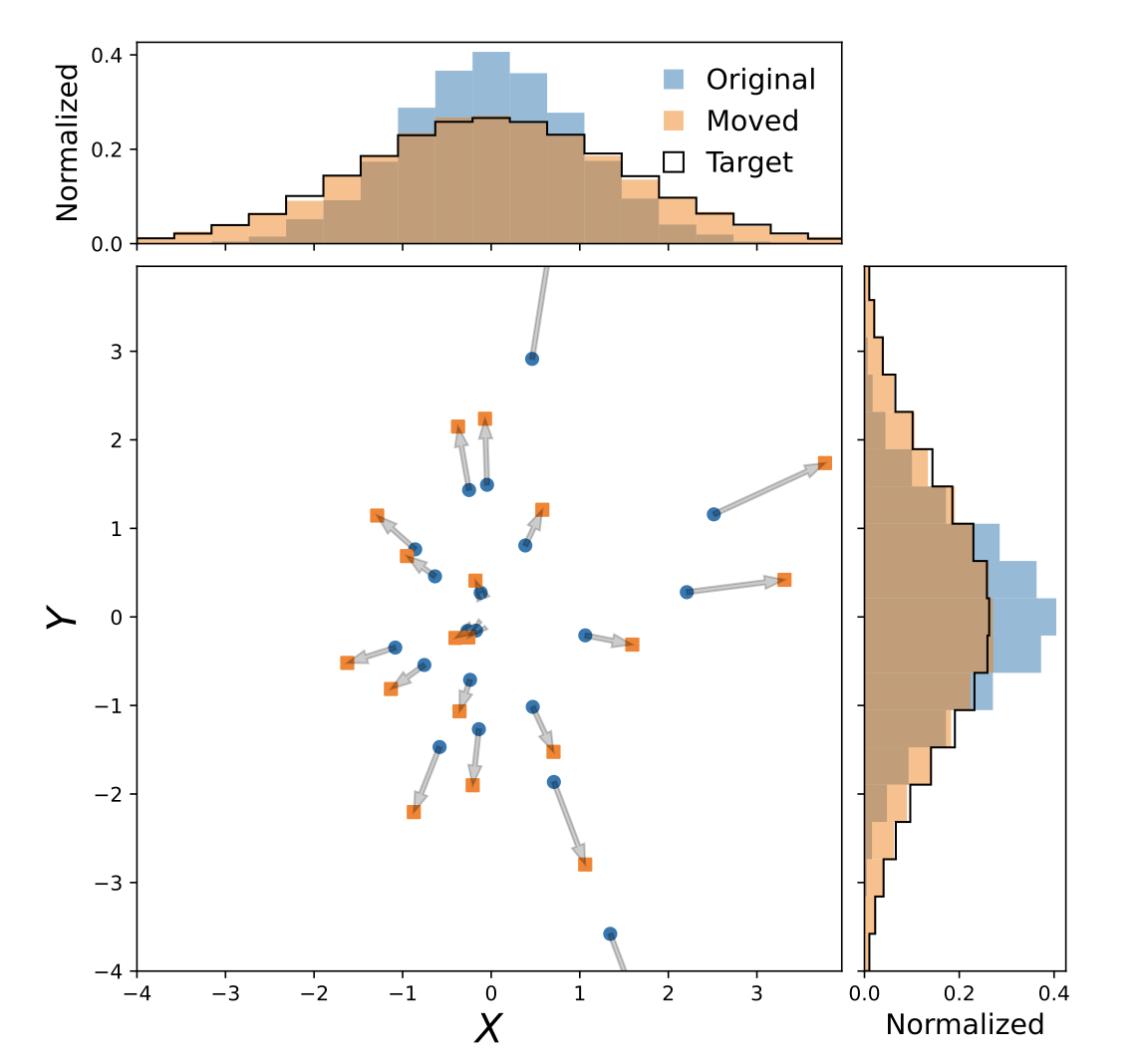

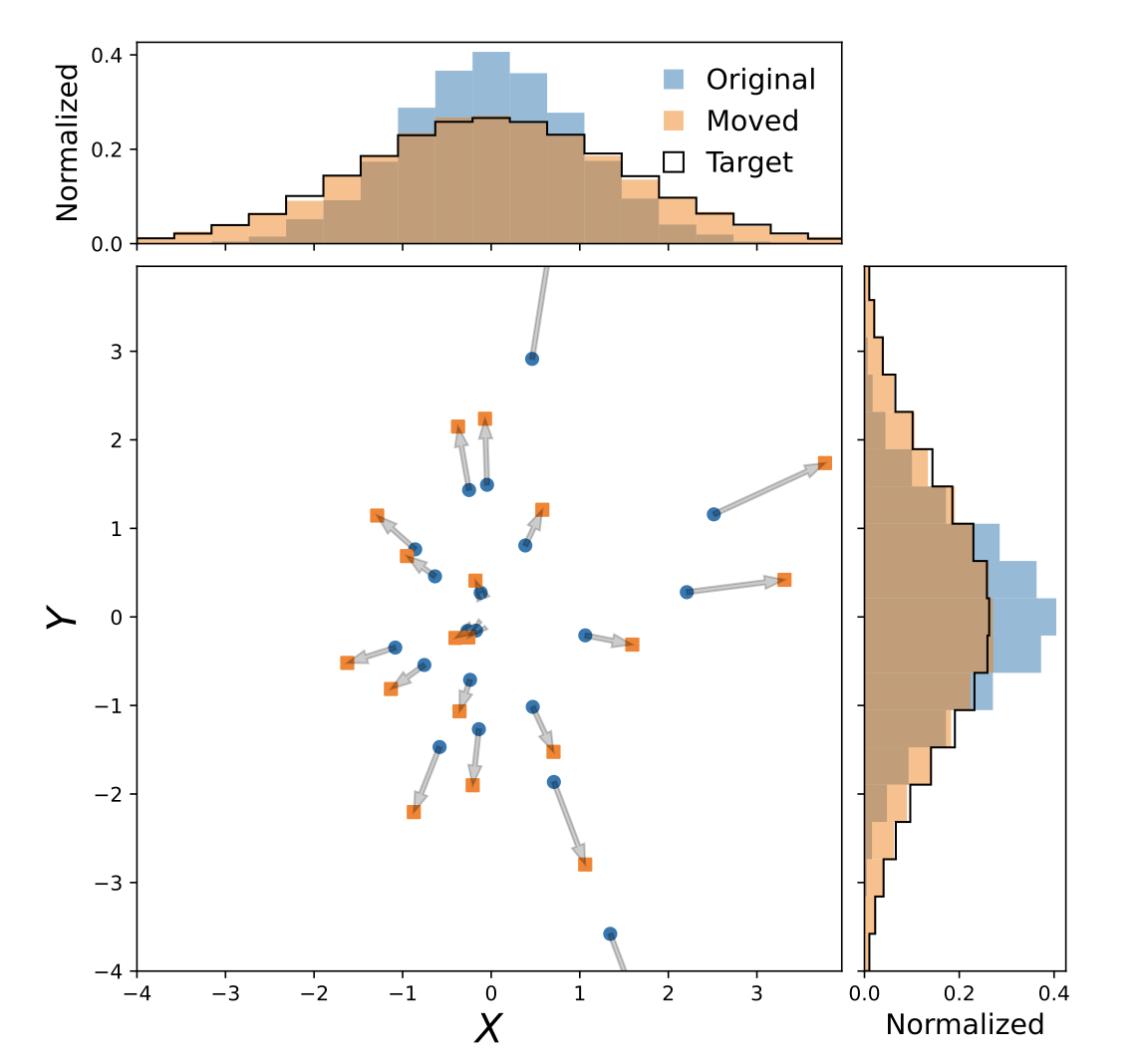

author="R. Mastandrea and B. Nachman",

title="{Efficiently Moving Instead of Reweighting Collider Events with Machine Learning}",

eprint="2212.06155",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022"}

There are many cases in collider physics and elsewhere where a calibration dataset is used to predict the known physics and/or noise of a target region of phase space. This calibration dataset usually cannot be used out-of-the-box but must be tweaked, often with conditional importance weights, to be maximally realistic. Using resonant anomaly detection as an example, we compare a number of alternative approaches based on transporting events with normalizing flows instead of reweighting them. We find that the accuracy of the morphed calibration dataset depends on the degree to which the transport task is set up to carry out optimal transport, which motivates future research into this area.

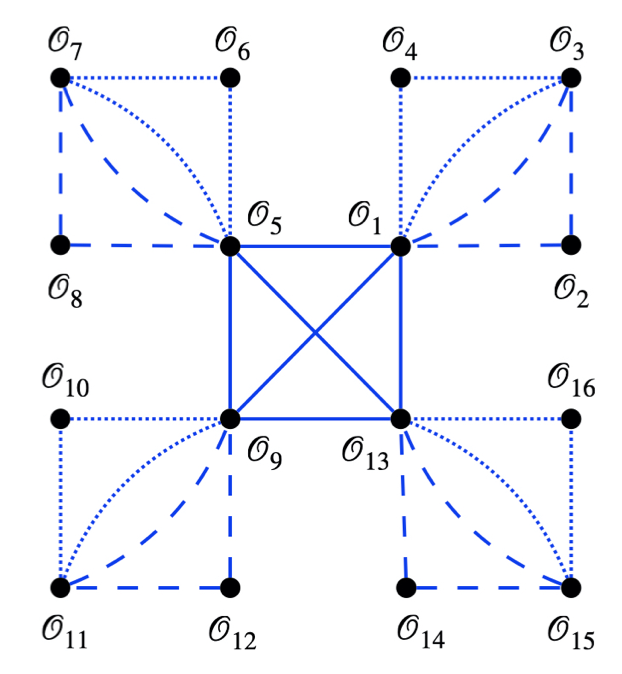

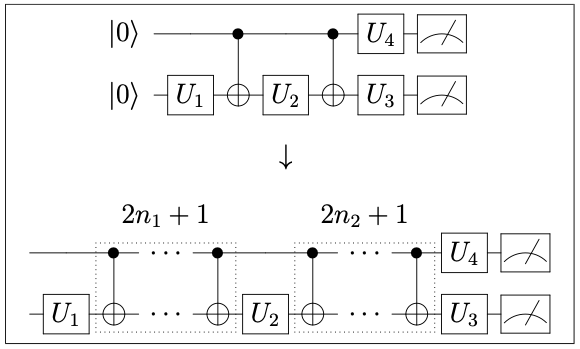

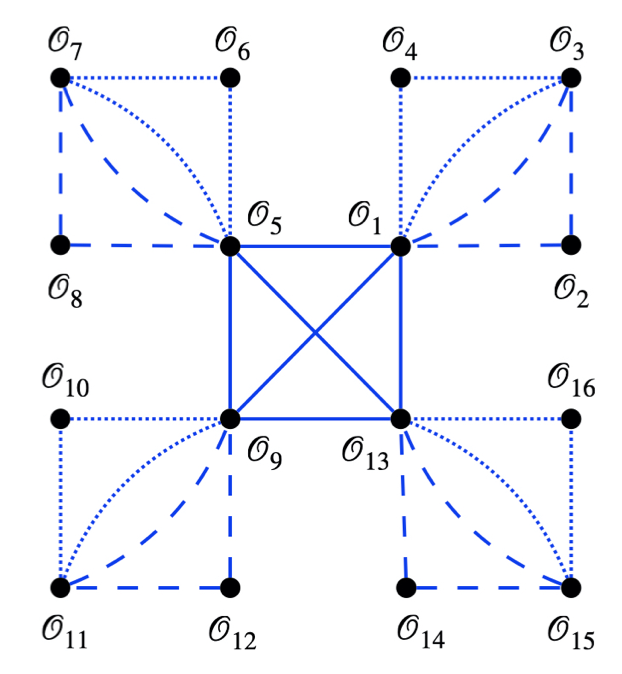

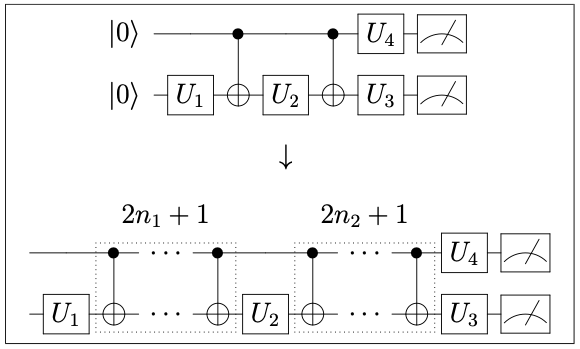

author="C. Kane, D. M. Grabowska, B. Nachman, C. W. Bauer",

title="{Efficient quantum implementation of 2+1 U(1) lattice gauge theories with Gauss law constraints}",

eprint="2211.10497",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022"}

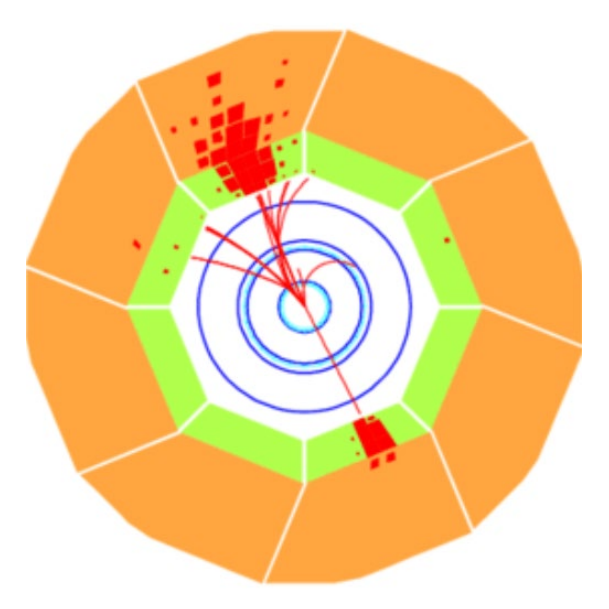

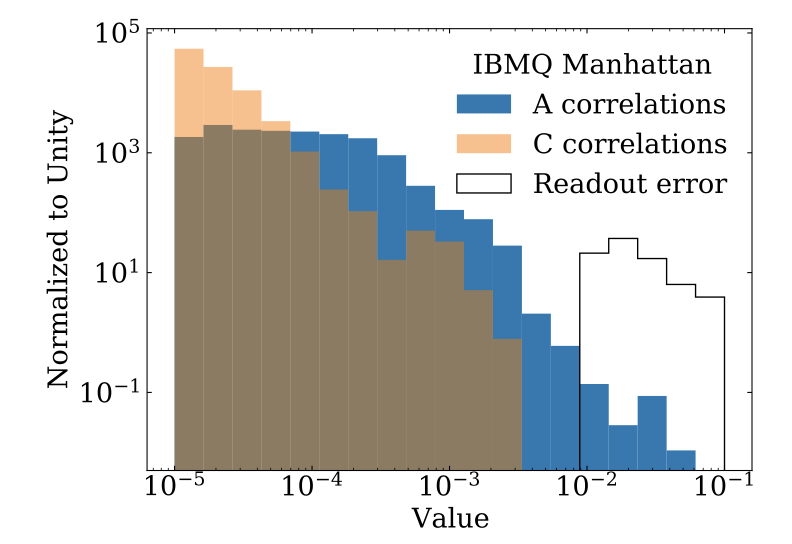

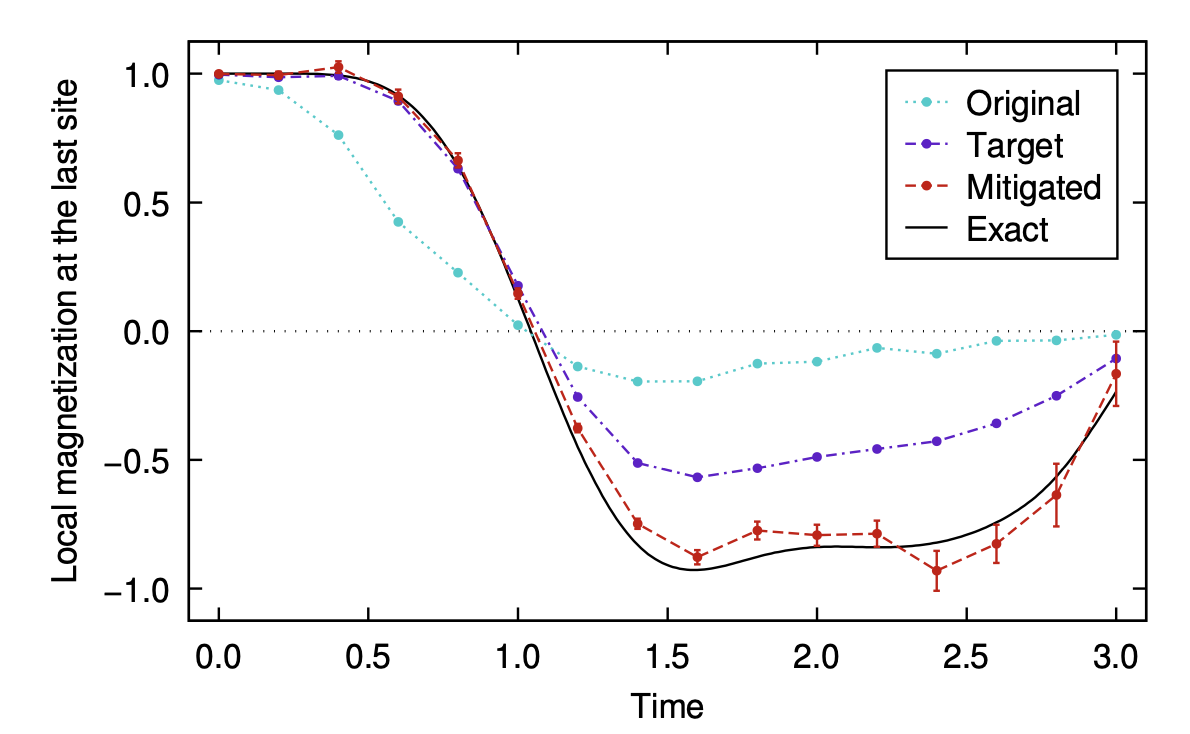

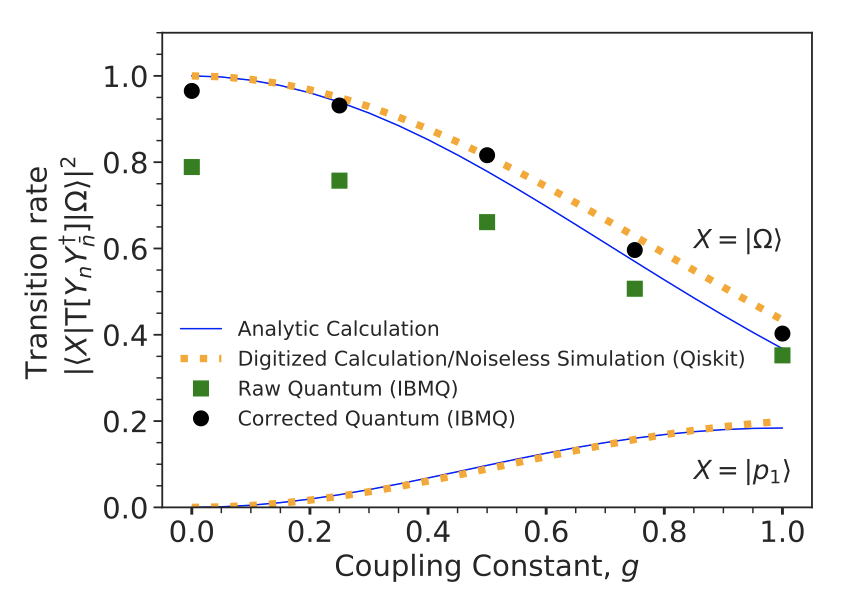

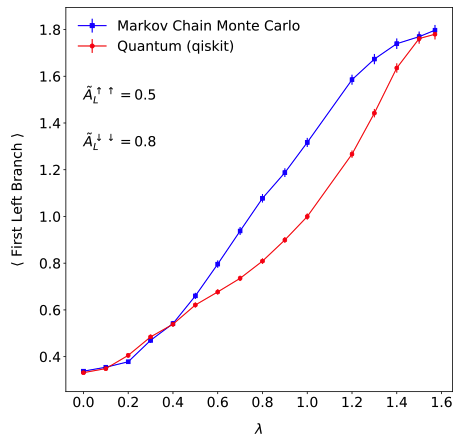

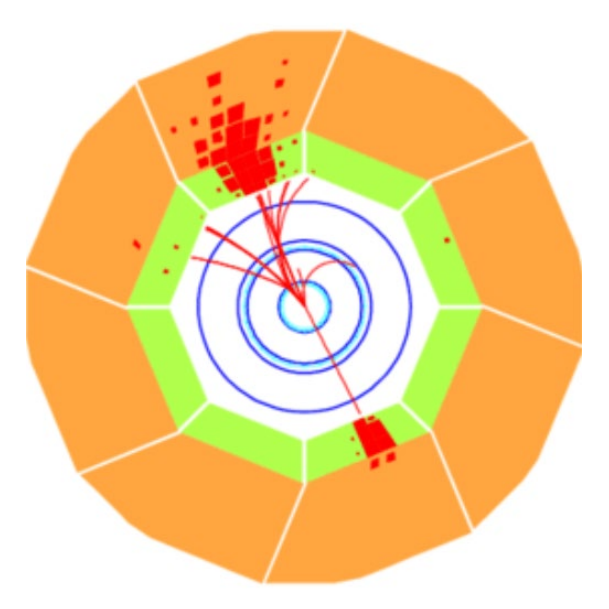

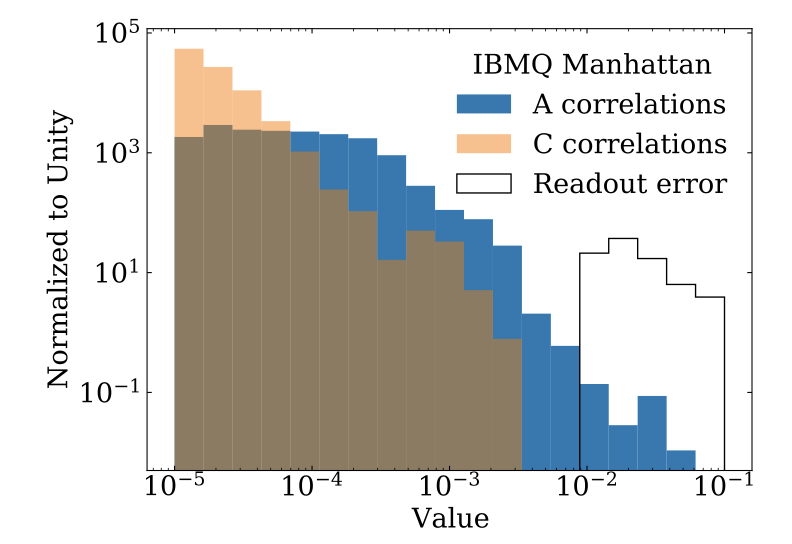

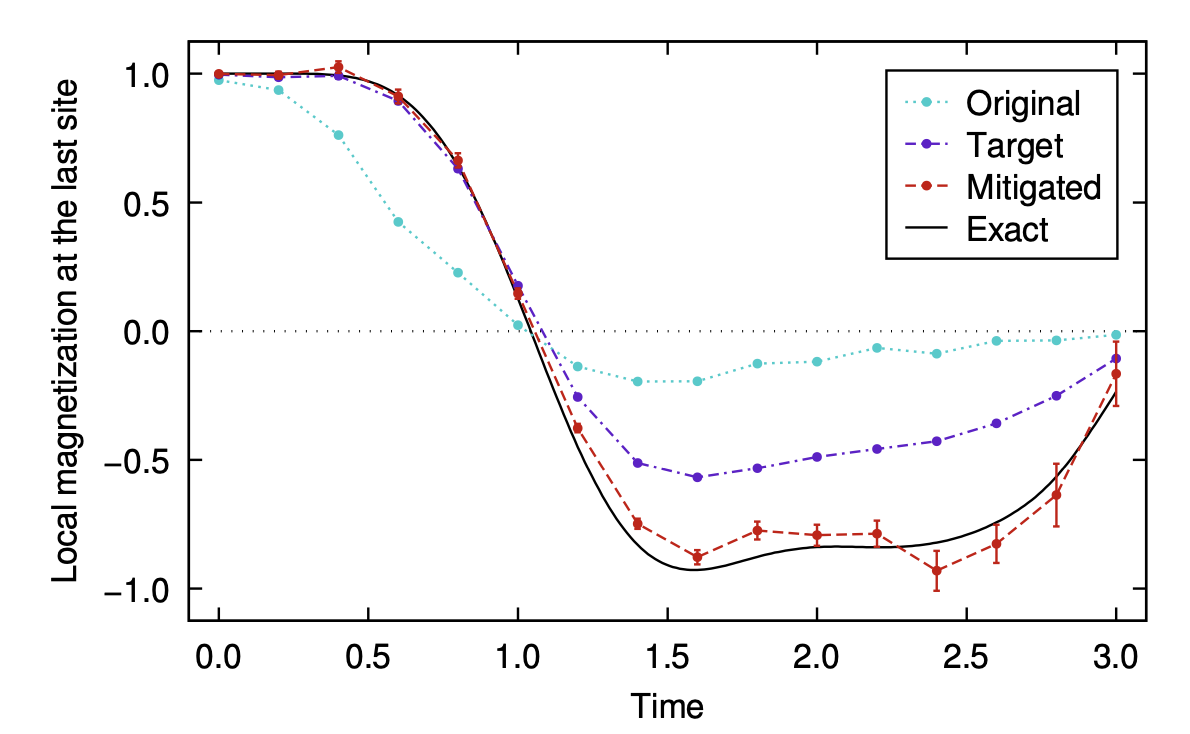

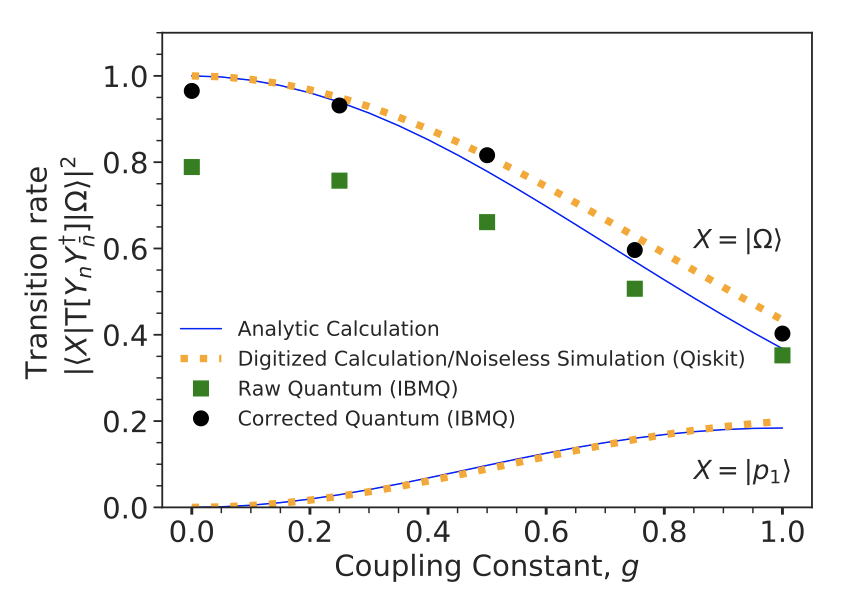

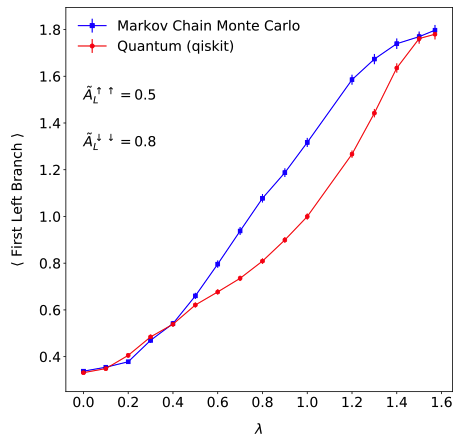

The study of real-time evolution of lattice quantum field theories using classical computers is known to scale exponentially with the number of lattice sites. Due to a fundamentally different computational strategy, quantum computers hold the promise of allowing for detailed studies of these dynamics from first principles. However, much like with classical computations, it is important that quantum algorithms do not have a cost that scales exponentially with the volume. Recently, it was shown how to break the exponential scaling of a naive implementation of a U(1) gauge theory in two spatial dimensions through an operator redefinition. In this work, we describe modifications to how operators must be sampled in the new operator basis to keep digitization errors small. We compare the precision of the energies and plaquette expectation value between the two operator bases and find they are comparable. Additionally, we provide an explicit circuit construction for the Suzuki-Trotter implementation of the theory using the Walsh function formalism. The gate count scaling is studied as a function of the lattice volume, for both exact circuits and approximate circuits where rotation gates with small arguments have been dropped. We study the errors from finite Suzuki-Trotter time-step, circuit approximation, and quantum noise in a calculation of an explicit observable using IBMQ superconducting qubit hardware. We find the gate count scaling for the approximate circuits can be further reduced by up to a power of the volume without introducing larger errors.
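
The finite time-step error of a Suzuki-Trotter product formula can be seen in a toy example. The sketch below is not the lattice gauge theory circuit from the paper. It assumes a single-qubit Hamiltonian H = X + Z, chosen only because both the exact evolution and each Trotter factor have closed forms, and it uses the first-order formula. All names are illustrative.

```python
import math

I2 = [[1, 0], [0, 1]]
X = [[0, 1], [1, 0]]
Z = [[1, 0], [0, -1]]

def matmul(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def exp_involution(P, theta):
    """exp(-i*theta*P) for a matrix with P*P = identity:
    cos(theta)*I - i*sin(theta)*P."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c * I2[i][j] - 1j * s * P[i][j] for j in range(2)] for i in range(2)]

def exact_evolution(t):
    """exp(-i*t*(X+Z)); since X and Z anticommute, (X+Z)/sqrt(2) squares to I."""
    r = math.sqrt(2.0)
    P = [[(X[i][j] + Z[i][j]) / r for j in range(2)] for i in range(2)]
    return exp_involution(P, r * t)

def trotter_evolution(t, n_steps):
    """First-order product formula: (exp(-i*X*t/n) * exp(-i*Z*t/n))**n."""
    dt = t / n_steps
    step = matmul(exp_involution(X, dt), exp_involution(Z, dt))
    U = I2
    for _ in range(n_steps):
        U = matmul(U, step)
    return U

def max_abs_diff(a, b):
    return max(abs(a[i][j] - b[i][j]) for i in range(2) for j in range(2))

t = 1.0
err_10 = max_abs_diff(trotter_evolution(t, 10), exact_evolution(t))
err_100 = max_abs_diff(trotter_evolution(t, 100), exact_evolution(t))
print(err_10, err_100)
```

For the first-order formula the error falls roughly in proportion to 1/n at fixed total time, which is why the number of time steps directly enters the gate count of a simulation.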

author="T. Gorordo, S. Knapen, B. Nachman, D. J. Robinson, A. Suresh",

title="{Geometry Optimization for Long-lived Particle Detectors}",

eprint="2211.08450",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022"}

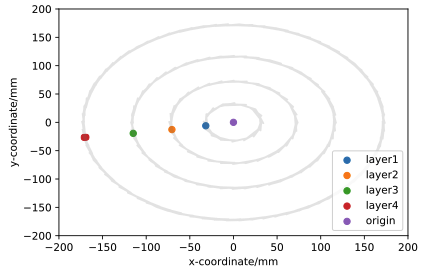

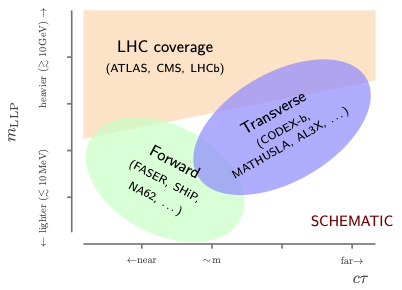

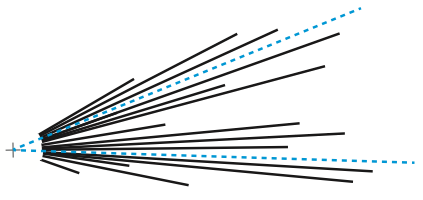

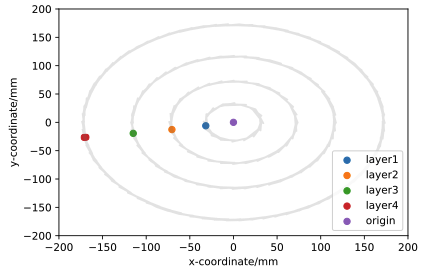

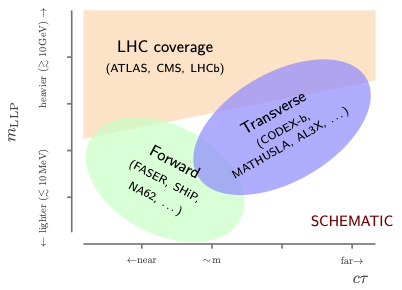

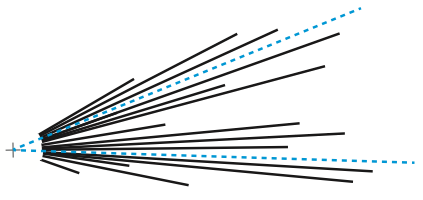

The proposed designs of many auxiliary long-lived particle (LLP) detectors at the LHC call for the instrumentation of a large surface area inside the detector volume, in order to reliably reconstruct tracks and LLP decay vertices. Taking the CODEX-b detector as an example, we provide a proof-of-concept optimization analysis that demonstrates the required instrumented surface area can be substantially reduced for many LLP models, while only marginally affecting the LLP signal efficiency. This optimization permits a significant reduction in cost and installation time, and may also inform the installation order for modular detector elements. We derive a branch-and-bound based optimization algorithm that permits highly computationally efficient determination of optimal detector configurations, subject to any specified LLP vertex and track reconstruction requirements. We outline the features of a newly-developed generalized simulation framework, for the computation of LLP signal efficiencies across a range of LLP models and detector geometries.

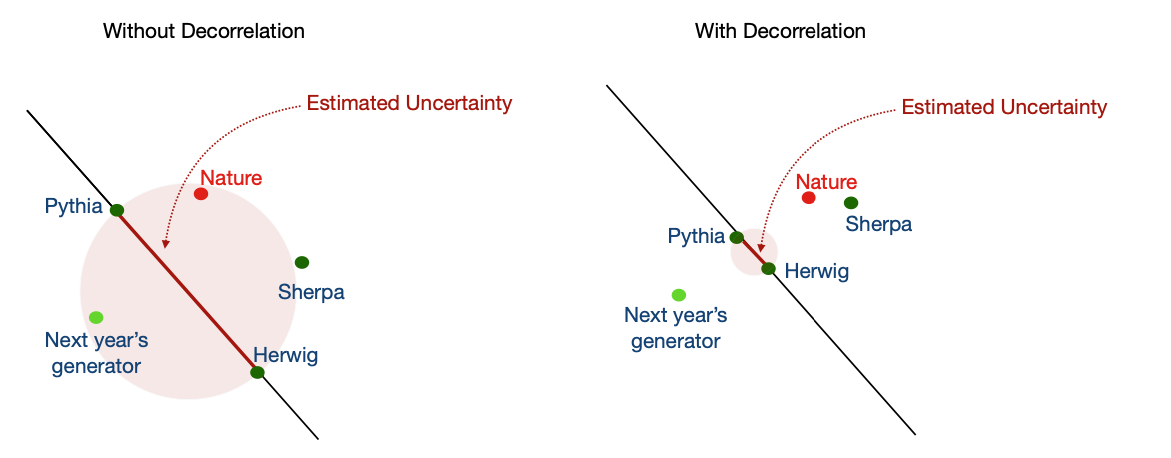

author="A. Ghosh, B. Nachman, T. Plehn, L. Shire, T. M.P. Tait, D. Whiteson",

title="{Statistical Patterns of Theory Uncertainties}",

eprint="2210.15167",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022"}

A comprehensive uncertainty estimation is vital for the precision program of the LHC. While experimental uncertainties are often described by stochastic processes and well-defined nuisance parameters, theoretical uncertainties lack such a description. We study uncertainty estimates for cross-section predictions based on scale variations across a large set of processes. We find patterns similar to a stochastic origin, with accurate uncertainties for processes mediated by the strong force, but a systematic underestimate for electroweak processes. We propose an improved scheme, based on the scale variation of reference processes, which reduces outliers in the mapping from leading order to next-to-leading-order in perturbation theory.

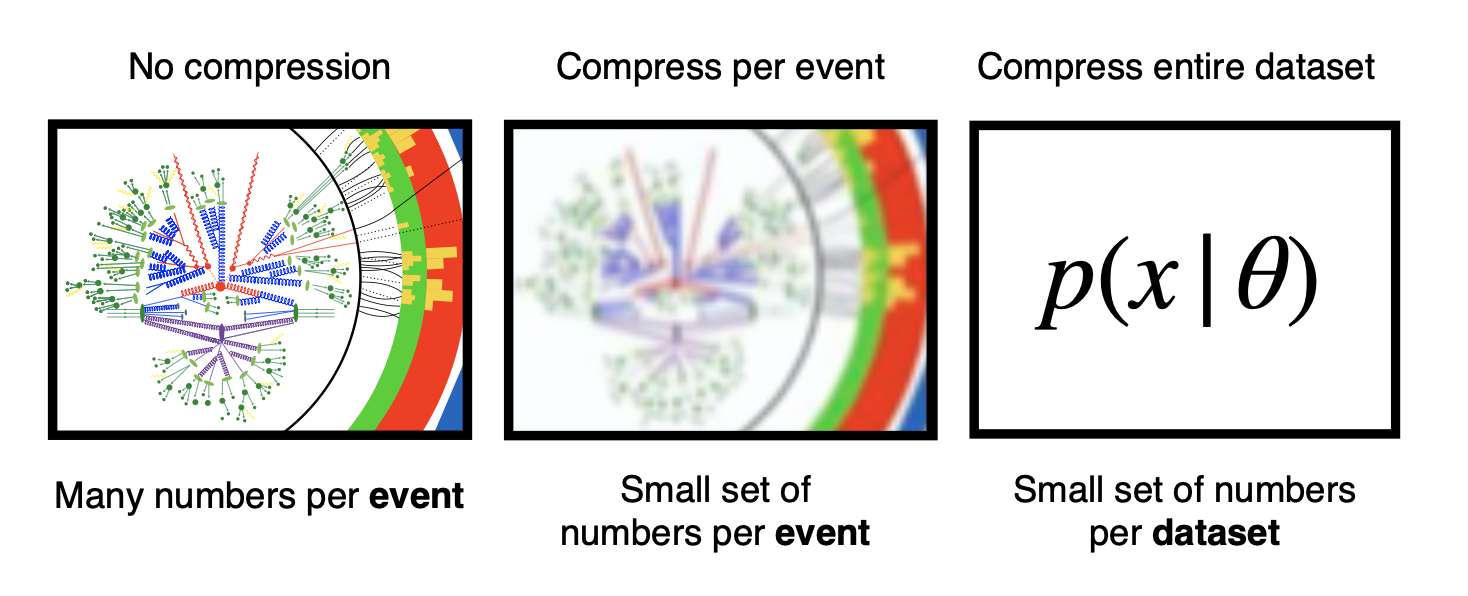

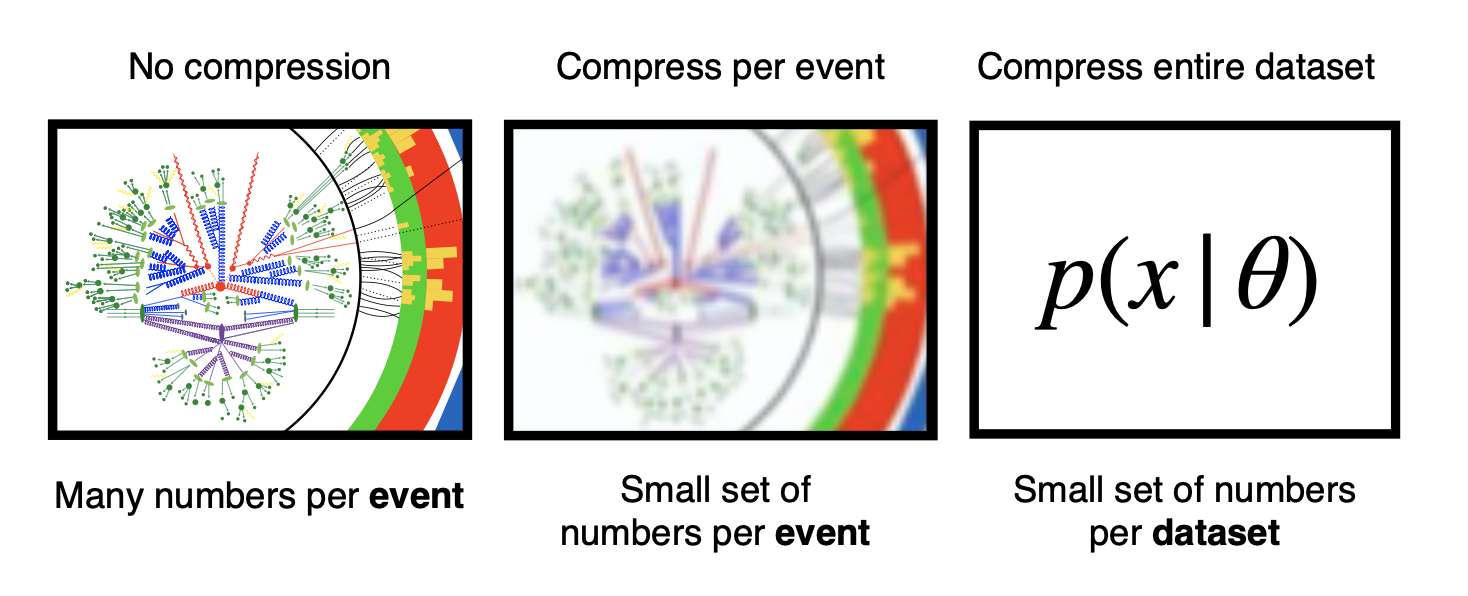

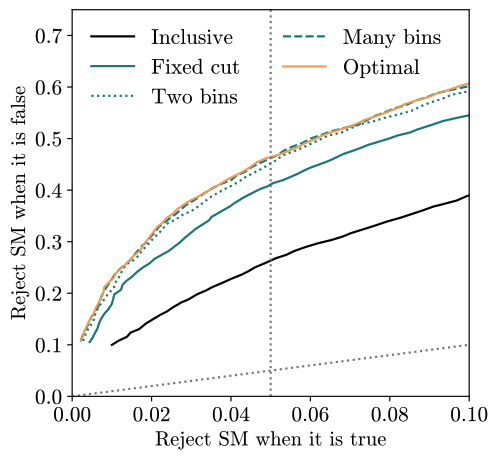

author="J. H. Collins, Y. Huang, S. Knapen, B. Nachman, D. Whiteson",

title="{Machine-Learning Compression for Particle Physics Discoveries}",

eprint="2210.11489",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

journal="NeurIPS Machine Learning and Physical Sciences",

year = "2022"}

In collider-based particle and nuclear physics experiments, data are produced at such extreme rates that only a subset can be recorded for later analysis. Typically, algorithms select individual collision events for preservation and store the complete experimental response. A relatively new alternative strategy is to additionally save a partial record for a larger subset of events, allowing for later specific analysis of a larger fraction of events. We propose a strategy that bridges these paradigms by compressing entire events for generic offline analysis but at a lower fidelity. An optimal-transport-based β-Variational Autoencoder (VAE) is used to automate the compression, and the hyperparameter β controls the compression fidelity. We introduce a new approach for multi-objective learning functions by simultaneously learning a VAE appropriate for all values of β through parameterization. We present an example use case, a di-muon resonance search at the Large Hadron Collider (LHC), where we show that simulated data compressed by our β-VAE has enough fidelity to distinguish distinct signal morphologies.

author="{V. D. Elvira, S. Gottlieb, O. Gutsche, B. Nachman (frontier conveners), et al.}",

title="{The Future of High Energy Physics Software and Computing}",

eprint="2210.05822",

archivePrefix = "arXiv",

primaryClass = "hep-ex",

year = "2022",

}

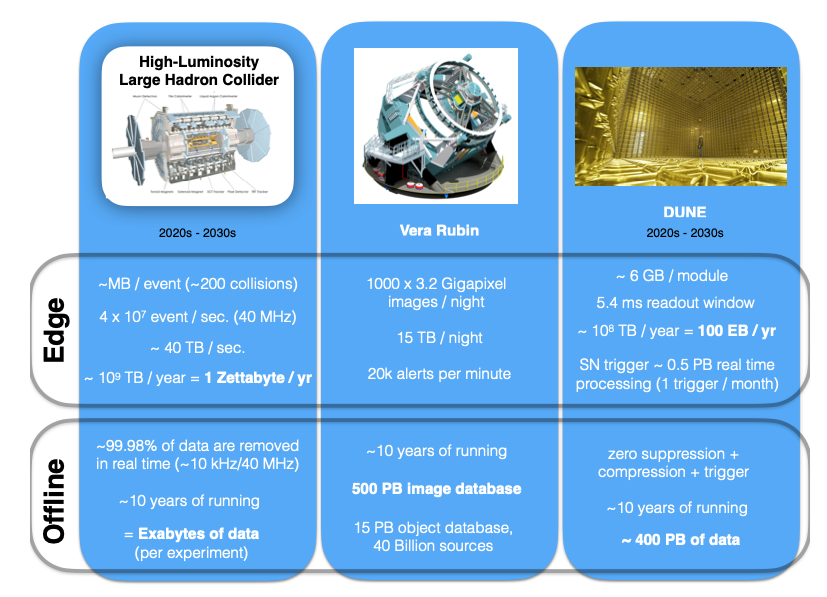

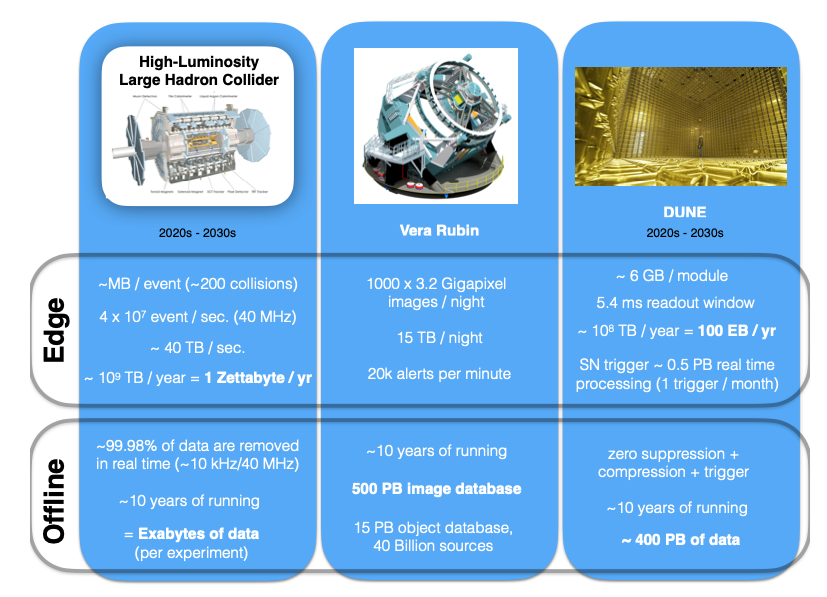

Software and Computing (S&C) are essential to all High Energy Physics (HEP) experiments and many theoretical studies. The size and complexity of S&C are now commensurate with that of experimental instruments, playing a critical role in experimental design, data acquisition/instrumental control, reconstruction, and analysis. Furthermore, S&C often plays a leading role in driving the precision of theoretical calculations and simulations. Within this central role in HEP, S&C has been immensely successful over the last decade. This report looks forward to the next decade and beyond, in the context of the 2021 Particle Physics Community Planning Exercise ("Snowmass") organized by the Division of Particles and Fields (DPF) of the American Physical Society.

author="{G. Kasieczka, R. Mastandrea, V. Mikuni, B. Nachman, M. Pettee, D. Shih}",

title="{Anomaly Detection under Coordinate Transformations}",

eprint="2209.06225",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022",

}

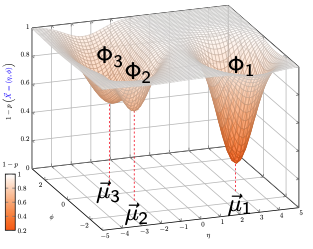

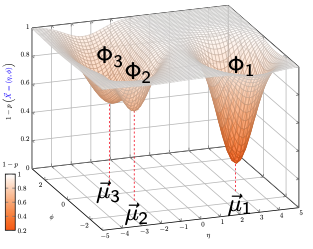

There is a growing need for machine learning-based anomaly detection strategies to broaden the search for Beyond-the-Standard-Model (BSM) physics at the Large Hadron Collider (LHC) and elsewhere. The first step of any anomaly detection approach is to specify observables and then use them to decide on a set of anomalous events. One common choice is to select events that have low probability density. It is a well-known fact that probability densities are not invariant under coordinate transformations, so the sensitivity can depend on the initial choice of coordinates. The broader machine learning community has recently connected coordinate sensitivity with anomaly detection and our goal is to bring awareness of this issue to the growing high energy physics literature on anomaly detection. In addition to analytical explanations, we provide numerical examples from simple random variables and from the LHC Olympics Dataset that show how using probability density as an anomaly score can lead to events being classified as anomalous or not depending on the coordinate frame.
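
The coordinate dependence of density-based anomaly scores can be shown with a toy example that is not taken from the paper. It assumes X uniform on (0, 1) and the change of variables Y = X^2, which gives p_Y(y) = 1 / (2 * sqrt(y)) by the usual Jacobian rule. The two "events" are hypothetical.

```python
import math

def density_x(x):
    """X ~ Uniform(0, 1): every point in (0, 1) has the same density."""
    return 1.0

def density_y(y):
    """Density of Y = X**2: p_Y(y) = p_X(sqrt(y)) * |dx/dy| = 1 / (2 * sqrt(y))."""
    return 1.0 / (2.0 * math.sqrt(y))

# Two hypothetical events, described first in x and then in y = x**2.
x_a, x_b = 0.1, 0.9
y_a, y_b = x_a ** 2, x_b ** 2

# In the x coordinate neither event is more anomalous than the other.
same_in_x = density_x(x_a) == density_x(x_b)

# In the y coordinate, event b sits at much lower density than event a,
# so a "low density = anomalous" rule would now single out event b.
b_more_anomalous_in_y = density_y(y_b) < density_y(y_a)

print(same_in_x, b_more_anomalous_in_y)
```

The events themselves are unchanged. Only the coordinate used to describe them differs, which is enough to change their ranking under a low-density selection.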

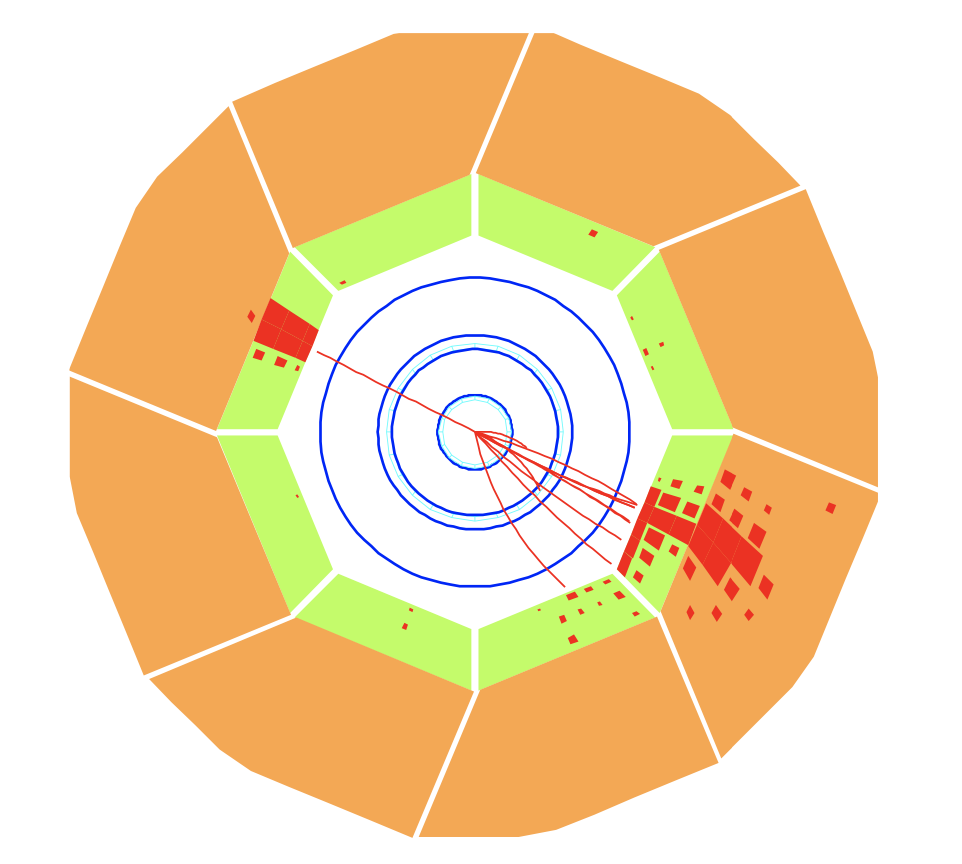

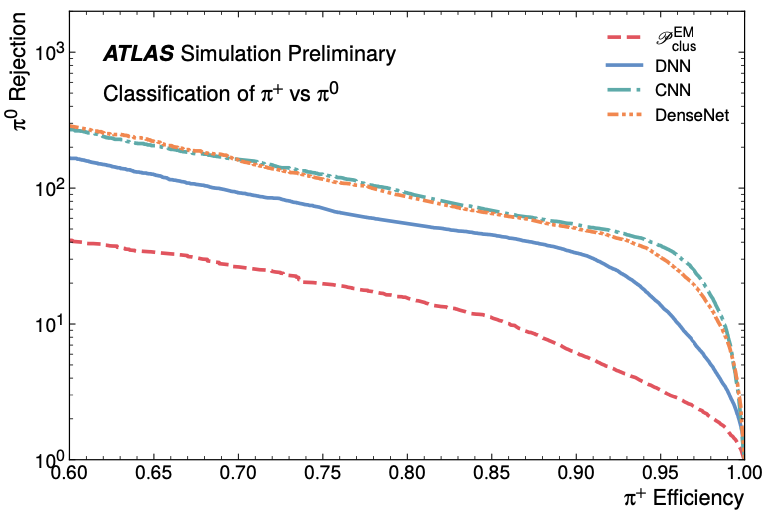

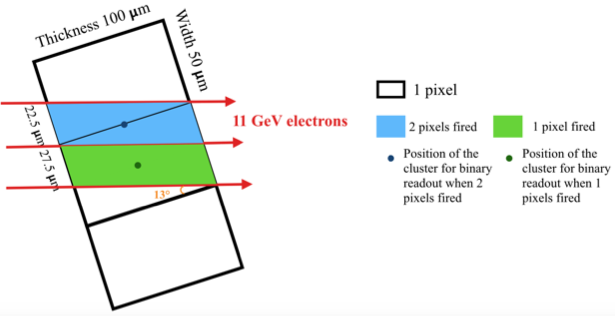

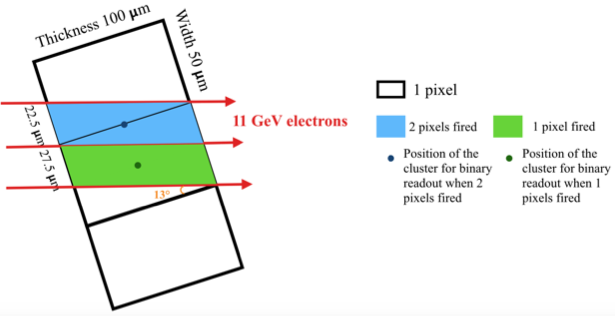

author="{ATLAS Collaboration}",

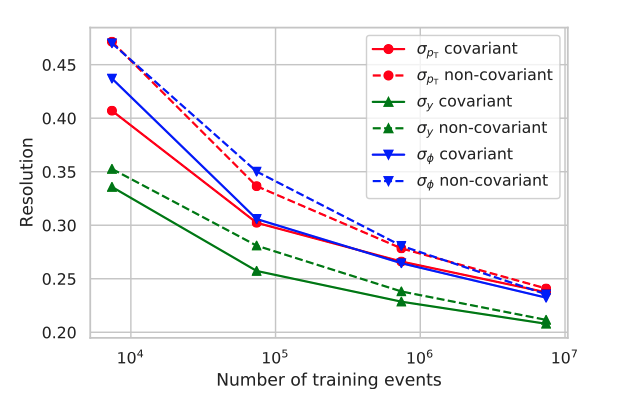

title="{Point Cloud Deep Learning Methods for Pion Reconstruction in the ATLAS Experiment}",

journal = "ATL-PHYS-PUB-2022-040",

url = "https://cds.cern.ch/record/2825379",

year = "2022",

}

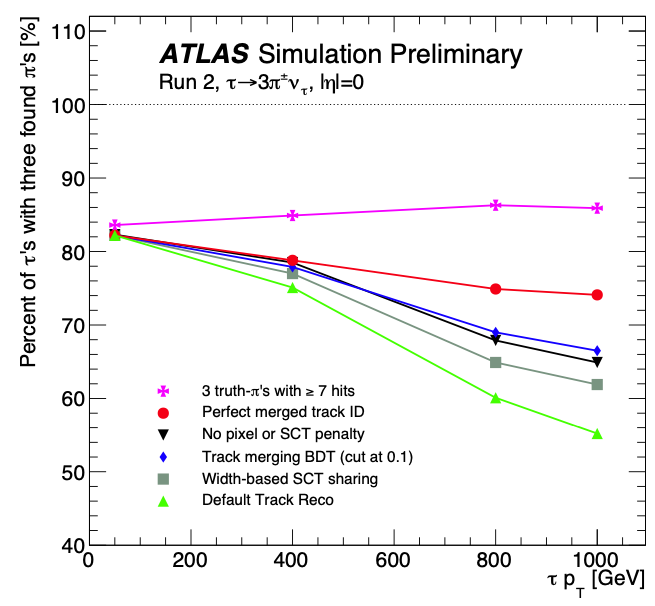

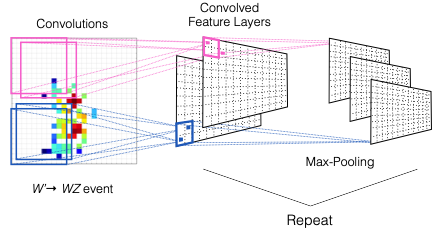

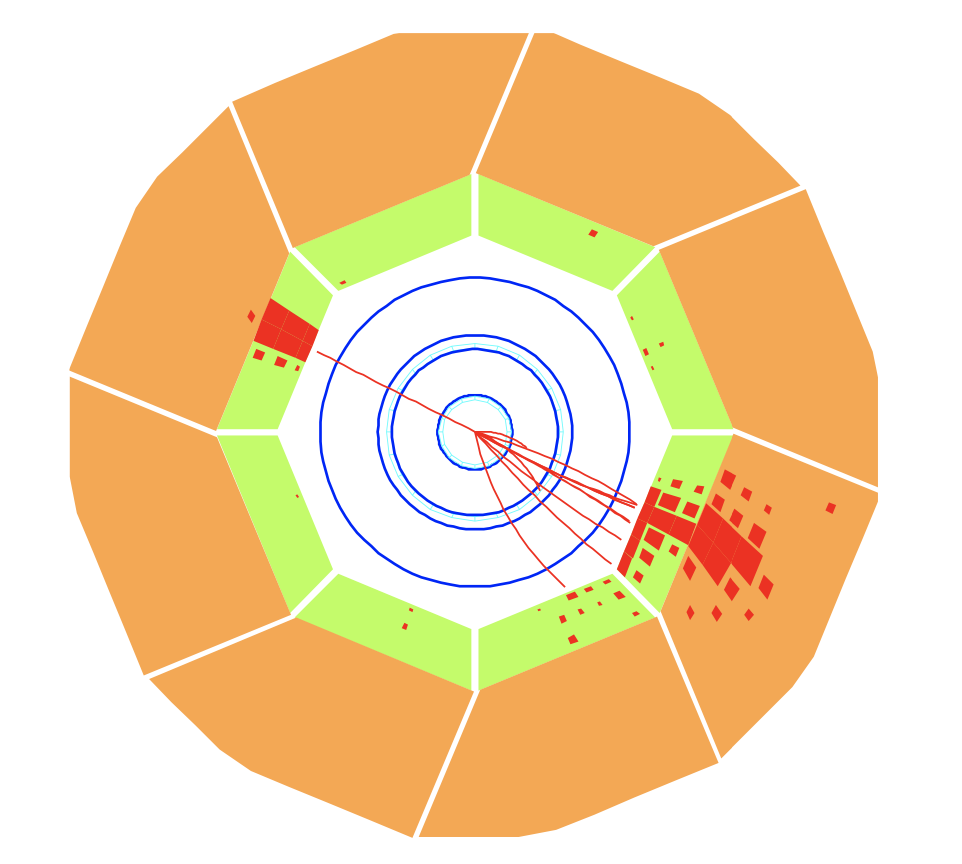

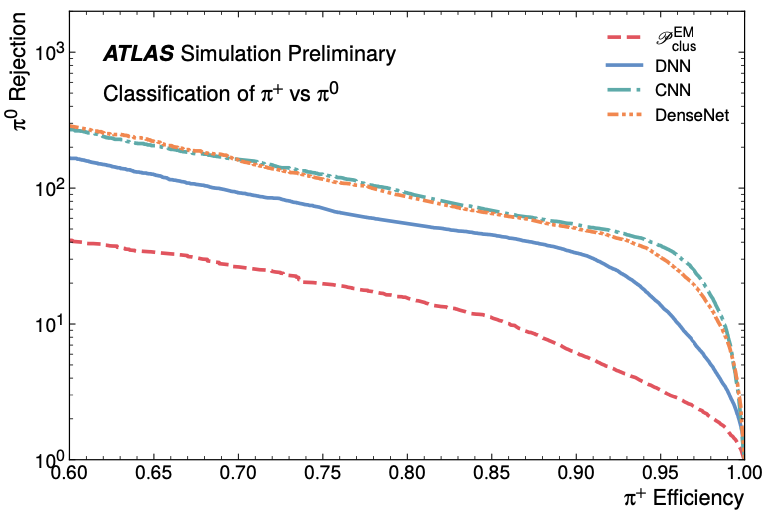

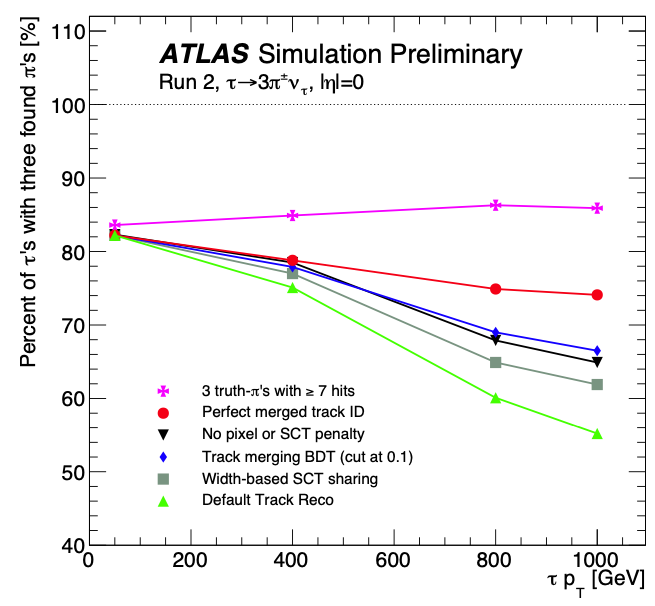

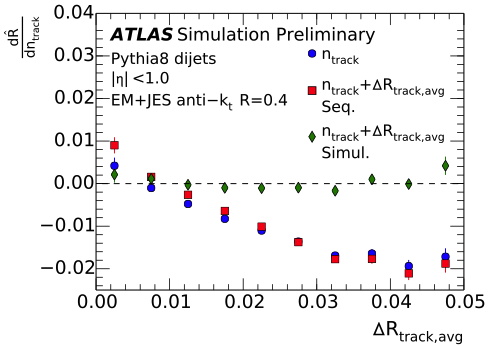

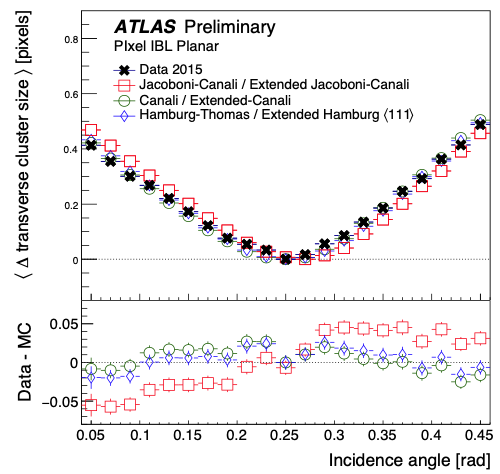

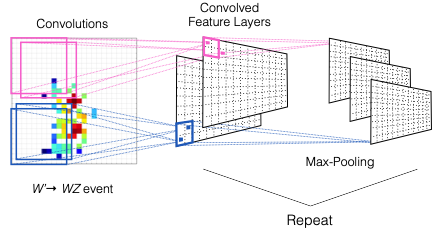

The reconstruction and calibration of hadronic final states in the ATLAS detector present complex experimental challenges. For isolated pions in particular, classifying $\pi^0$ versus charged pions and calibrating pion energy deposits in the ATLAS calorimeters are key steps in the hadronic reconstruction process. The baseline methods for local hadronic calibration were optimized early in the lifetime of the ATLAS experiment. This note presents a significant improvement over existing techniques using machine learning methods that do not require the input variables to be projected onto a fixed and regular grid. Instead, Transformer, Deep Sets, and Graph Neural Network architectures are used to process calorimeter clusters and particle tracks as point clouds, or a collection of data points representing a three-dimensional object in space. This note demonstrates the performance of these new approaches as an important step towards a low-level hadronic reconstruction scheme that fully takes advantage of deep learning to improve its performance.

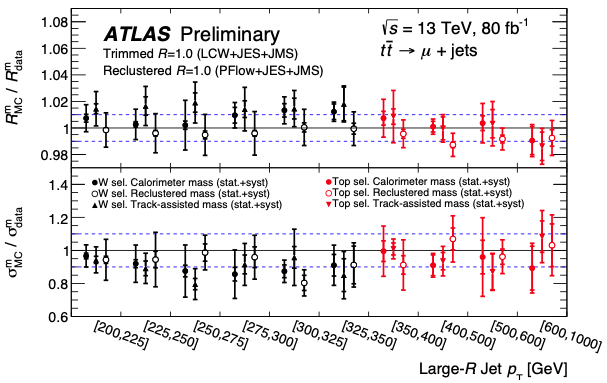

author="{ATLAS Collaboration}",

title="{Constituent-Based Top-Quark Tagging with the ATLAS Detector}",

journal = "ATL-PHYS-PUB-2022-039",

url = "https://cds.cern.ch/record/2825328",

year = "2022",

}

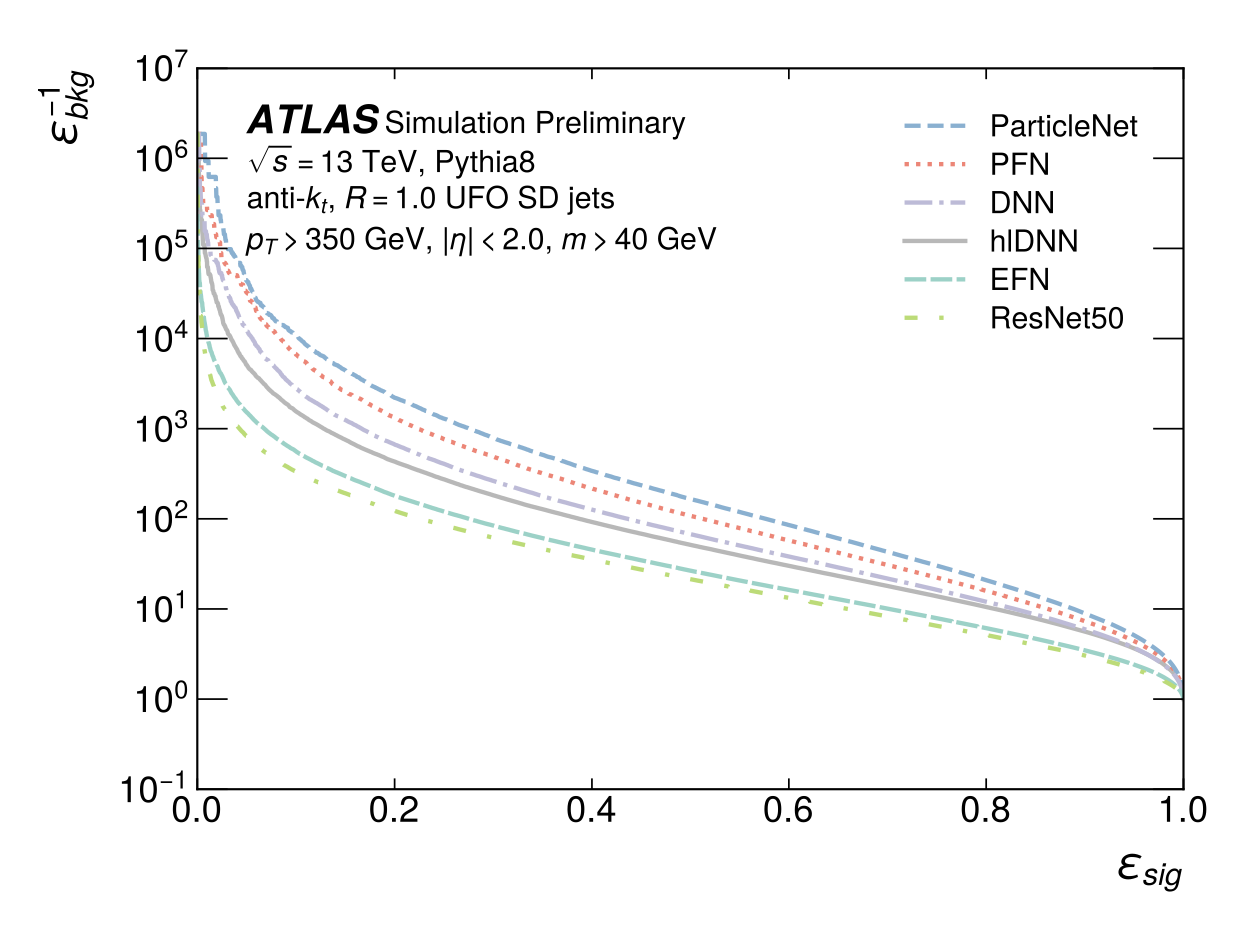

This note presents the performance of constituent-based jet taggers on large-radius boosted top quark jets reconstructed from optimized jet input objects in simulated collisions at $\sqrt{s} = 13$ TeV. Several taggers that use the full kinematic information of the jet constituents are tested and compared to a tagger which relies on high-level summary quantities similar to the taggers used by ATLAS in Runs 1 and 2. Several constituent-based taggers are found to outperform the tagger based on high-level quantities, with the best achieving a factor of two increase in background rejection across the kinematic range. To enable further development and study, the data set described in this note is made publicly available.
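
For readers unfamiliar with the metric, the sketch below shows one way to compute background rejection. It assumes the common convention that rejection is the inverse of the background efficiency at a fixed signal efficiency. The function name and the toy scores are hypothetical, and the note's own working points are not reproduced here.

```python
def background_rejection(sig_scores, bkg_scores, sig_eff):
    """Return 1 / (background efficiency) at the score threshold that keeps
    a fraction sig_eff of the signal jets (higher score = more signal-like)."""
    ranked = sorted(sig_scores, reverse=True)
    n_keep = max(1, int(round(sig_eff * len(ranked))))
    threshold = ranked[n_keep - 1]  # lowest signal score that is still kept
    n_bkg_pass = sum(1 for s in bkg_scores if s >= threshold)
    if n_bkg_pass == 0:
        return float("inf")  # no background survives in this finite sample
    return len(bkg_scores) / n_bkg_pass

# Toy tagger outputs (assumed): four signal jets and four background jets.
sig = [0.9, 0.8, 0.7, 0.6]
bkg = [0.75, 0.3, 0.2, 0.1]

# Keeping 75% of the signal sets the threshold at 0.7; one of four
# background jets passes, so the rejection is 4.
rejection = background_rejection(sig, bkg, 0.75)
print(rejection)
```

Under this convention, a factor of two increase in rejection means that half as many background jets pass the selection at the same signal efficiency.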

author="D. M. Grabowska, C. Kane, B. Nachman, C. W. Bauer",

title="{Overcoming exponential scaling with system size in Trotter-Suzuki implementations of constrained Hamiltonians: 2+1 U(1) lattice gauge theories}",

eprint="2208.03333",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022"}

For many quantum systems of interest, the classical computational cost of simulating their time evolution scales exponentially in the system size. At the same time, quantum computers have been shown to allow for simulations of some of these systems using resources that scale polynomially with the system size. Given the potential for using quantum computers for simulations that are not feasible using classical devices, it is paramount that one studies the scaling of quantum algorithms carefully. This work identifies a term in the Hamiltonian of a class of constrained systems that naively requires quantum resources that scale exponentially in the system size. An important example is a compact U(1) gauge theory on lattices with periodic boundary conditions. Imposing the magnetic Gauss' law a priori introduces a constraint into that Hamiltonian that naively results in an exponentially deep circuit. A method is then developed that reduces this scaling to polynomial in the system size, using a redefinition of the operator basis. An explicit construction, as well as the scaling of the associated computational cost, of the matrices defining the operator basis is given.

author="B. Nachman and S. Prestel",

title="{Morphing parton showers with event derivatives}",

eprint="2208.02274",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022"}

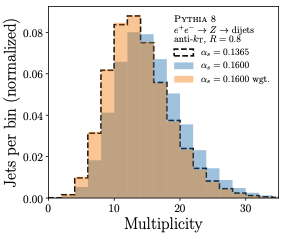

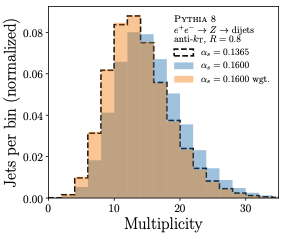

We develop EventMover, a differentiable parton shower event generator. This tool generates high- and variable-length scattering events that can be moved with simulation derivatives to change the value of the scale $\Lambda_{\mathrm{QCD}}$ defining the strong coupling constant, without introducing statistical variations between samples. To demonstrate the potential for EventMover, we compare the output of the simulation with electron-positron data to show how one could fit $\Lambda_{\mathrm{QCD}}$ with only a single event sample. This is a critical step towards a fully differentiable event generator for particle and nuclear physics.

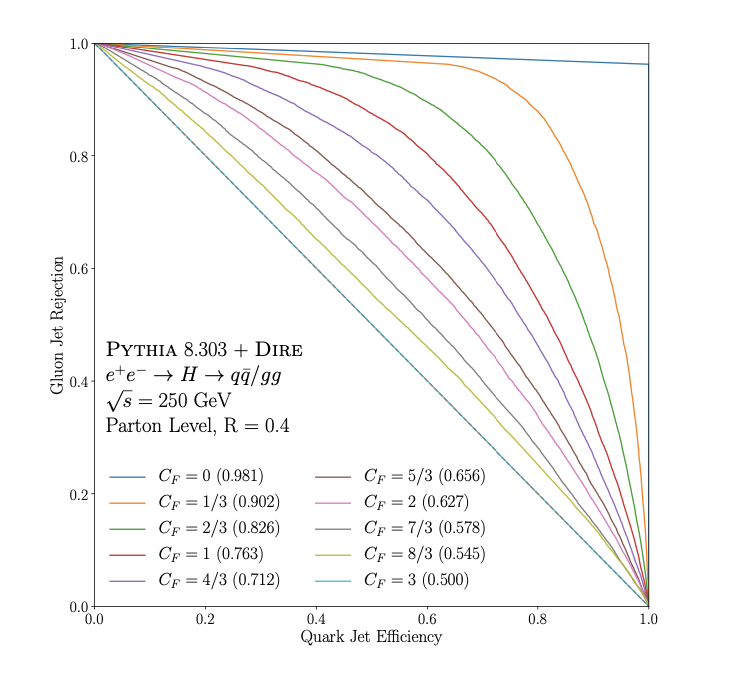

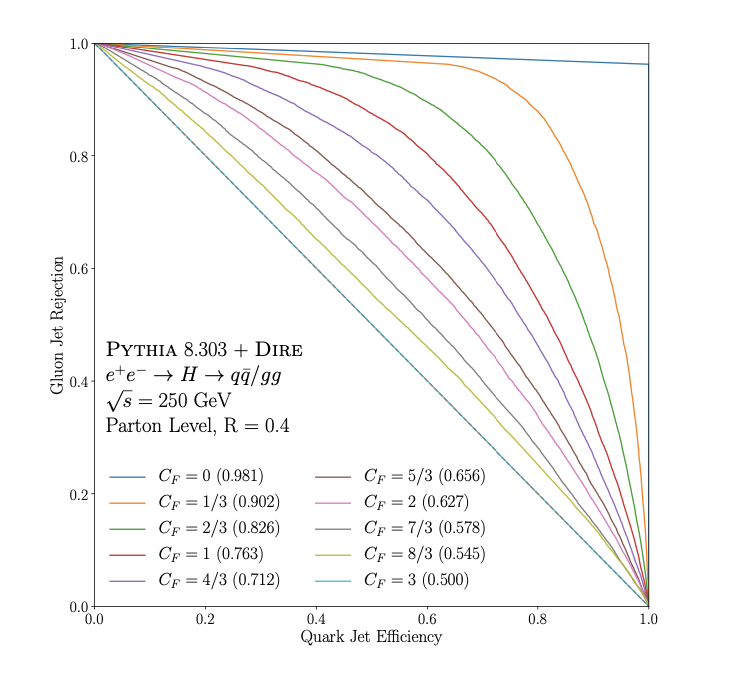

author="S. Bright-Thonney, I. Moult, B. Nachman, S. Prestel",

title="{Systematic Quark/Gluon Identification with Ratios of Likelihoods}",

eprint="2207.12411",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022",

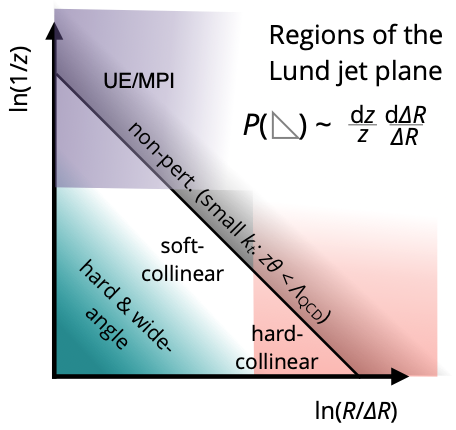

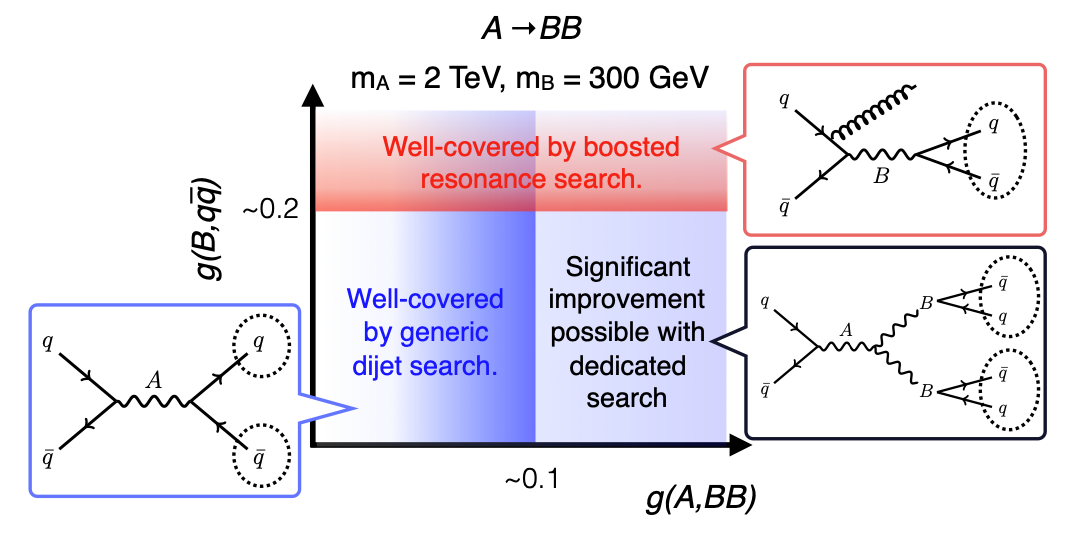

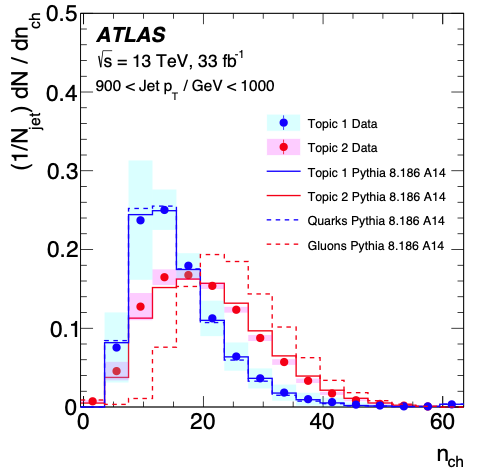

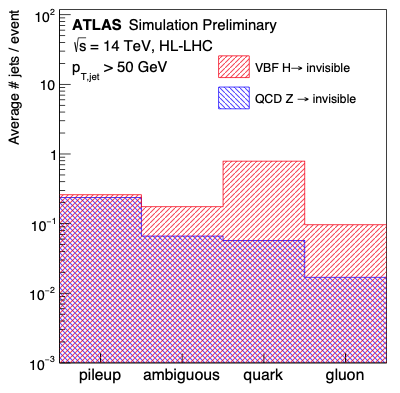

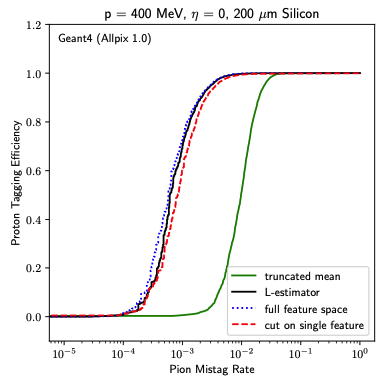

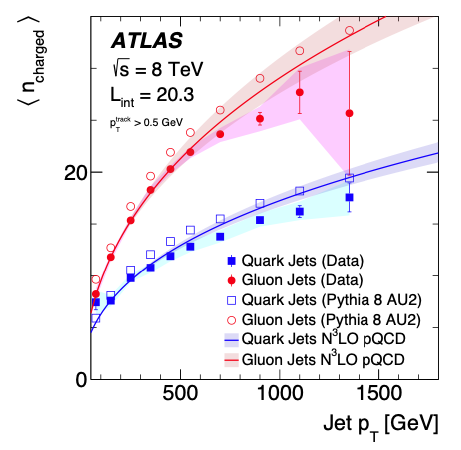

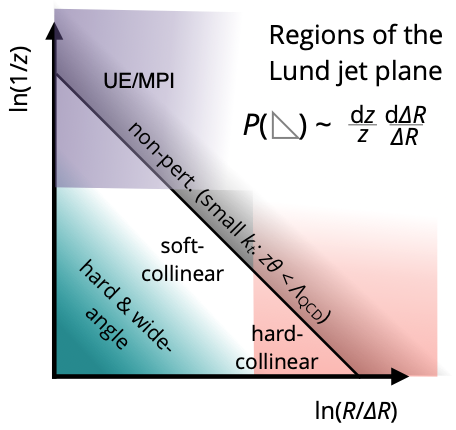

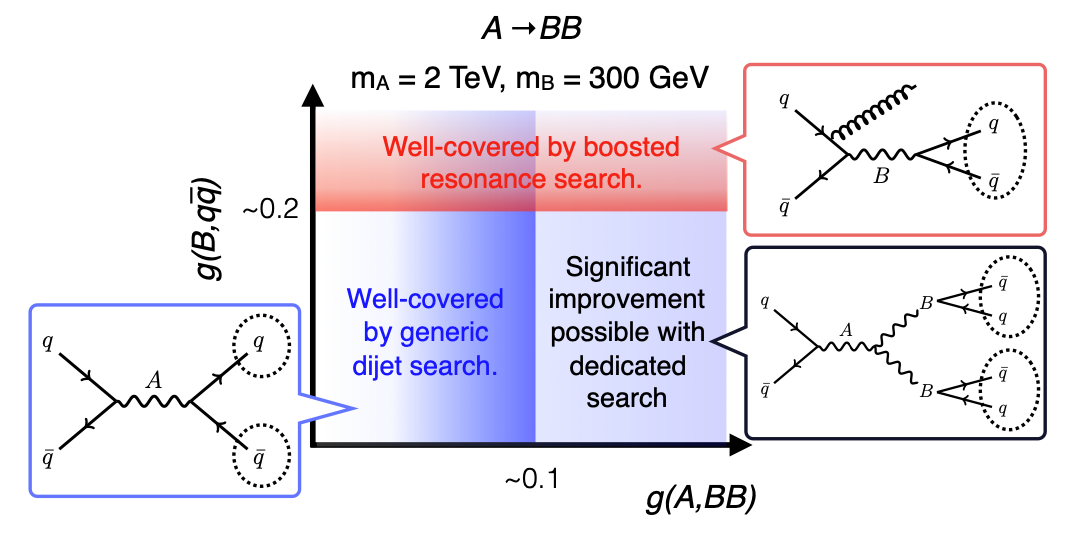

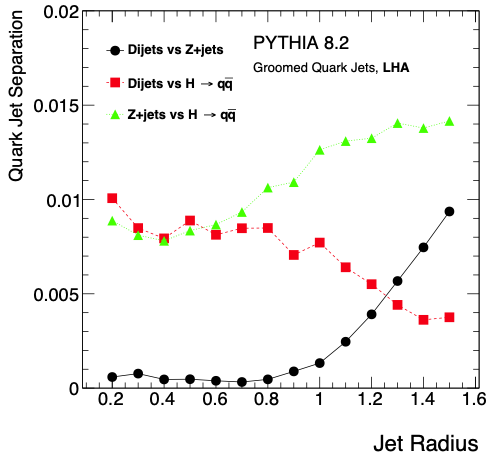

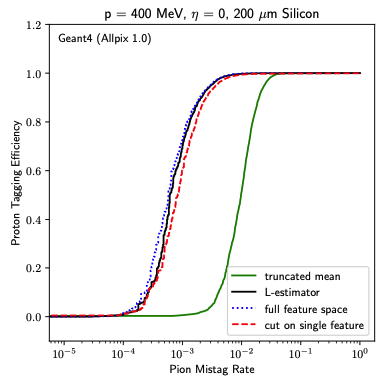

Discriminating between quark- and gluon-initiated jets has long been a central focus of jet substructure, leading to the introduction of numerous observables and calculations to high perturbative accuracy. At the same time, there have been many attempts to fully exploit the jet radiation pattern using tools from statistics and machine learning. We propose a new approach that combines a deep analytic understanding of jet substructure with the optimality promised by machine learning and statistics. After specifying an approximation to the full emission phase space, we show how to construct the optimal observable for a given classification task. This procedure is demonstrated for the case of quark and gluon jets, where we show how to systematically capture sub-eikonal corrections in the splitting functions, and prove that a linear combination of weighted multiplicities is the optimal observable. In addition to providing a new and powerful framework for systematically improving jet substructure observables, we demonstrate the performance of several quark versus gluon jet tagging observables in parton-level Monte Carlo simulations, and find that they perform at or near the level of a deep neural network classifier. Combined with the rapid recent progress in the development of higher order parton showers, we believe that our approach provides a basis for systematically exploiting subleading effects in jet substructure analyses at the Large Hadron Collider (LHC) and beyond.

author="{H1 Collaboration}",

title="{Machine learning-assisted measurement of multi-differential lepton-jet correlations in deep-inelastic scattering with the H1 detector}",

journal = "H1prelim-22-031",

url = "https://www-h1.desy.de/h1/www/publications/htmlsplit/H1prelim-22-031.long.html",

year = "2022",

}

The lepton-jet momentum imbalance in deep inelastic scattering events offers a useful set of observables for unifying collinear and transverse-momentum-dependent frameworks for describing high energy Quantum Chromodynamics (QCD) interactions. We recently performed a measurement of this imbalance in the laboratory frame using positron-proton collisions from HERA Run II [1]. With a new machine learning method, the measurement was performed simultaneously and unbinned in eight dimensions. The results in Ref. [1] were presented projected onto four key observables. This paper extends those results by showing the multi-differential nature of the unfolded result. In particular, we present lepton-jet correlation observables differentially in kinematic properties of the scattering process, $Q^2$ and $y$. We compare these results with parton shower Monte Carlo predictions as well as calculations from perturbative QCD and from a Transverse Momentum Dependent (TMD) factorization framework.

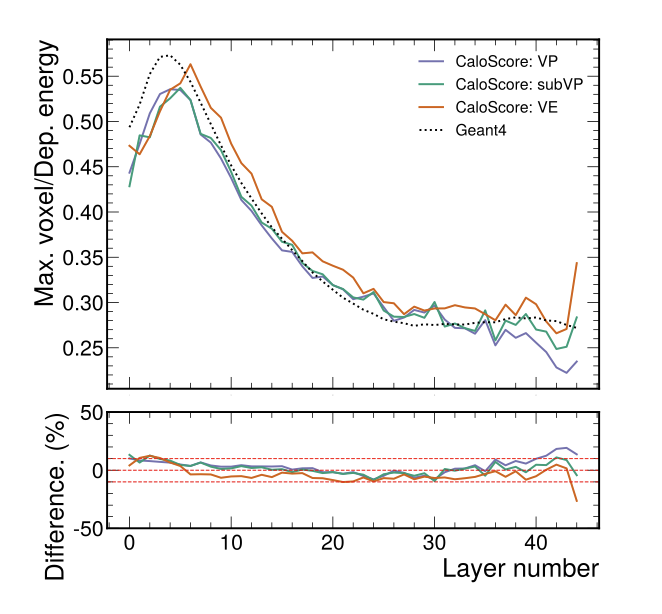

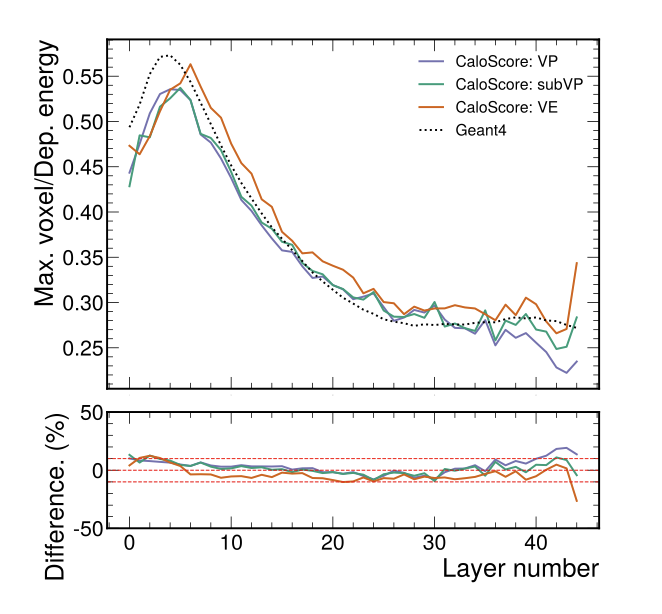

author="{V. Mikuni, B. Nachman}",

title="{Score-based Generative Models for Calorimeter Shower Simulation}",

eprint="2206.11898",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022",

}

Score-based generative models are a new class of generative algorithms that have been shown to produce realistic images even in high dimensional spaces, currently surpassing other state-of-the-art models for different benchmark categories and applications. In this work we introduce CaloScore, a score-based generative model for collider physics applied to calorimeter shower generation. Three different diffusion models are investigated using the Fast Calorimeter Simulation Challenge 2022 dataset. CaloScore is the first application of a score-based generative model in collider physics and is able to produce high-fidelity calorimeter images for all datasets, providing an alternative paradigm for calorimeter shower simulation.

author="{M. LeBlanc, B. Nachman, C. Sauer}",

title="{Going off topics to demix quark and gluon jets in $\alpha_S$ extractions}",

eprint="2206.10642",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022",

}

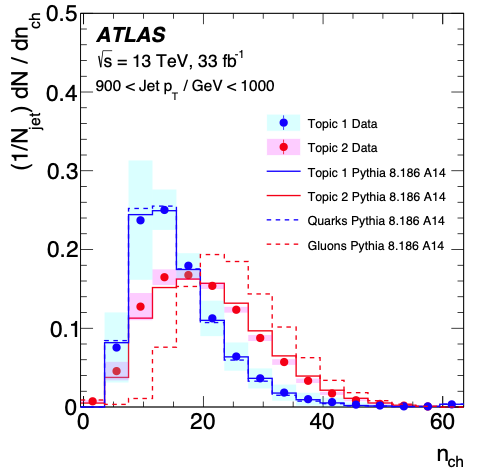

Quantum chromodynamics is the theory of the strong interaction between quarks and gluons; the coupling strength of the interaction, $\alpha_S$, is the least precisely known of all interactions in nature. An extraction of the strong coupling from the radiation pattern within jets would provide a complementary approach to conventional extractions from jet production rates and hadronic event shapes, and would be a key achievement of jet substructure at the Large Hadron Collider (LHC). Presently, the relative fraction of quark and gluon jets in a sample is the limiting factor in such extractions, as this fraction is degenerate with the value of $\alpha_S$ for the most well-understood observables. To overcome this limitation, we apply recently proposed techniques to statistically demix multiple mixtures of jets and obtain purified quark and gluon distributions based on an operational definition. We illustrate that studying quark and gluon jet substructure separately can significantly improve the sensitivity of such extractions of the strong coupling. We also discuss how using machine learning techniques or infrared- and collinear-unsafe information can improve the demixing performance without the loss of theoretical control. While theoretical research is required to connect the extracted topics with the quark and gluon objects in cross section calculations, our study illustrates the potential of demixing to reduce the dominant uncertainty for the $\alpha_S$ extraction from jet substructure at the LHC.

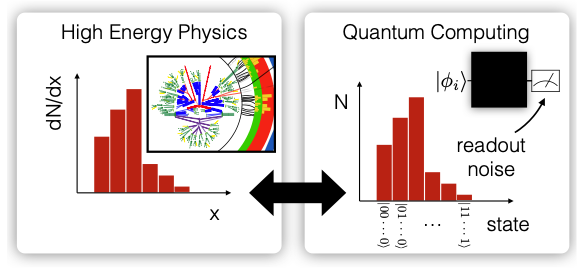

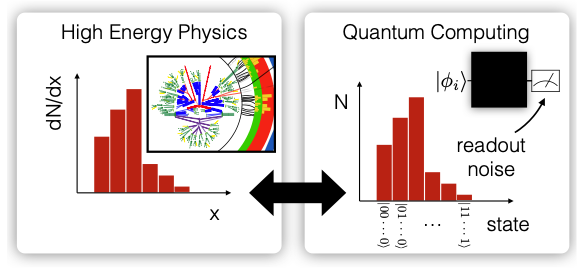

author="{S. Alvi, C. Bauer, B. Nachman}",

title="{Quantum Anomaly Detection for Collider Physics}",

eprint="2206.08391",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022",

}

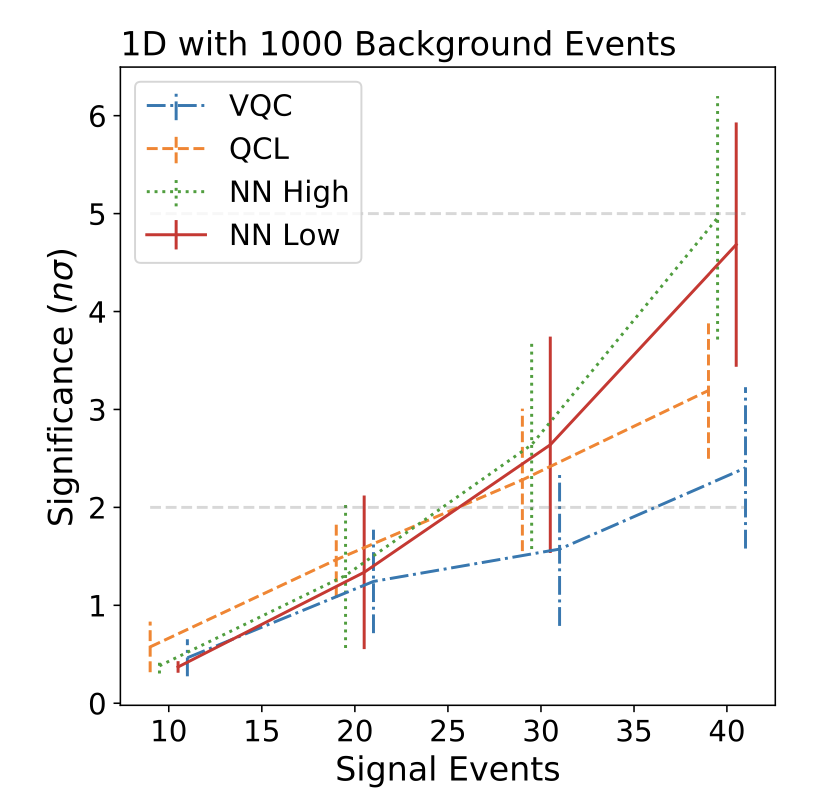

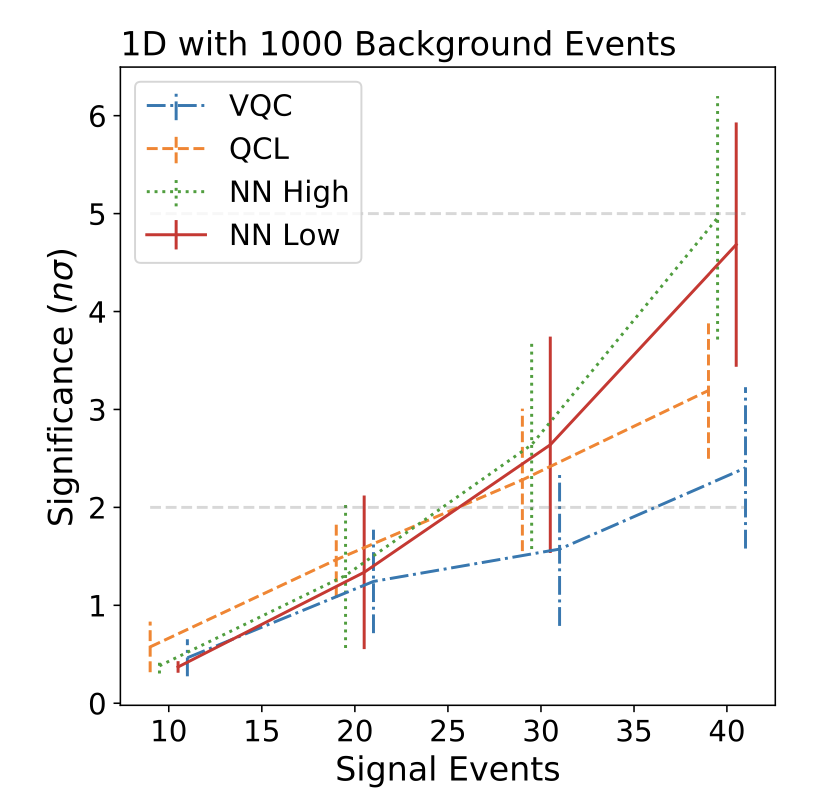

Quantum Machine Learning (QML) is an exciting tool that has received significant recent attention due in part to advances in quantum computing hardware. While there is currently no formal guarantee that QML is superior to classical ML for relevant problems, there have been many claims of an empirical advantage with high energy physics datasets. These studies typically do not claim an exponential speedup in training, but instead usually focus on an improved performance with limited training data. We explore an analysis that is characterized by a low statistics dataset. In particular, we study an anomaly detection task in the four-lepton final state at the Large Hadron Collider that is limited by a small dataset. We explore the application of QML in a semi-supervised mode to look for new physics without specifying a particular signal model hypothesis. We find no evidence that QML provides any advantage over classical ML. It could be that a case where QML is superior to classical ML for collider physics will be established in the future, but for now, classical ML is a powerful tool that will continue to expand the science of the LHC and beyond.

author="{B. M. Dillon, R. Mastandrea, B. Nachman}",

title="{Self-supervised Anomaly Detection for New Physics}",

eprint="2205.10380",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022",

}

We investigate a method of model-agnostic anomaly detection through studying jets, collimated sprays of particles produced in high-energy collisions. We train a transformer neural network to encode simulated QCD "event space" dijets into a low-dimensional "latent space" representation. We optimize the network using the self-supervised contrastive loss, which encourages the preservation of known physical symmetries of the dijets. We then train a binary classifier to discriminate a BSM resonant dijet signal from a QCD dijet background both in the event space and the latent space representations. We find the classifier performances on the event and latent spaces to be comparable. We finally perform an anomaly detection search using a weakly supervised bump hunt on the latent space dijets, finding again a comparable performance to a search run on the physical space dijets. This opens the door to using low-dimensional latent representations as a computationally efficient space for resonant anomaly detection in generic particle collision events.

author="{R. Gambhir, B. Nachman, J. Thaler}",

title="{Bias and Priors in Machine Learning Calibrations for High Energy Physics}",

eprint="2205.05084",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

journal="Phys. Rev. D",

volume="106",

pages="036011",

doi="10.1103/PhysRevD.106.036011",

year = "2022",

}

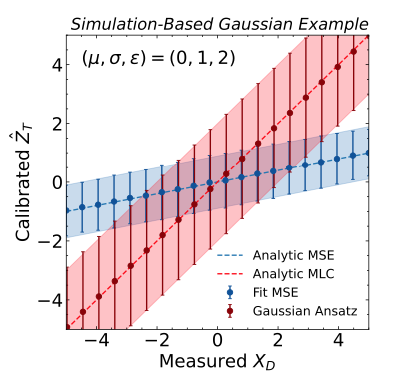

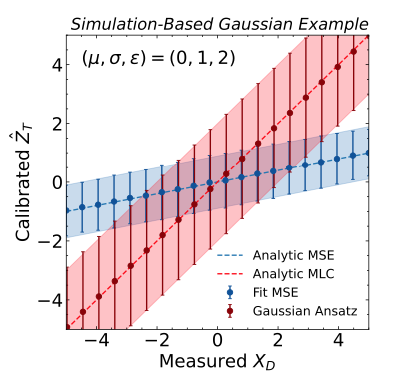

Machine learning offers an exciting opportunity to improve the calibration of nearly all reconstructed objects in high-energy physics detectors. However, machine learning approaches often depend on the spectra of examples used during training, an issue known as prior dependence. This is an undesirable property of a calibration, which needs to be applicable in a variety of environments. The purpose of this paper is to explicitly highlight the prior dependence of some machine learning-based calibration strategies. We demonstrate how some recent proposals for both simulation-based and data-based calibrations inherit properties of the sample used for training, which can result in biases for downstream analyses. In the case of simulation-based calibration, we argue that our recently proposed Gaussian Ansatz approach can avoid some of the pitfalls of prior dependence, whereas prior-independent data-based calibration remains an open problem.

author="{R. Gambhir, B. Nachman, J. Thaler}",

title="{Learning Uncertainties the Frequentist Way: Calibration and Correlation in High Energy Physics}",

eprint="2205.03413",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

journal="Phys. Rev. Lett.",

volume="129",

pages="082001",

doi="10.1103/PhysRevLett.129.082001",

year = "2022",

}

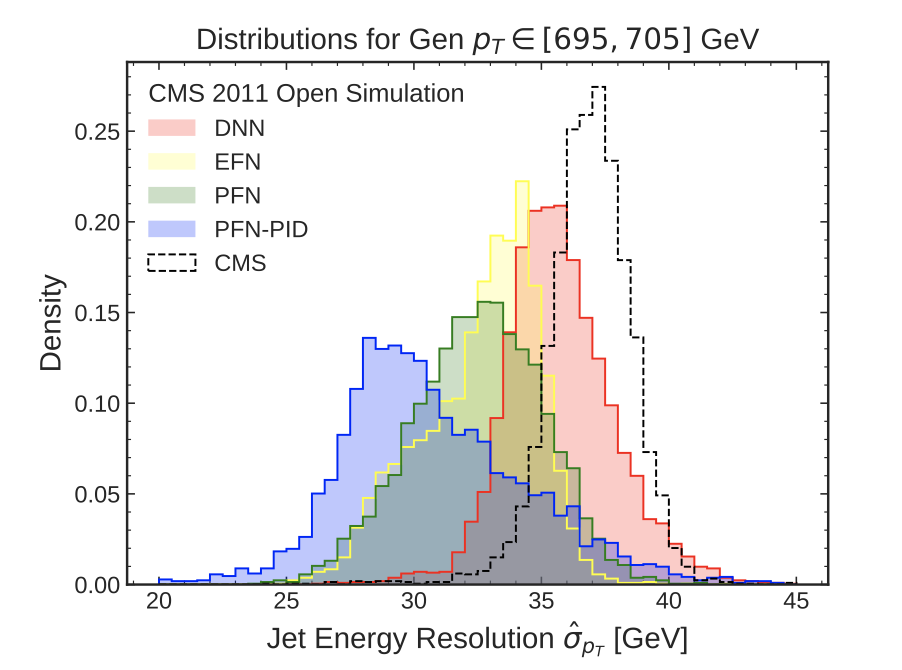

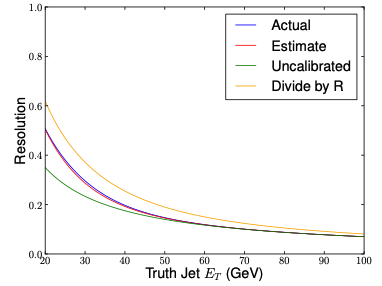

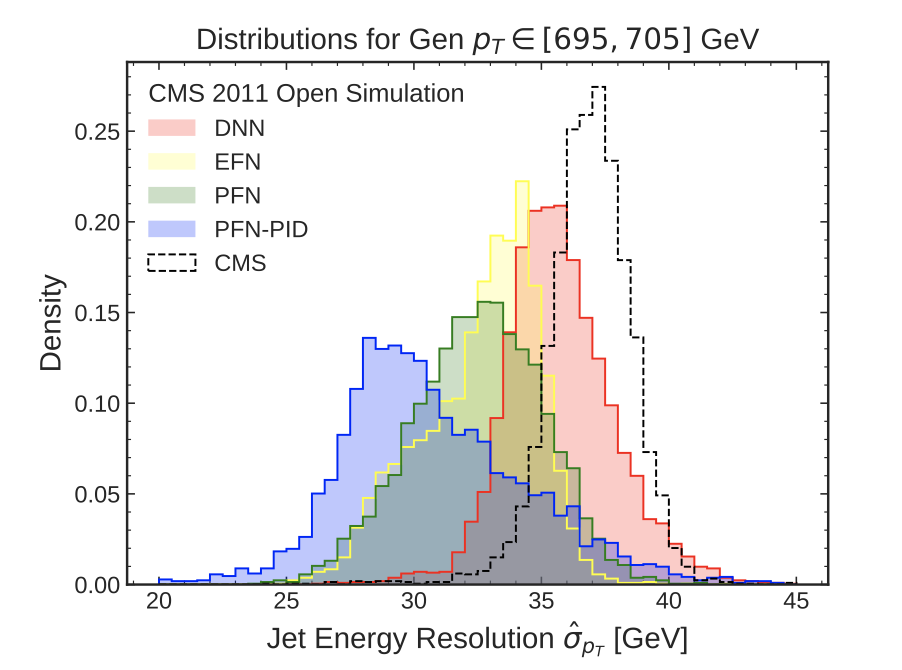

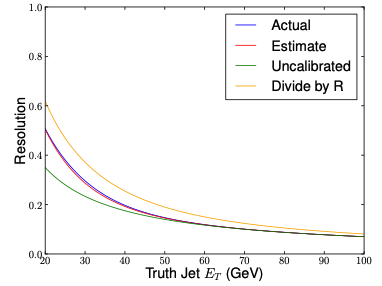

Calibration is a common experimental physics problem, whose goal is to infer the value and uncertainty of an unobservable quantity Z given a measured quantity X. Additionally, one would like to quantify the extent to which X and Z are correlated. In this paper, we present a machine learning framework for performing frequentist maximum likelihood inference with Gaussian uncertainty estimation, which also quantifies the mutual information between the unobservable and measured quantities. This framework uses the Donsker-Varadhan representation of the Kullback-Leibler divergence -- parametrized with a novel Gaussian Ansatz -- to enable a simultaneous extraction of the maximum likelihood values, uncertainties, and mutual information in a single training. We demonstrate our framework by extracting jet energy corrections and resolution factors from a simulation of the CMS detector at the Large Hadron Collider. By leveraging the high-dimensional feature space inside jets, we improve upon the nominal CMS jet resolution by upwards of 15%.
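
For reference, the Donsker-Varadhan representation named in this abstract is the standard variational form of the Kullback-Leibler divergence. The symbols $T$, $P$, and $Q$ below are generic notation assumed here for illustration, not taken from the paper:

$$D_{\mathrm{KL}}(P\,\|\,Q) = \sup_{T} \left\{ \mathbb{E}_{P}[T] - \log \mathbb{E}_{Q}\left[e^{T}\right] \right\}$$

Choosing $P = p(x,z)$ and $Q = p(x)\,p(z)$ makes the left-hand side the mutual information $I(X;Z)$, so any learned function $T(x,z)$ gives a lower bound on $I(X;Z)$ that becomes tight at the optimum. How the Gaussian Ansatz parametrizes $T$ is specific to the paper and is not reproduced here.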

author="{H1 Collaboration}",

title="{Multi-differential Jet Substructure Measurement in High $Q^2$ DIS Events with HERA-II Data}",

journal = "H1prelim-22-034",

url = "https://www-h1.desy.de/h1/www/publications/htmlsplit/H1prelim-22-034.long.html",

year = "2022",

}

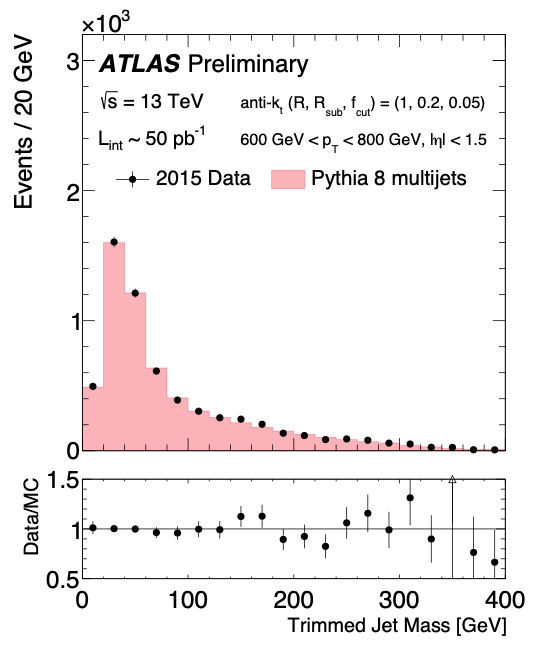

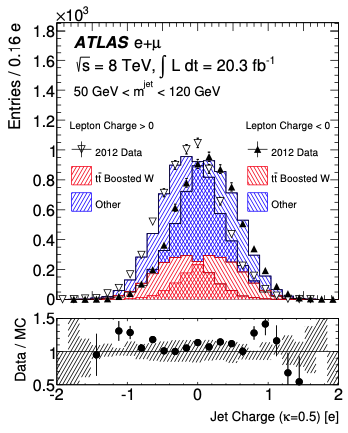

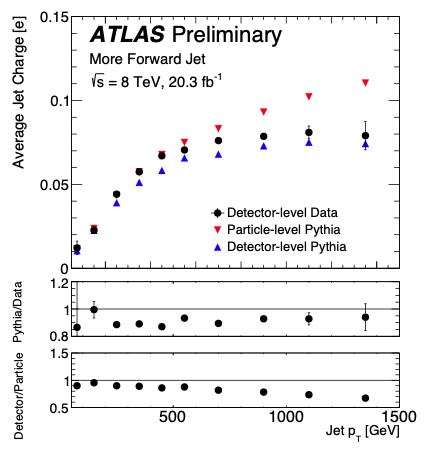

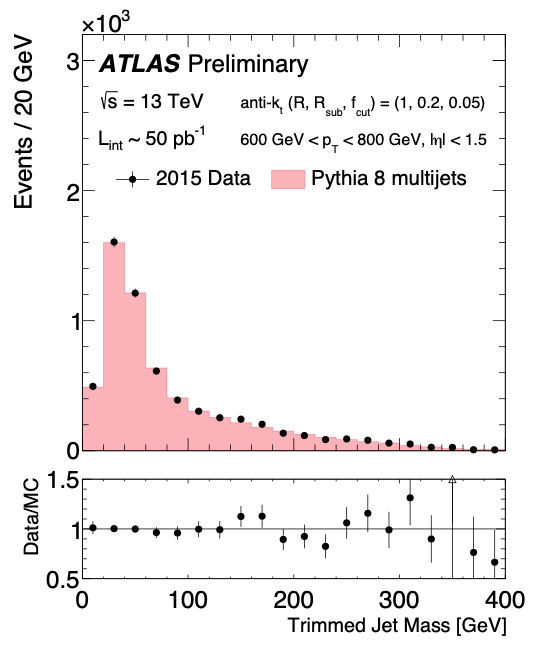

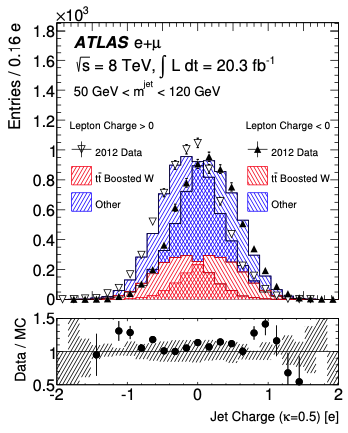

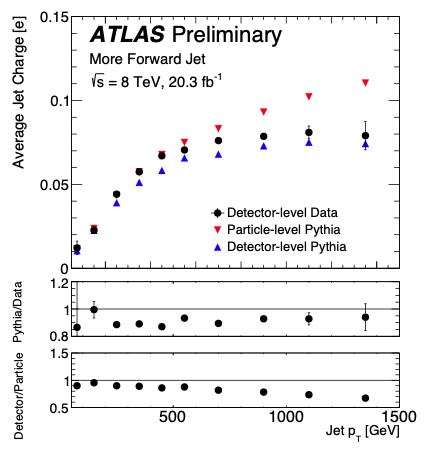

A measurement of different jet substructure observables in high $Q^2$ neutral-current deep-inelastic scattering events close to the Born kinematics is presented. Differential and multi-differential cross-sections are presented as a function of the jet's charged constituent multiplicity, momentum dispersion, jet charge, as well as three values of jet angularities. Results are split into multiple $Q^2$ intervals, probing the evolution of jet observables with energy scale. These measurements probe the description of parton showers and provide insight into non-perturbative QCD. Unfolded results are derived without binning using the machine learning-based method Omnifold. All observables are unfolded simultaneously by using reconstructed particles inside jets as inputs to a graph neural network. Results are compared with a variety of predictions.

author="{K. Cheung, Y. Chung, S. Hsu, B. Nachman}",

title="{Exploring the Universality of Hadronic Jet Classification}",

eprint="2204.03812",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022",

}

The modeling of jet substructure significantly differs between Parton Shower Monte Carlo (PSMC) programs. Despite this, we observe that machine learning classifiers trained on different PSMCs learn nearly the same function. This means that when these classifiers are applied to the same PSMC for testing, they result in nearly the same performance. This classifier universality indicates that a machine learning model trained on one simulation and tested on another simulation (or data) will likely be optimal. Our observations are based on detailed studies of shallow and deep neural networks applied to simulated Lorentz boosted Higgs jet tagging at the LHC.

author="{M. Arratia, D. Britzger, O. Long, B. Nachman}",

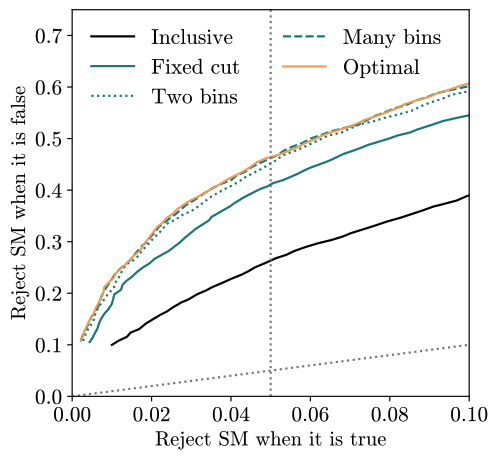

title="{Optimizing Observables with Machine Learning for Better Unfolding}",

eprint="2203.16722",

archivePrefix = "arXiv",

primaryClass = "hep-ex",

journal = "JINST",

volume = "17",

pages = "P07009",

doi = "10.1088/1748-0221/17/07/P07009",

year = "2022",

}

Most measurements in particle and nuclear physics use matrix-based unfolding algorithms to correct for detector effects. In nearly all cases, the observable is defined analogously at the particle and detector level. We point out that while the particle-level observable needs to be physically motivated to link with theory, the detector-level observable need not be and can be optimized. We show that using deep learning to define detector-level observables has the capability to improve the measurement when combined with standard unfolding methods.

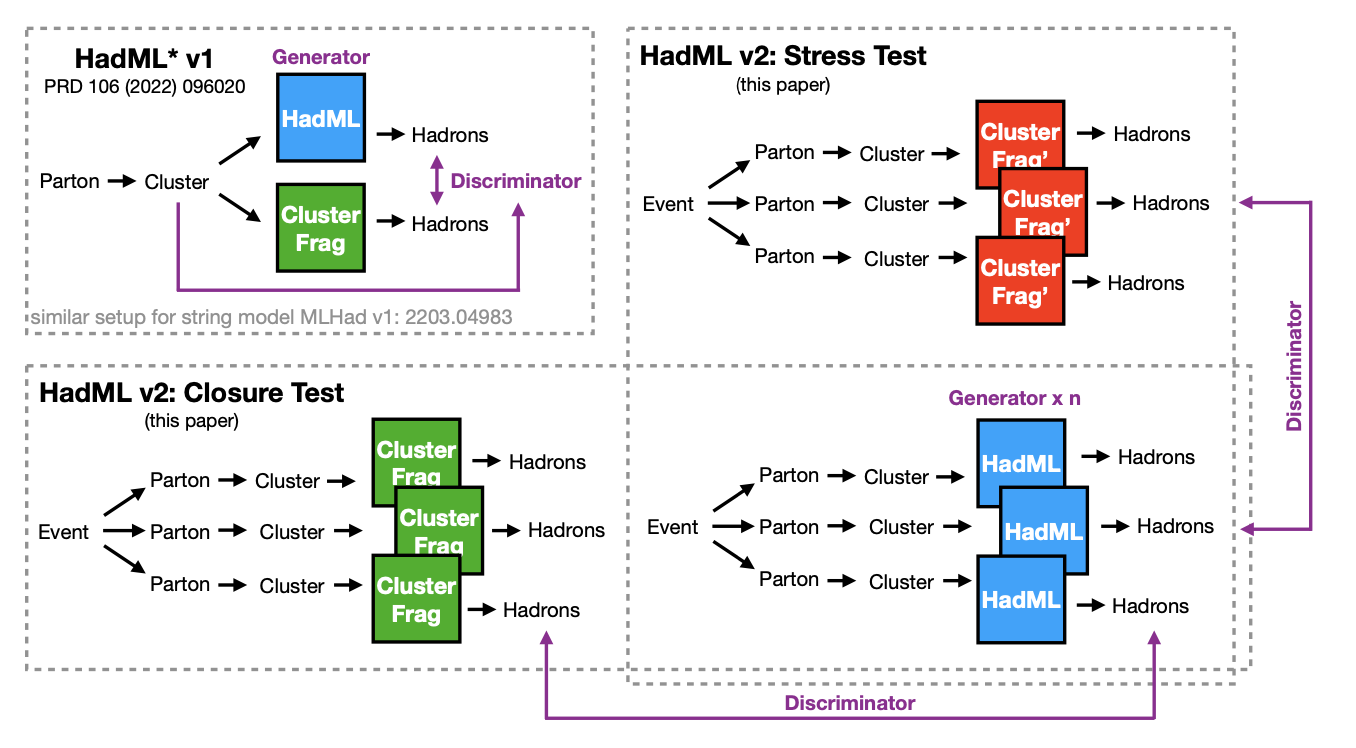

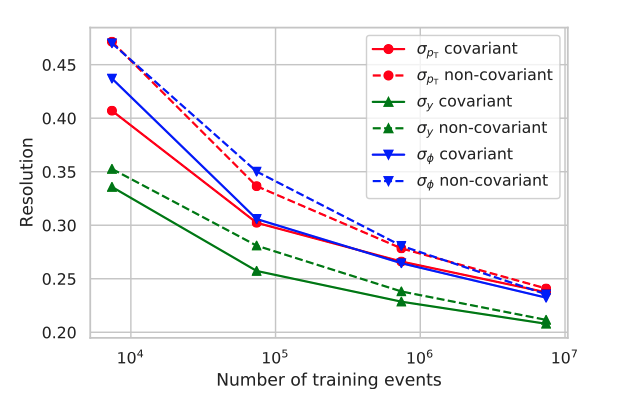

author="{A. Ghosh, X. Ju, B. Nachman, A. Siodmok}",

title="{Towards a Deep Learning Model for Hadronization}",

eprint="2203.12660",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022",

}

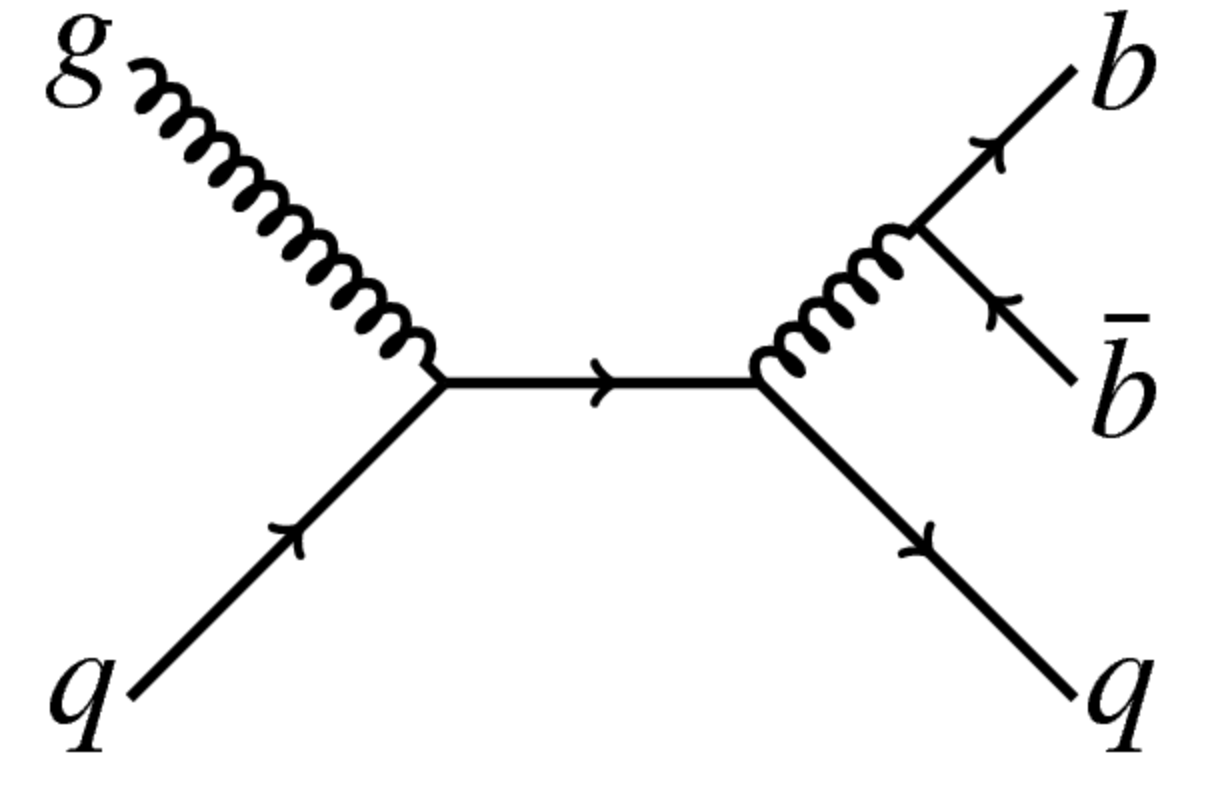

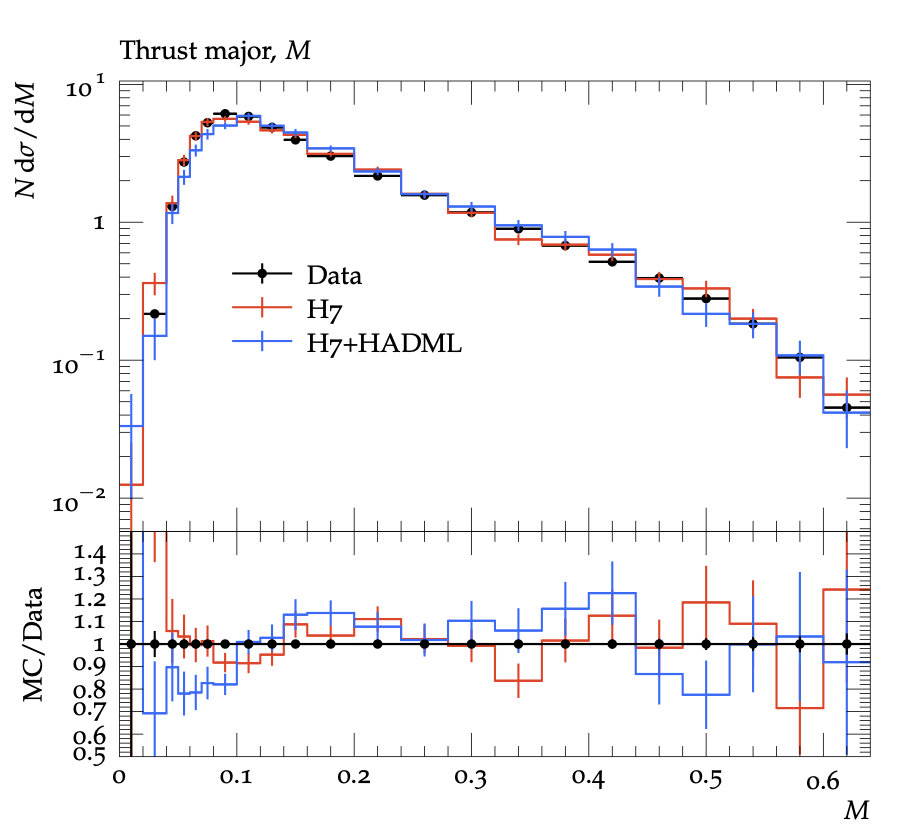

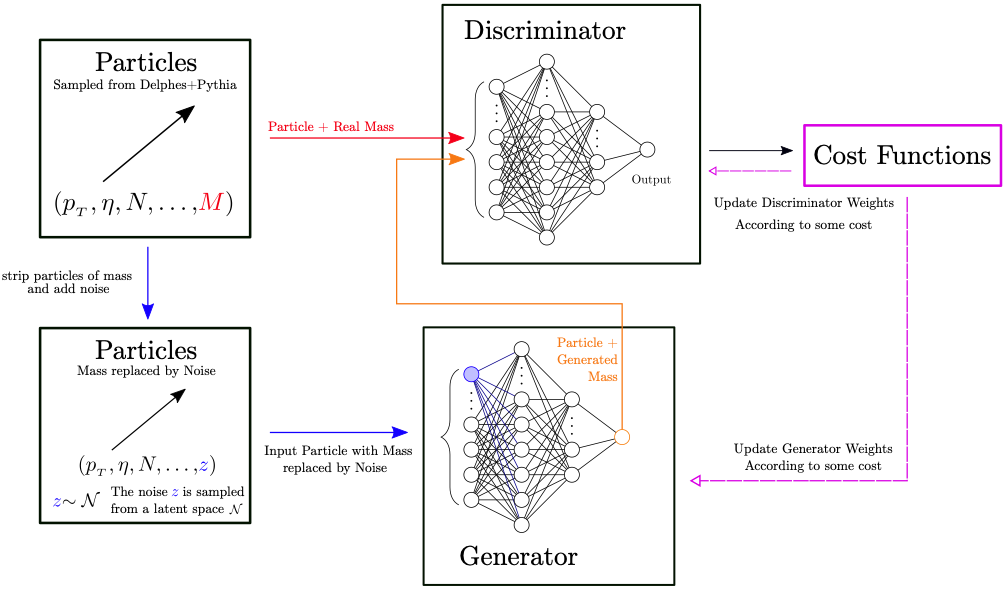

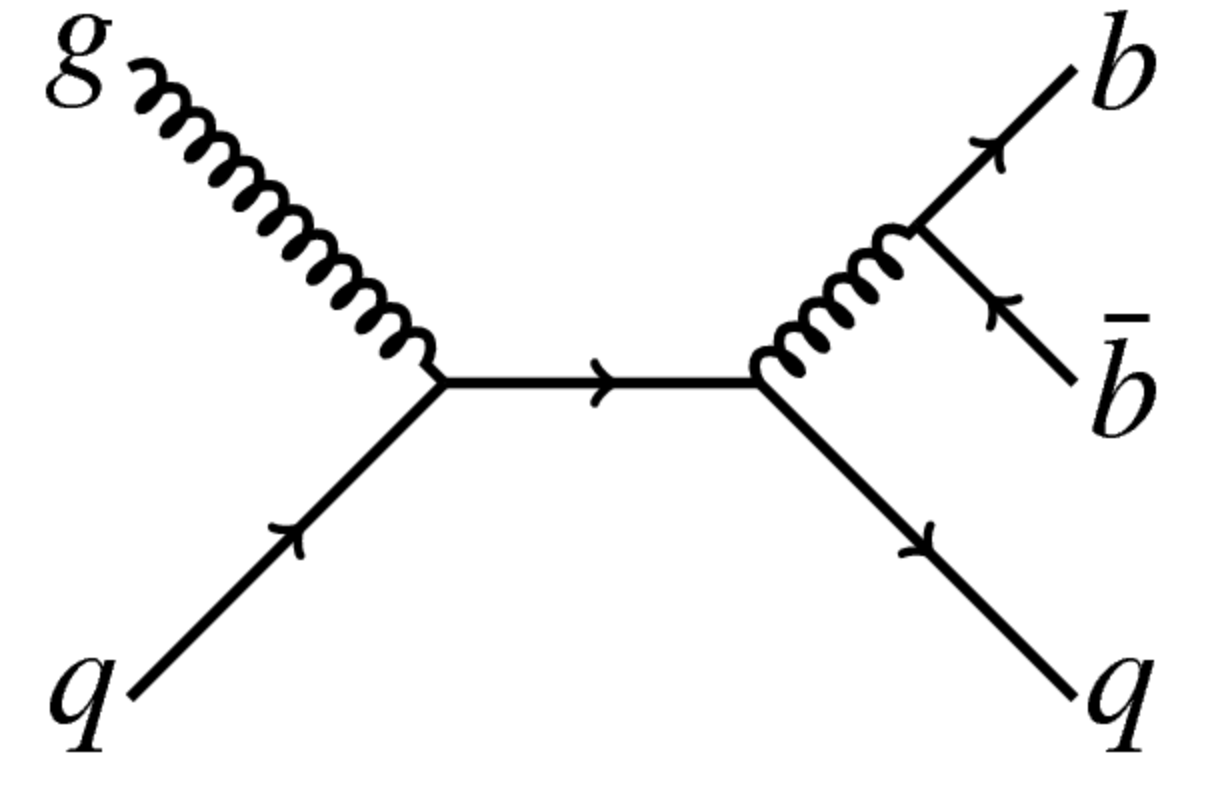

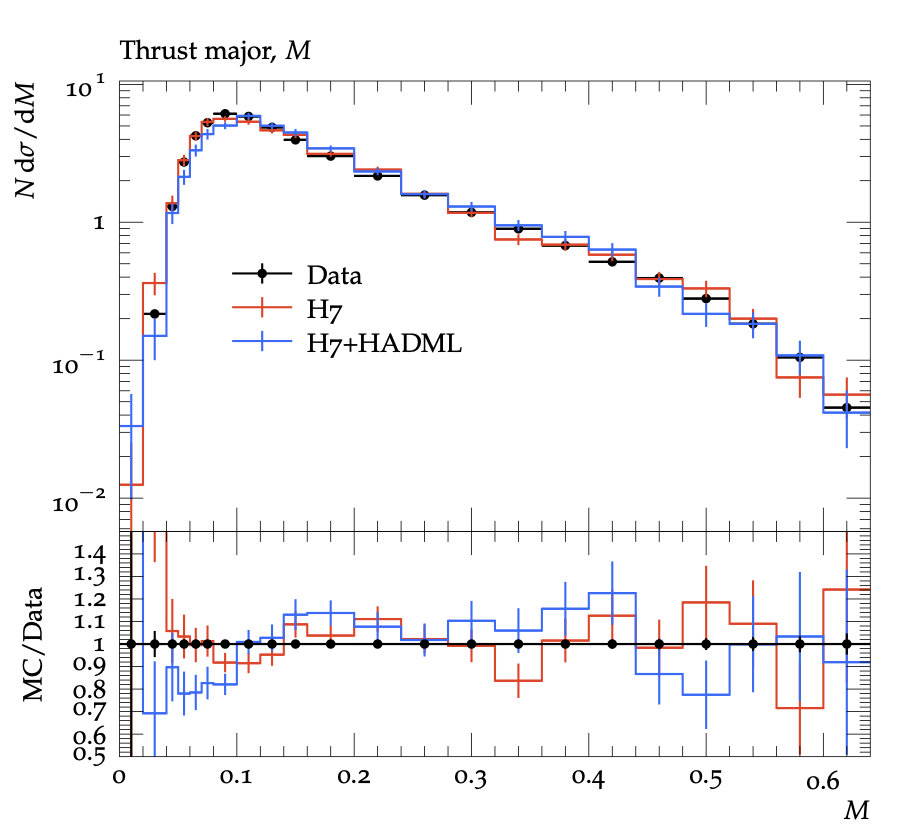

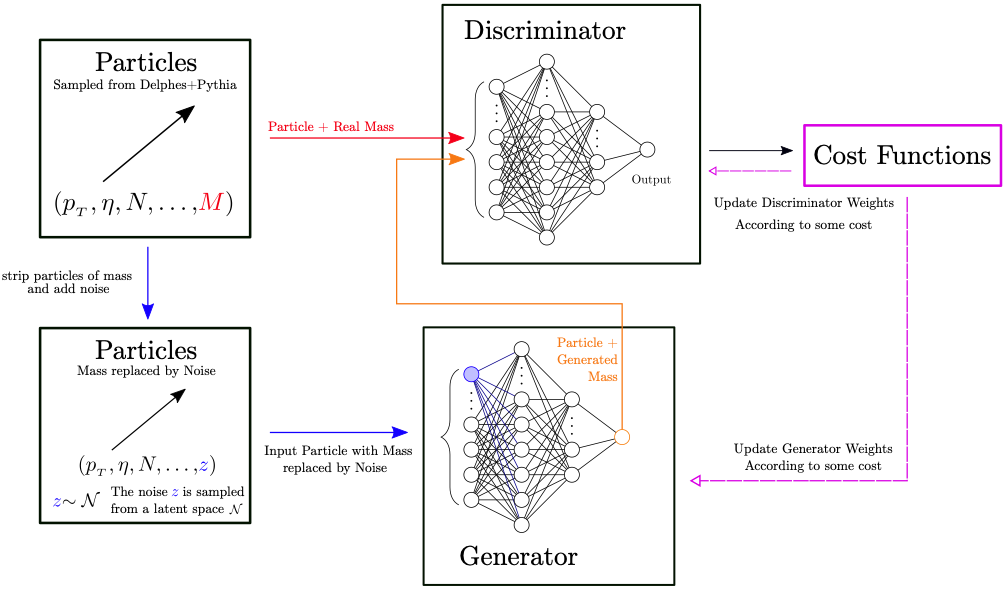

Hadronization is a complex quantum process whereby quarks and gluons become hadrons. The widely-used models of hadronization in event generators are based on physically-inspired phenomenological models with many free parameters. We propose an alternative approach whereby neural networks are used instead. Deep generative models are highly flexible, differentiable, and compatible with Graphical Processing Units (GPUs). We make the first step towards a data-driven machine learning-based hadronization model by replacing a component of the hadronization model within the Herwig event generator (cluster model) with a Generative Adversarial Network (GAN). We show that a GAN is capable of reproducing the kinematic properties of cluster decays. Furthermore, we integrate this model into Herwig to generate entire events that can be compared with the output of the public Herwig simulator as well as with electron-positron data.

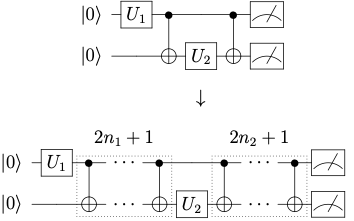

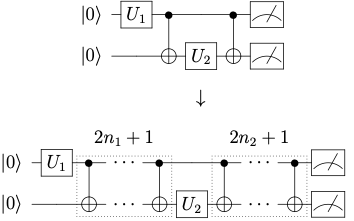

author="P. Deliyannis, J. Sud, D. Chamaki, Z. Webb-Mack, C. W. Bauer, B. Nachman",

title="{Improving Quantum Simulation Efficiency of Final State Radiation with Dynamic Quantum Circuits}",

eprint="2203.10018",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

journal = "Phys. Rev. D",

pages = "036007",

volume = "106",

year = "2022",

doi="10.1103/PhysRevD.106.036007",

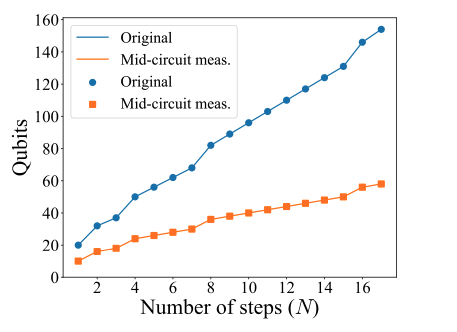

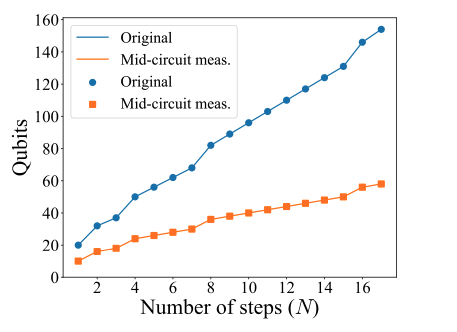

Reference arXiv:1904.03196 recently introduced an algorithm (QPS) for simulating parton showers with intermediate flavor states using polynomial resources on a digital quantum computer. We make use of a new quantum hardware capability called dynamical quantum computing to improve the scaling of this algorithm, which significantly improves the precision of the method. In particular, we modify the quantum parton shower circuit to incorporate mid-circuit qubit measurements, resets, and quantum operations conditioned on classical information. This reduces the computational depth and the qubit requirements. Using "matrix product state" statevector simulators, we demonstrate that the improved algorithm yields expected results for 2, 3, 4, and 5 steps of the algorithm. We compare absolute costs with the original QPS algorithm, and show that dynamical quantum computing can significantly reduce costs in the class of digital quantum algorithms representing quantum walks (which includes the QPS).

author="K. Krzyzanska and B. Nachman",

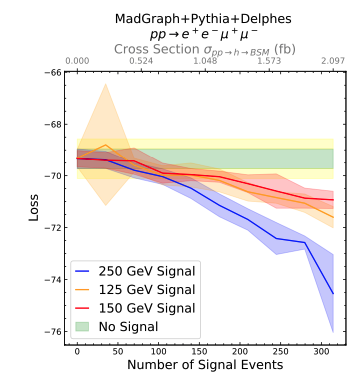

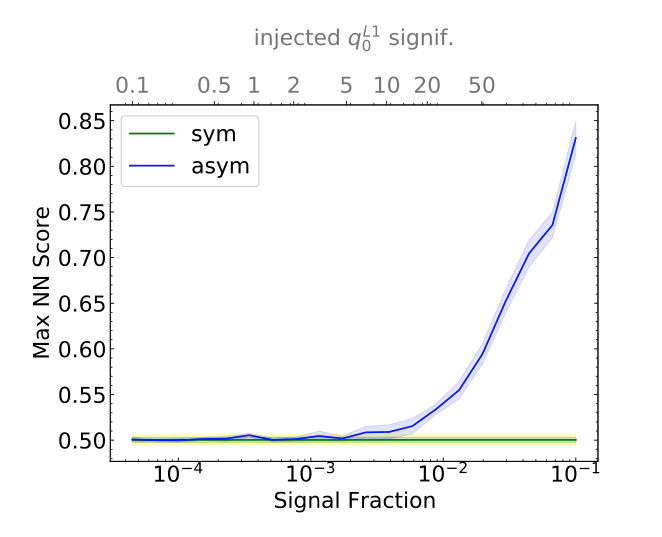

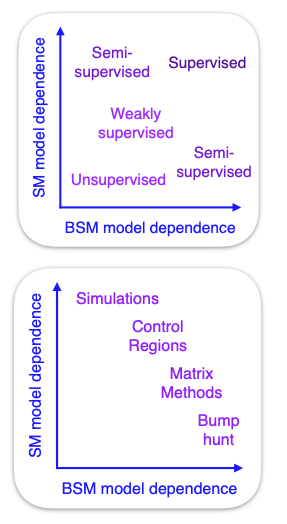

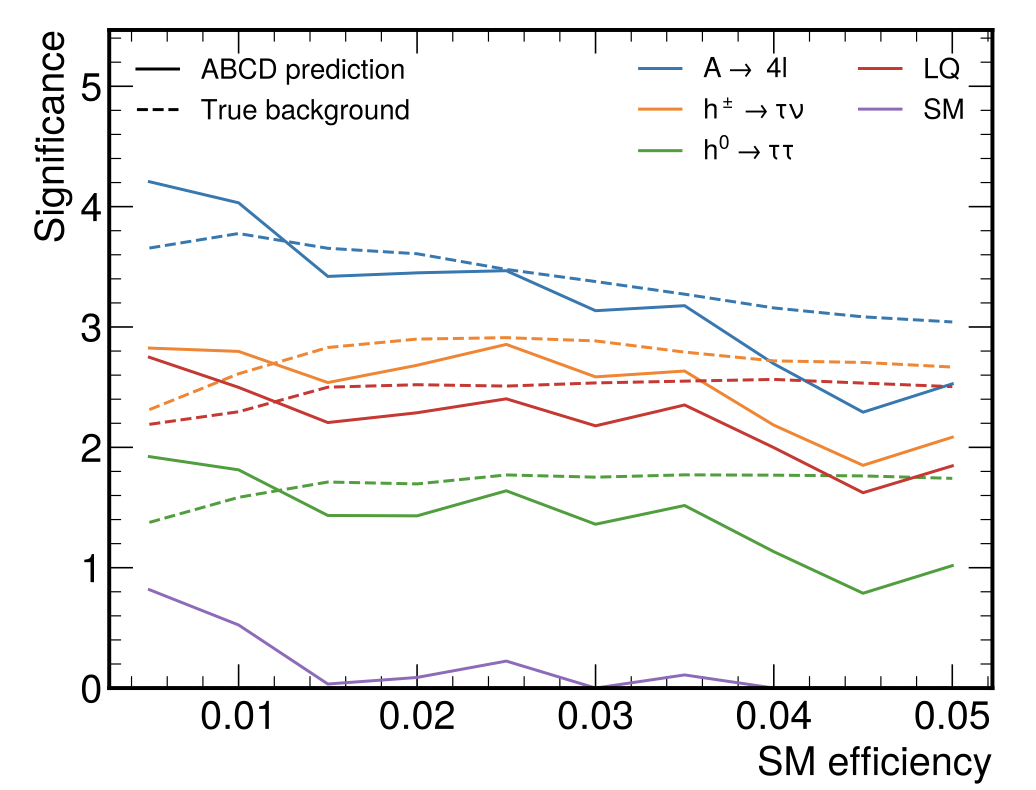

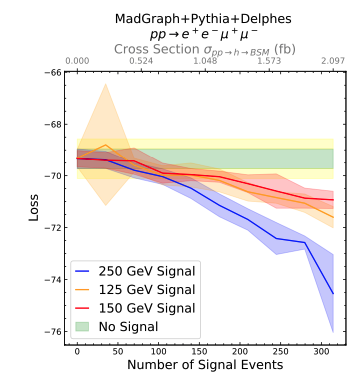

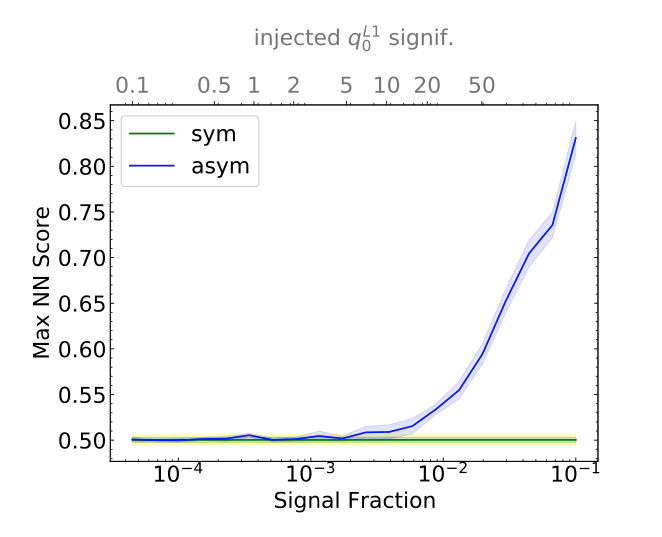

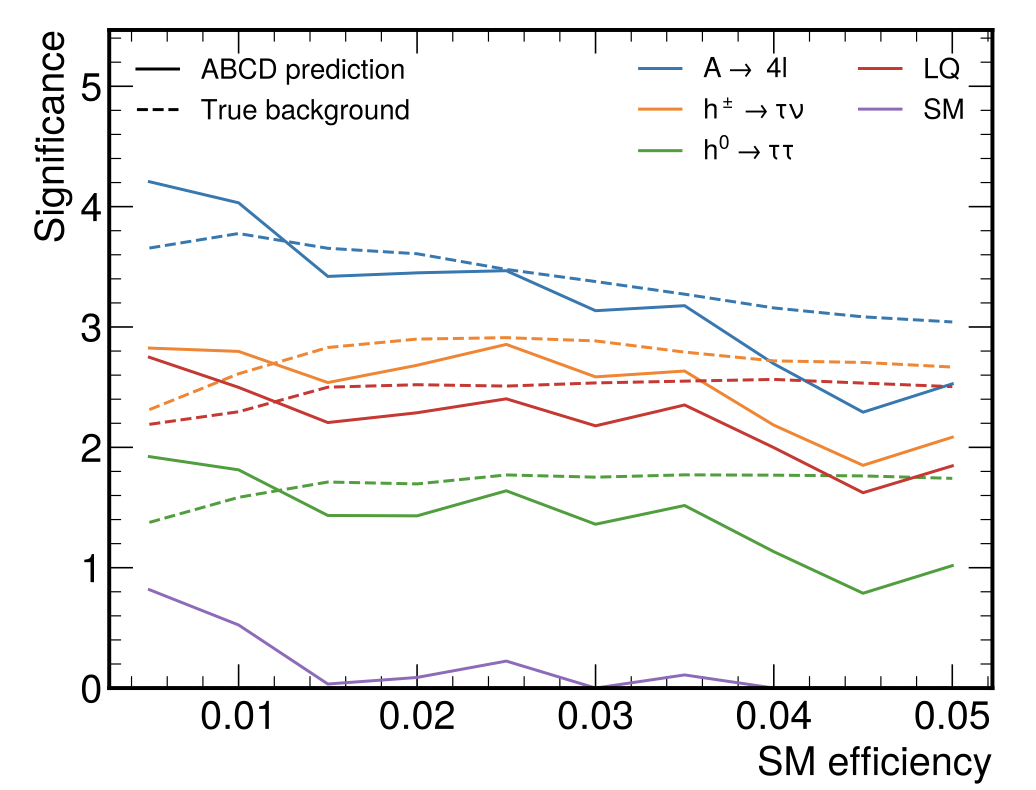

title="{Simulation-based Anomaly Detection for Multileptons at the LHC}",

eprint="2203.09601",

archivePrefix = "arXiv",

primaryClass = "hep-ph",

year = "2022",

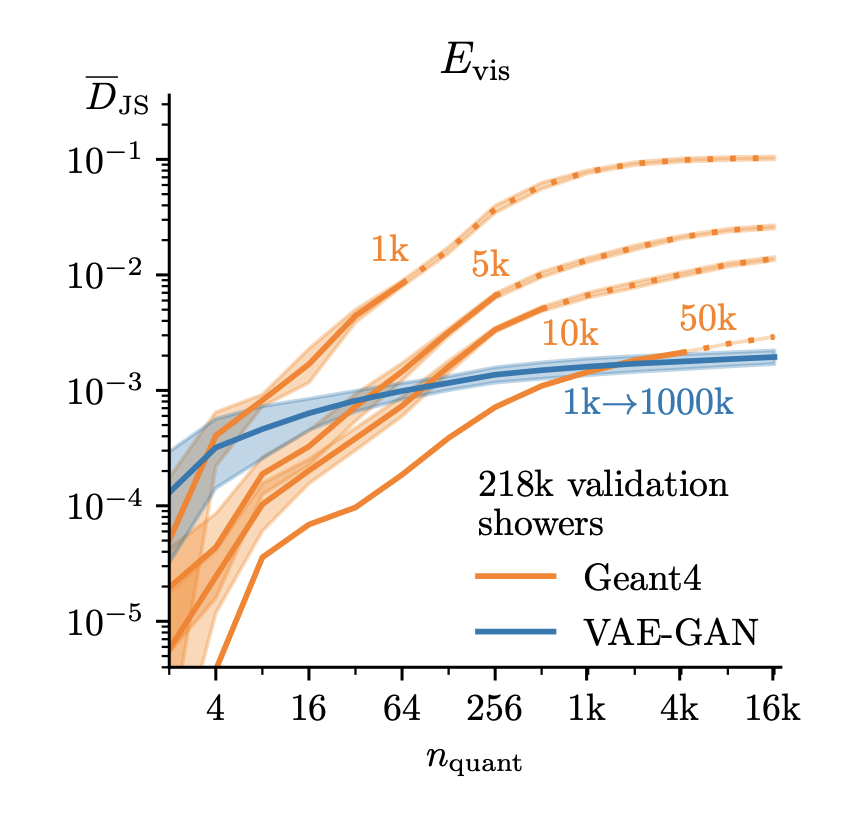

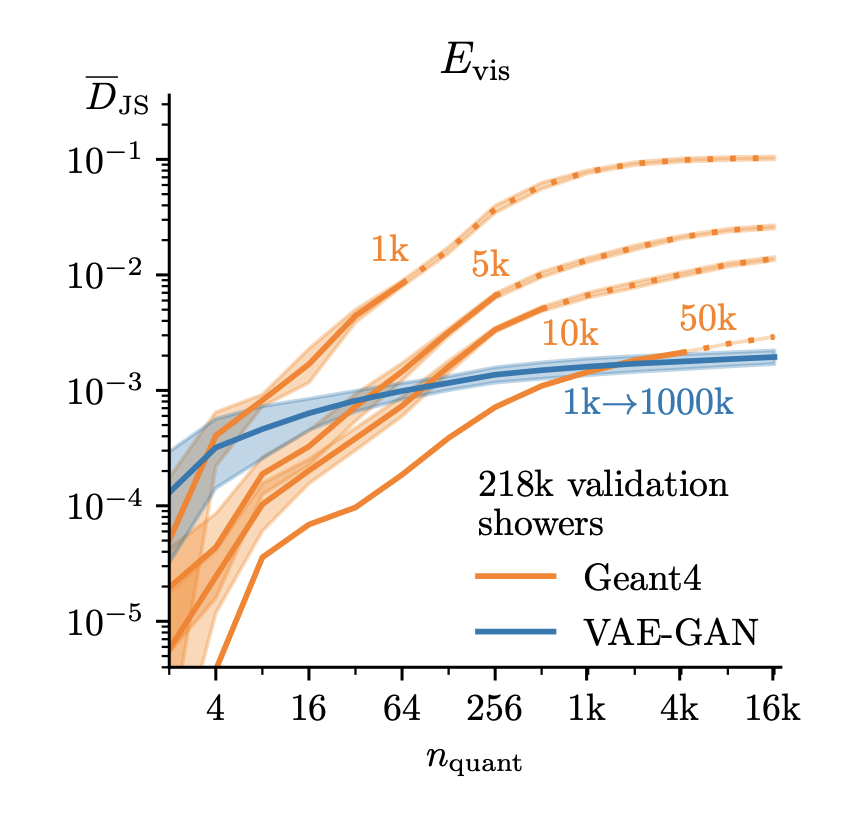
